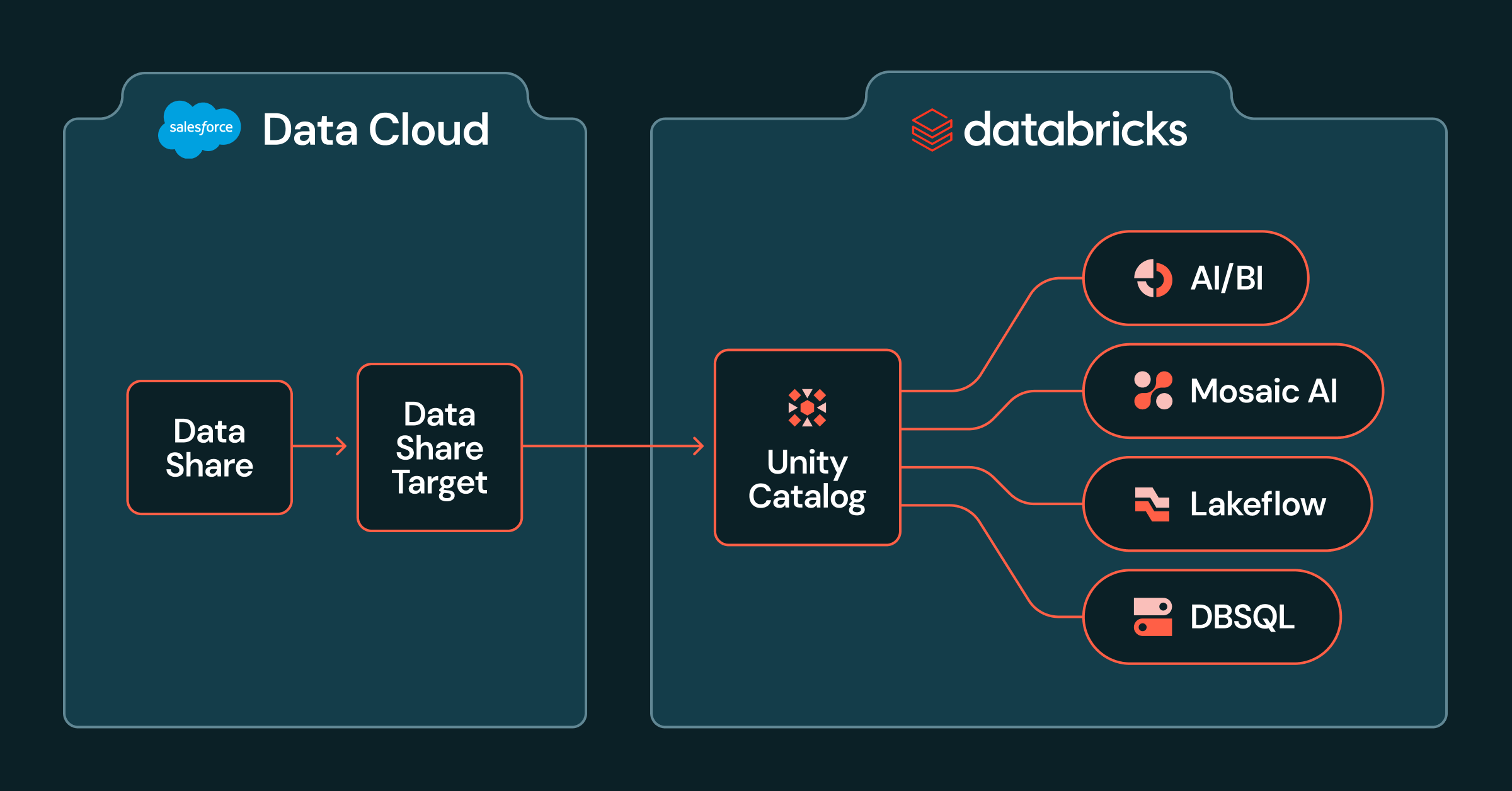

Salesforce Data Cloud File Sharing into Databricks Unity Catalog is now in public preview. This integration lets you query Salesforce Data Cloud Objects directly from the Databricks Data Intelligence Platform, so you can run analytics without building pipelines or maintaining duplicate data. You can use your Data Cloud customer 360 assets in place while Databricks handles processing and analysis in real time.

This new functionality complements the data federation from Databricks into Salesforce Data Cloud (the opposite direction), which lets you use Salesforce Data Cloud to activate your Databricks data across apps and experiences.

Evolving Data Access: From Query Federation to File Sharing

You may already be familiar with our existing federated queries feature using Lakehouse Federation. Federated queries let you push down SQL queries to Salesforce Data Cloud, so the computation runs inside Salesforce Data Cloud. While this provides immediate access, performance and cost can sometimes be a limiting factor for complex queries or larger datasets.

With File Sharing, we’re taking a significant leap forward. This feature allows you to:

- Access data in place: Securely access and analyze your Salesforce Data Cloud data in near real time directly using Databricks compute, eliminating the need to move or duplicate your data.

- Improve performance and reduce costs with Databricks compute: By leveraging Databricks’ powerful and optimized compute capabilities, you can achieve better query performance and significantly reduce your operational costs. Instead of pushing down the query, the data itself is analyzed in Databricks compute, so Databricks’ engine handles the heavy lifting.

- Experience secure, secret-less authentication: This feature uses Workload Identity Federation, providing a robust and secure authentication mechanism without the need to manage secrets.

Leverage Salesforce Data Cloud data in Databricks to deliver marketing insights

Imagine running advanced analytics, building machine learning models, and generating dashboards and reports directly with your Salesforce customer profiles, engagement data, and more, all without ever extracting the data from Salesforce Data Cloud. This not only streamlines your data pipelines but also ensures you're always working with the freshest data. For marketers, this unlocks immense business value.

- Enhance Personalization: Combine your rich Salesforce customer profiles with other enterprise data in Databricks to create a truly unified view of your customers, enabling highly personalized marketing campaigns and customer journeys.

- Refine Audience Modeling: Build sophisticated audience segments using Databricks’ advanced analytics and machine learning capabilities on live Salesforce Data Cloud data, leading to more effective targeting and higher conversion rates.

- Accelerate Insights: Quickly analyze customer behavior, campaign performance, and product interactions without data movement delays, allowing for agile decision-making and optimization of marketing strategies.
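As a rough illustration of combining shared Salesforce profiles with other enterprise data, the sketch below builds a join query as a SQL string. Every table and column name in it (`salesforce_dc_share.default.unified_individual`, `main.sales.orders`, `customer_id`, `order_id`, `amount`) is a hypothetical placeholder, not a name defined by Salesforce or Databricks; substitute the objects that actually appear in your catalog.

```python
def customer_spend_query(profiles: str, orders: str, key: str = "customer_id") -> str:
    """Build a SQL query that joins shared customer profiles to an orders table.

    `profiles` and `orders` are fully qualified table names (catalog.schema.table).
    The column names order_id and amount are assumptions made for illustration.
    """
    return (
        f"SELECT p.{key}, COUNT(o.order_id) AS order_count, SUM(o.amount) AS total_spend\n"
        f"FROM {profiles} AS p\n"
        f"LEFT JOIN {orders} AS o ON p.{key} = o.{key}\n"
        f"GROUP BY p.{key}"
    )


# Hypothetical names: a catalog created from a Data Cloud share and a lakehouse table.
query = customer_spend_query(
    "salesforce_dc_share.default.unified_individual",
    "main.sales.orders",
)
print(query)
```

In a Databricks notebook, a string like this could be passed to `spark.sql(query)`. The LEFT JOIN keeps profiles that have no matching orders, which is usually what an audience-building query needs.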

Getting Started: Connecting Databricks to Salesforce Data Cloud

To enable Zero Copy File Sharing, your Salesforce Data Cloud admin and your Databricks admin need to work together. Here's a high-level overview of the steps to get started (see our documentation for full details):


- On Salesforce Data Cloud:

  - Create a data share target in Data Cloud.

  - Select Databricks as the target.

  - Use the Core Tenant ID and Tenant Endpoint to complete the connection setup in Databricks (see the Databricks steps below).

  - Retrieve the Connection URL and Account URL from Databricks (see the Databricks steps below) and enter them.

  - At this point, you have created a Data Share Target.

  - Create a Data Share containing the objects you want to share, and link it to the Data Share Target.

- On Databricks:

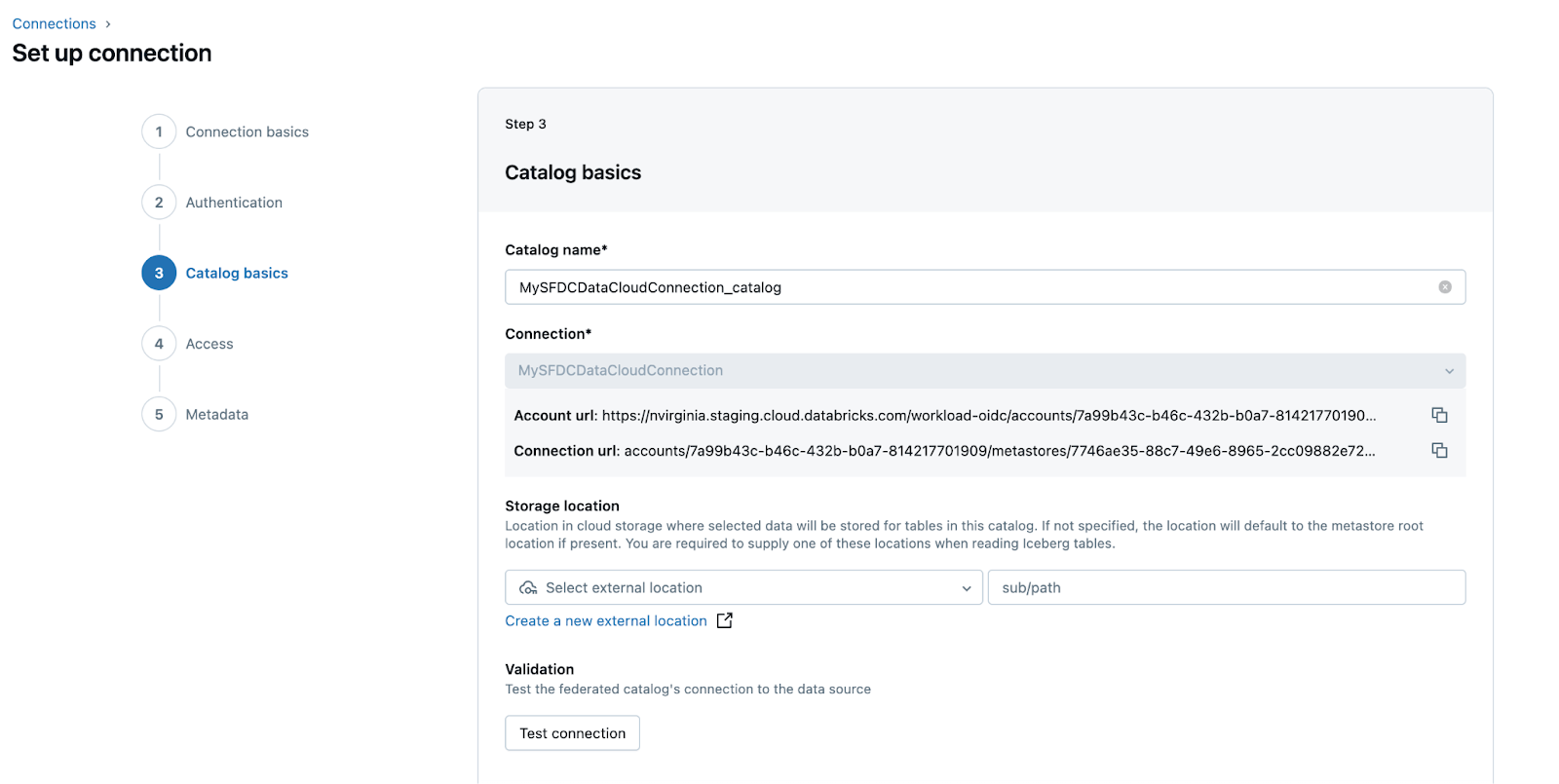

- In your Databricks workspace, navigate to the Catalog pane and choose “Add a connection”.

- Enter a user-friendly identify and choose “Salesforce Knowledge Cloud File Sharing” as connection sort.

- Enter the Core Tenant ID and Tenant Endpoint particulars supplied by Salesforce admin (see above).

- Present Connection URL and Account URL to Salesforce Admin (see step 1.c) to complete establishing the info share goal.

- Select a storage location from the drop-down menu; this location will solely retailer metadata.

- Click on “Create Catalog”.

Once you connect your Salesforce Data Cloud Data Share, it appears in Unity Catalog as a catalog. From there, you can set permissions and use Databricks to query and analyze the data.
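A minimal sketch of that last step, assuming the share surfaced as a catalog: the helpers below only assemble standard Unity Catalog SQL statements (`GRANT ... ON CATALOG` and a three-level `SELECT`). The catalog, schema, table, and group names are hypothetical placeholders chosen for illustration; the real names depend on your Data Share and workspace.

```python
def quote_ident(name: str) -> str:
    """Backtick-quote a SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def grant_read_access(catalog: str, principal: str) -> list[str]:
    """Return GRANT statements giving a principal read access to a whole catalog."""
    c, p = quote_ident(catalog), quote_ident(principal)
    return [
        f"GRANT USE CATALOG ON CATALOG {c} TO {p}",
        f"GRANT USE SCHEMA ON CATALOG {c} TO {p}",
        f"GRANT SELECT ON CATALOG {c} TO {p}",
    ]


def preview_query(catalog: str, schema: str, table: str, limit: int = 10) -> str:
    """Return a SELECT that previews rows using the catalog.schema.table namespace."""
    c, s, t = (quote_ident(x) for x in (catalog, schema, table))
    return f"SELECT * FROM {c}.{s}.{t} LIMIT {int(limit)}"


# Hypothetical names; replace them with the catalog and group in your workspace.
for statement in grant_read_access("salesforce_dc_share", "marketing-analysts"):
    print(statement)
print(preview_query("salesforce_dc_share", "default", "unified_individual"))
```

In a notebook, each generated statement could be run with `spark.sql(statement)`. Granting at the catalog level relies on Unity Catalog privilege inheritance; grant on individual schemas or tables instead if you need narrower access.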

In essence, if you have invested in Salesforce Data Cloud and want to maximize its value with Databricks’ advanced analytics capabilities without data movement, File Sharing is the answer.

Join us at the Data + AI Summit to learn more about this feature in our joint session: Unlock the Potential of Your Enterprise Data With Zero-Copy Data Sharing, featuring SAP and Salesforce.