OpenAI has disrupted over 20 malicious cyber operations abusing its AI-powered chatbot, ChatGPT, for debugging and developing malware, spreading misinformation, evading detection, and conducting spear-phishing attacks.

The report, which focuses on operations since the beginning of the year, constitutes the first official confirmation that mainstream generative AI tools are used to enhance offensive cyber operations.

The first signs of such activity were reported by Proofpoint in April, which suspected TA547 (aka “Scully Spider”) of deploying an AI-written PowerShell loader for their final payload, the Rhadamanthys info-stealer.

Last month, HP Wolf researchers reported with high confidence that cybercriminals targeting French users were employing AI tools to write scripts used as part of a multi-step infection chain.

The latest report by OpenAI confirms the abuse of ChatGPT, presenting cases of Chinese and Iranian threat actors leveraging it to enhance the effectiveness of their operations.

Use of ChatGPT in real attacks

The first threat actor outlined by OpenAI is ‘SweetSpecter,’ a Chinese adversary first documented by Cisco Talos analysts in November 2023 as a cyber-espionage threat group targeting Asian governments.

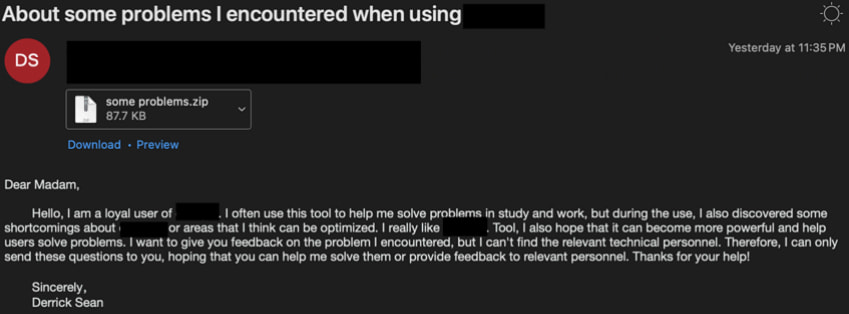

OpenAI reports that SweetSpecter targeted it directly, sending spear-phishing emails with malicious ZIP attachments masked as support requests to the personal email addresses of OpenAI employees.

If opened, the attachments triggered an infection chain, leading to SugarGh0st RAT being dropped on the victim’s system.

Source: Proofpoint

Upon further investigation, OpenAI found that SweetSpecter was using a cluster of ChatGPT accounts that performed scripting and vulnerability analysis research with the help of the LLM tool.

The threat actors used ChatGPT for the following requests:

| Activity | LLM ATT&CK Framework Category |
| --- | --- |
| Asking about vulnerabilities in various applications. | LLM-informed reconnaissance |
| Asking how to search for specific versions of Log4j that are vulnerable to the critical RCE Log4Shell. | LLM-informed reconnaissance |
| Asking about popular content management systems used abroad. | LLM-informed reconnaissance |
| Asking for information on specific CVE numbers. | LLM-informed reconnaissance |
| Asking how internet-wide scanners are made. | LLM-informed reconnaissance |
| Asking how sqlmap would be used to upload a potential web shell to a target server. | LLM-assisted vulnerability research |
| Asking for help finding ways to exploit infrastructure belonging to a prominent car manufacturer. | LLM-assisted vulnerability research |
| Providing code and asking for additional help using communication services to programmatically send text messages. | LLM-enhanced scripting techniques |
| Asking for help debugging the development of an extension for a cybersecurity tool. | LLM-enhanced scripting techniques |
| Asking for help to debug code that's part of a larger framework for programmatically sending text messages to attacker-specified numbers. | LLM-aided development |
| Asking for themes that government department employees would find interesting and what would be good names for attachments to avoid being blocked. | LLM-supported social engineering |
| Asking for variations of an attacker-provided job recruitment message. | LLM-supported social engineering |

The second case concerns the Iranian Government Islamic Revolutionary Guard Corps (IRGC)-affiliated threat group ‘CyberAv3ngers,’ known for targeting industrial systems in critical infrastructure locations in Western countries.

OpenAI reports that accounts associated with this threat group asked ChatGPT to produce default credentials in widely used Programmable Logic Controllers (PLCs), develop custom bash and Python scripts, and obfuscate code.

The Iranian hackers also used ChatGPT to plan their post-compromise activity, learn how to exploit specific vulnerabilities, and choose methods to steal user passwords on macOS systems, as listed below.

| Activity | LLM ATT&CK Framework Category |
| --- | --- |
| Asking to list commonly used industrial routers in Jordan. | LLM-informed reconnaissance |
| Asking to list industrial protocols and ports that can connect to the Internet. | LLM-informed reconnaissance |
| Asking for the default password for a Tridium Niagara device. | LLM-informed reconnaissance |
| Asking for the default user and password of a Hirschmann RS Series Industrial Router. | LLM-informed reconnaissance |
| Asking for recently disclosed vulnerabilities in CrushFTP and the Cisco Integrated Management Controller as well as older vulnerabilities in the Asterisk Voice over IP software. | LLM-informed reconnaissance |
| Asking for lists of electricity companies, contractors and common PLCs in Jordan. | LLM-informed reconnaissance |
| Asking why a bash code snippet returns an error. | LLM-enhanced scripting techniques |
| Asking to create a Modbus TCP/IP client. | LLM-enhanced scripting techniques |
| Asking to scan a network for exploitable vulnerabilities. | LLM-assisted vulnerability research |
| Asking to scan zip files for exploitable vulnerabilities. | LLM-assisted vulnerability research |
| Asking for a process hollowing C source code example. | LLM-assisted vulnerability research |
| Asking how to obfuscate VBA script writing in Excel. | LLM-enhanced anomaly detection evasion |
| Asking the model to obfuscate code (and providing the code). | LLM-enhanced anomaly detection evasion |
| Asking how to copy a SAM file. | LLM-assisted post-compromise activity |
| Asking for an alternative tool to mimikatz. | LLM-assisted post-compromise activity |
| Asking how to use pwdump to export a password. | LLM-assisted post-compromise activity |
| Asking how to access user passwords in macOS. | LLM-assisted post-compromise activity |

The third case highlighted in OpenAI’s report concerns Storm-0817, also an Iranian threat actor.

That group reportedly used ChatGPT to debug malware, create an Instagram scraper, translate LinkedIn profiles into Persian, and develop custom malware for the Android platform along with the supporting command and control infrastructure, as listed below.

| Activity | LLM ATT&CK Framework Category |
| --- | --- |
| Seeking help debugging and implementing an Instagram scraper. | LLM-enhanced scripting techniques |
| Translating LinkedIn profiles of Pakistani cybersecurity professionals into Persian. | LLM-informed reconnaissance |
| Asking for debugging and development support in implementing Android malware and the corresponding command and control infrastructure. | LLM-aided development |

The malware created with the help of OpenAI’s chatbot can steal contact lists, call logs, and files stored on the device, take screenshots, scrutinize the user’s browsing history, and get their precise location.

“In parallel, STORM-0817 used ChatGPT to support the development of server side code necessary to handle connections from compromised devices,” reads the OpenAI report.

“This allowed us to see that the command and control server for this malware is a WAMP (Windows, Apache, MySQL & PHP/Perl/Python) setup and during testing was using the domain stickhero[.]pro.”

All OpenAI accounts used by the above threat actors were banned, and the associated indicators of compromise, including IP addresses, have been shared with cybersecurity partners.

Although none of the cases described above give threat actors new capabilities in developing malware, they constitute proof that generative AI tools can make offensive operations more efficient for low-skilled actors, assisting them in all stages, from planning to execution.