What an incredible week we've already had at re:Invent 2023! For those who haven't checked them out yet, I encourage you to read our team's blog posts covering Monday Night Live with Peter DeSantis and Tuesday's keynote from Adam Selipsky.

Today we heard Dr. Swami Sivasubramanian's keynote address at re:Invent 2023. Dr. Sivasubramanian is the Vice President of Data and AI at AWS. Now more than ever, with the recent proliferation of generative AI services and offerings, this space is ripe for innovation and new service releases. Let's see what this year has in store!

Swami began his keynote by outlining how over 200 years of technological innovation and progress in the fields of mathematical computation, new architectures and algorithms, and new programming languages have led us to the current inflection point with generative AI. He challenged everyone to look at the opportunities that generative AI presents in terms of intelligence augmentation. By combining data with generative AI, together in a symbiotic relationship with human beings, we can accelerate new innovations and unleash our creativity.

Each of today's announcements can be viewed through the lens of one or more of the core elements of this symbiotic relationship between data, generative AI, and humans. To that end, Swami provided the following list of requirements for building a generative AI application:

- Access to a variety of foundation models

- A private environment to leverage your data

- Easy-to-use tools to build and deploy applications

- Purpose-built ML infrastructure

In this post, I'll be highlighting the main announcements from Swami's keynote, including:

- Support for Anthropic's Claude 2.1 foundation model in Amazon Bedrock

- Amazon Titan Multimodal Embeddings, Text models, and Image Generator now available in Amazon Bedrock

- Amazon SageMaker HyperPod

- Vector engine for Amazon OpenSearch Serverless

- Vector search for Amazon DocumentDB (with MongoDB compatibility) and Amazon MemoryDB for Redis

- Amazon Neptune Analytics

- Amazon OpenSearch Service zero-ETL integration with Amazon S3

- AWS Clean Rooms ML

- New AI capabilities in Amazon Redshift

- Amazon Q generative SQL in Amazon Redshift

- Amazon Q data integration in AWS Glue

- Model Evaluation on Amazon Bedrock

Let's begin by discussing some of the new foundation models now available in Amazon Bedrock!

Anthropic Claude 2.1

Just last week, Anthropic announced the release of its latest model, Claude 2.1. As of today, this model is available in Amazon Bedrock. It offers significant benefits over prior versions of Claude, including:

- A 200,000-token context window

- A 2x reduction in the model's hallucination rate

- A 25% reduction in the cost of prompts and completions on Bedrock

These improvements help enhance the reliability and trustworthiness of generative AI applications built on Bedrock. Swami also noted that access to a variety of foundation models (FMs) is vital and that "no one model will rule them all." To that end, Bedrock supports a broad range of FMs, including Meta's Llama 2 70B, which was also announced today.
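For readers who want to try Claude 2.1 right away, here's a minimal Python sketch of calling it through the Bedrock runtime API. It assumes the model ID `anthropic.claude-v2:1` and the Claude 2.x text-completions request format (a `Human:`/`Assistant:` prompt plus `max_tokens_to_sample`); check the Bedrock documentation for your region before relying on either. The `client` argument is expected to be a boto3 `bedrock-runtime` client, which keeps the helper testable without AWS credentials.

```python
import json

# Assumption: Bedrock model ID for Claude 2.1; verify it in the Bedrock console.
CLAUDE_21_MODEL_ID = "anthropic.claude-v2:1"


def build_claude_body(question: str, max_tokens: int = 300) -> str:
    """Build a Claude 2.x text-completions request body (Human/Assistant prompt format)."""
    prompt = f"\n\nHuman: {question}\n\nAssistant:"
    return json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.5,
    })


def ask_claude(client, question: str) -> str:
    """Send the request through a bedrock-runtime client and return the completion text."""
    response = client.invoke_model(
        modelId=CLAUDE_21_MODEL_ID,
        body=build_claude_body(question),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; Claude 2.x returns its text under "completion".
    payload = json.loads(response["body"].read())
    return payload["completion"]
```

Usage, assuming you've enabled Claude 2.1 access in the Bedrock console: create a client with `boto3.client("bedrock-runtime", region_name=...)` for a region where the model is offered, then call `ask_claude(client, "Summarize this contract in three bullet points.")`. Swapping `CLAUDE_21_MODEL_ID` for another model's ID also means switching to that model's own request format.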

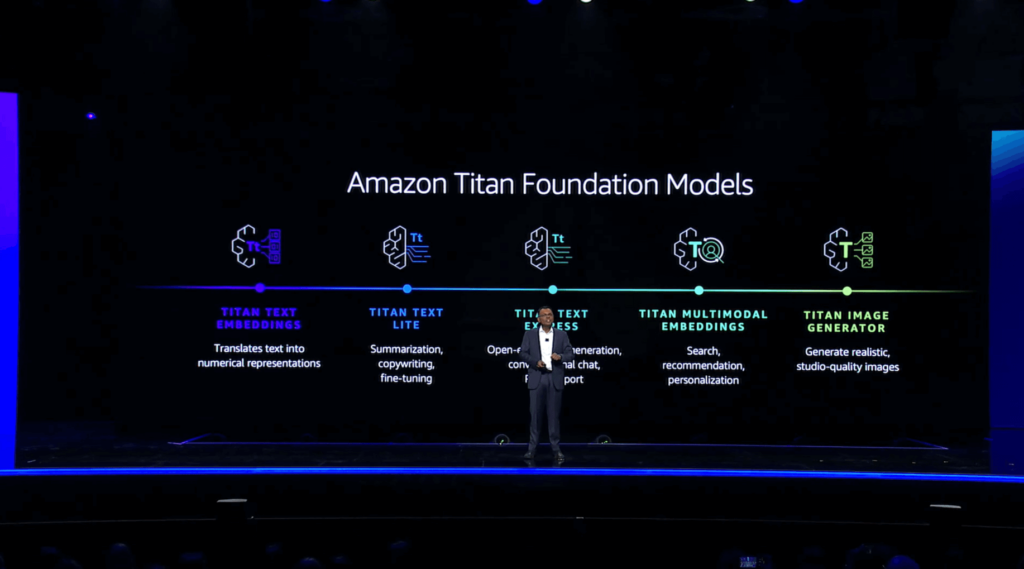

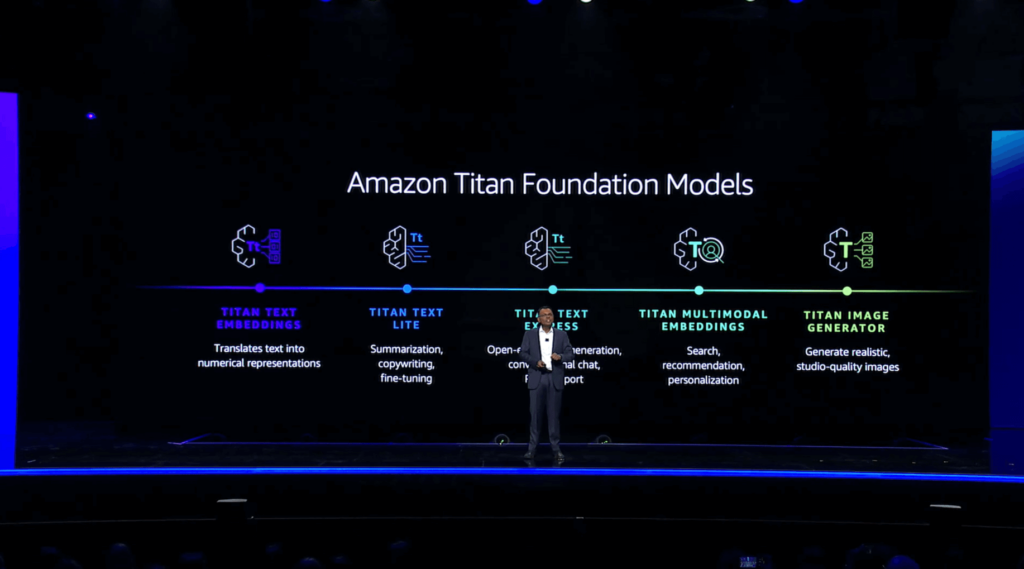

Amazon Titan Multimodal Embeddings, Text models, and Image Generator now available in Amazon Bedrock

Swami introduced the concept of vector embeddings, which are numerical representations of text and other content, such as images. These embeddings are critical when customizing and enhancing generative AI applications with features like multimodal search, which can involve a text-based query along with uploaded images, video, or audio. To that end, he introduced Amazon Titan Multimodal Embeddings, which can accept text, images, or a combination of both to provide search, recommendation, and personalization capabilities within generative AI applications. He then demonstrated an example application that uses multimodal search to help customers find the tools and resources needed to complete a home remodeling project, based on a user's text input and image-based design choices.
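To make the idea of embeddings concrete, here's a small sketch of the two halves of multimodal search: building a request body that combines text and an image, and ranking catalog items by cosine similarity to a query embedding. It assumes the Titan Multimodal Embeddings model ID `amazon.titan-embed-image-v1` and the `inputText`/`inputImage` request fields (with the vector returned under `embedding`); treat those as assumptions to verify. The three-dimensional vectors and product names are made up for illustration; real embeddings have far more dimensions.

```python
import base64
import json
import math

# Assumption: Bedrock model ID and request fields for Titan Multimodal Embeddings.
TITAN_MM_EMBED_MODEL_ID = "amazon.titan-embed-image-v1"


def build_embedding_body(text=None, image_bytes=None) -> str:
    """Request body that accepts text, an image, or both."""
    body = {}
    if text is not None:
        body["inputText"] = text
    if image_bytes is not None:
        # Images are sent as base64-encoded strings.
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    if not body:
        raise ValueError("provide text, an image, or both")
    return json.dumps(body)


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, catalog, k=2):
    """Rank catalog items (name -> embedding) by similarity to the query embedding."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in scored[:k]]
```

In a real application, both the query and the catalog embeddings would come from the same embedding model, so that "close in vector space" actually means "similar in content."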

He also announced the general availability of Amazon Titan Text Lite and Amazon Titan Text Express. Titan Text Lite is well suited to tasks like summarizing text and copywriting, while Titan Text Express can be used for open-ended text generation and conversational chat. Titan Text Express also supports retrieval-augmented generation, or RAG, which is useful when you want the model's responses to draw on your organization's own data without retraining the FM.
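RAG is easier to grasp with a toy example: retrieve the passages most relevant to a question, then put them in the prompt so the model answers from your data. The sketch below assumes the Titan Text Express model ID `amazon.titan-text-express-v1` and its `inputText`/`textGenerationConfig` request shape. The word-overlap retriever is a deliberately simple stand-in; a production RAG system would typically retrieve with embeddings and a vector store.

```python
import json
import re

# Assumption: Bedrock model ID and request shape for Titan Text Express.
TITAN_TEXT_EXPRESS_MODEL_ID = "amazon.titan-text-express-v1"


def tokenize(text):
    """Lowercase word set used by the toy retriever."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Toy retriever: rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_body(question, documents, k=2):
    """Augment the prompt with retrieved context, then wrap it in a Titan Text request."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    prompt = (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": 256,
            "temperature": 0.2,
            "topP": 0.9,
            "stopSequences": [],
        },
    })
```

The body would be sent with `invoke_model` on a `bedrock-runtime` client; Titan Text models return generated text under `results[0]["outputText"]`. The key design point is that the model itself is unchanged: only the prompt carries your data.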

He then introduced Titan Image Generator and showed how it can be used both to generate new images from scratch and to edit existing images based on natural language prompts. Titan Image Generator also supports the responsible use of AI by embedding an invisible watermark in every image it generates, indicating that the image was generated by AI.
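Here's a sketch of what a text-to-image request might look like. It assumes the model ID `amazon.titan-image-generator-v1`, the `TEXT_IMAGE` task type, and a response that returns base64-encoded images in an `images` list. Editing tasks such as inpainting use different task types and parameters that aren't shown here. Note that the invisible watermark is applied by the service, so nothing in the request controls it.

```python
import base64
import json

# Assumption: Bedrock model ID and request shape for Titan Image Generator.
TITAN_IMAGE_MODEL_ID = "amazon.titan-image-generator-v1"


def build_text_to_image_body(prompt, width=1024, height=1024, seed=0):
    """Request body for generating a single image from a natural language prompt."""
    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "width": width,
            "height": height,
            "cfgScale": 8.0,  # how closely the image should follow the prompt
            "seed": seed,     # fixed seed for repeatable results
        },
    })


def decode_images(response_payload):
    """Turn the base64 strings in the response's "images" list back into raw bytes."""
    return [base64.b64decode(img) for img in response_payload["images"]]
```

The decoded bytes can be written straight to a `.png` file.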

Amazon SageMaker HyperPod

Swami then moved on to a discussion of the complexities and challenges organizations face when training their own FMs. These include needing to break up large datasets into chunks that are then spread across the nodes of a training cluster. It's also necessary to save checkpoints along the way to protect against lost progress when a node fails, adding further delays to an already time- and resource-intensive process. SageMaker HyperPod lets you split your training data and model across resilient nodes, so you can train FMs for months at a time while taking full advantage of your cluster's compute and network infrastructure, reducing the time required to train models by up to 40%.
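HyperPod's own APIs aren't covered here; instead, this plain-Python sketch illustrates the two chores described above: sharding a dataset across nodes, and checkpointing so that a failed node can resume rather than start over. The "training" step just sums numbers, and `fail_at` is a hypothetical knob that simulates a node failure.

```python
import json
import os


def shard(dataset, num_nodes):
    """Split a dataset into num_nodes contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(dataset), num_nodes)
    shards, start = [], 0
    for node in range(num_nodes):
        end = start + size + (1 if node < extra else 0)
        shards.append(dataset[start:end])
        start = end
    return shards


def train(shard_data, ckpt_path, checkpoint_every=2, fail_at=None):
    """Toy training loop that sums samples and checkpoints progress to disk.

    If a checkpoint exists, training resumes from it; steps after the last
    checkpoint are recomputed, never double-counted.
    """
    state = {"step": 0, "total": 0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # resume from the last saved checkpoint
    for step in range(state["step"], len(shard_data)):
        if step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state["total"] += shard_data[step]
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            with open(ckpt_path, "w") as f:
                json.dump(state, f)
    return state["total"]
```

The trade-off is checkpoint frequency: saving more often means less recomputation after a failure but more time spent writing state, which is exactly the overhead that managed, resilient clusters aim to reduce.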

Vector engine for Amazon OpenSearch Serverless

Returning to the subject of vectors, Swami explained the need for a strong data foundation that is comprehensive, integrated, and governed when building generative AI applications. In support of this effort, AWS has developed a set of services for your organization's data foundation, including investments in storing vectors and data together in an integrated fashion. This lets you use familiar tools, avoid additional licensing and management requirements, provide a faster experience to end users, and reduce the need for data movement and synchronization. AWS is investing heavily in enabling vector search across all of its services. The first announcement related to this investment is the general availability of the vector engine for Amazon OpenSearch Serverless, which lets you store and query embeddings directly alongside your business data, enabling more relevant similarity searches while also providing a 20x improvement in queries per second, all without needing to maintain a separate underlying vector database.
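Storing embeddings "alongside your business data" comes down to an index whose mapping holds a vector field next to ordinary fields. The sketch below builds the request bodies for such an index and for a k-NN query, using OpenSearch's `knn_vector` field type and `knn` query. The field names `embedding`, `product_name`, and `price` are hypothetical, and the dimension must match whatever embedding model you use.

```python
def vector_index_body(dimension):
    """Index settings and mapping: k-NN enabled, embedding stored next to business fields."""
    return {
        "settings": {"index.knn": True},
        "mappings": {
            "properties": {
                # Hypothetical field names; dimension must match your embedding model.
                "embedding": {"type": "knn_vector", "dimension": dimension},
                "product_name": {"type": "text"},
                "price": {"type": "float"},
            }
        },
    }


def knn_query_body(query_vector, k=5):
    """Return the k documents whose embeddings are nearest to query_vector."""
    return {
        "size": k,
        "query": {"knn": {"embedding": {"vector": list(query_vector), "k": k}}},
        "_source": ["product_name", "price"],  # return business fields, not the raw vector
    }
```

With the opensearch-py client, these bodies would be passed to `client.indices.create(index=..., body=...)` and `client.search(index=..., body=...)`. Connecting to OpenSearch Serverless also requires SigV4 request signing for the `aoss` service.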

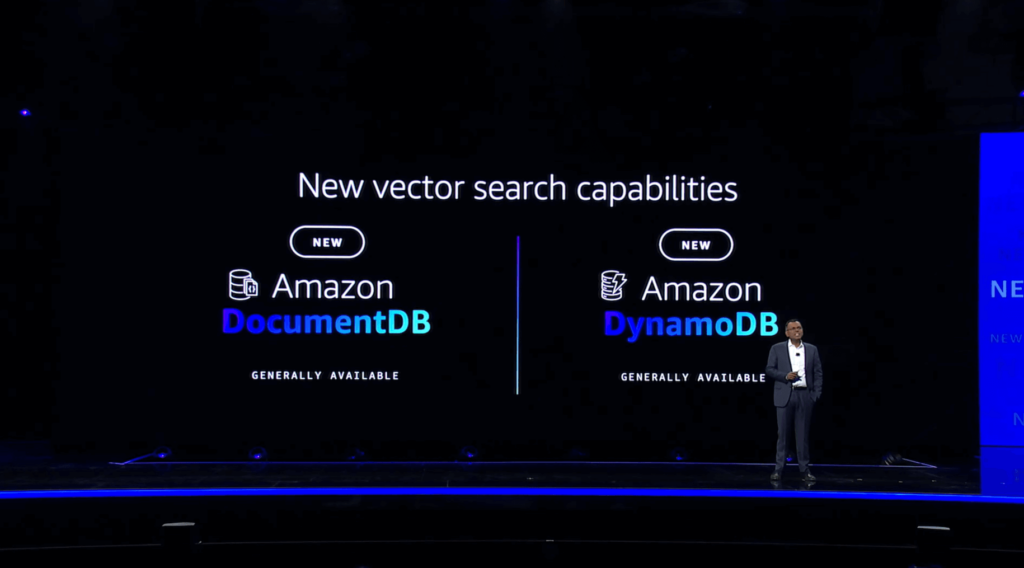

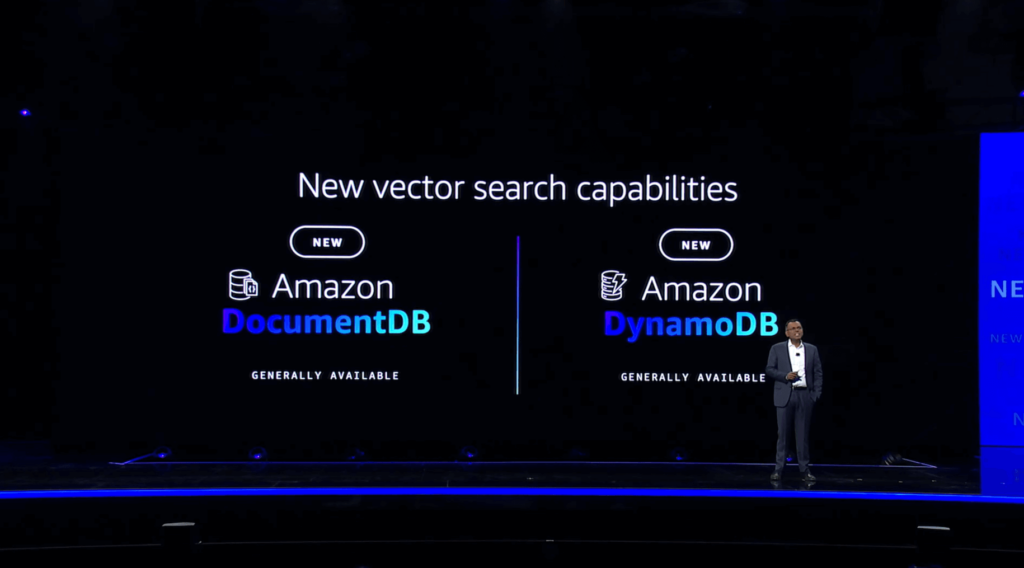

Vector search for Amazon DocumentDB (with MongoDB compatibility) and Amazon MemoryDB for Redis

Vector search capabilities were also announced for Amazon DocumentDB (with MongoDB compatibility) and Amazon MemoryDB for Redis, joining the existing vector search offering for DynamoDB. These vector search offerings all support both high throughput and high recall, with millisecond response times even at tens of thousands of concurrent queries per second. This level of performance is especially important for applications such as fraud detection or interactive chatbots, where any delay can be costly.
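"High recall" deserves a quick explanation. Vector indexes commonly use approximate nearest-neighbor search, trading a little accuracy for speed, and recall measures how many of the true nearest neighbors the approximate search actually returned. This generic sketch computes recall@k against a brute-force baseline; it isn't specific to DocumentDB or MemoryDB, and the vectors are made up.

```python
import math


def exact_top_k(query, vectors, k):
    """Brute-force nearest neighbours by Euclidean distance: the ground truth."""
    ranked = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return ranked[:k]


def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k neighbours that the approximate search returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)
```

For example, if the true two nearest neighbors are items 0 and 1 but an approximate index returns items 0 and 2, recall@2 is 0.5. Tuning an index usually means balancing this number against latency and throughput.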

Amazon Neptune Analytics

Staying within the realm of AWS database services, the next announcement centered on Amazon Neptune, a graph database that lets you represent relationships and connections between data entities. Today's announcement of the general availability of Amazon Neptune Analytics makes it faster and easier for data scientists to analyze large volumes of data stored in Neptune. Much like the other vector search capabilities mentioned above, Neptune Analytics enables faster vector search by storing your graph and vector data together. This lets you uncover insights in your graph data up to 80x faster than with existing AWS solutions, analyzing tens of billions of connections within seconds using built-in graph algorithms.
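Why keep graph and vector data together? Because a single query can then combine "who is connected to this entity" with "who looks similar to it." This plain-Python sketch (not Neptune Analytics' query language) runs a breadth-first search to collect nodes within a few hops, then ranks them by embedding similarity. The account names and two-dimensional embeddings are hypothetical, and embeddings are assumed to be non-zero.

```python
import math
from collections import deque


def neighbors_within(graph, start, max_hops):
    """Breadth-first search returning nodes reachable within max_hops (excluding start)."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # don't expand past the hop limit
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    return [n for n in seen if n != start]


def cosine(a, b):
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def similar_connected(graph, embeddings, start, max_hops=2, k=2):
    """Graph traversal plus vector similarity: rank nearby nodes by embedding similarity."""
    candidates = neighbors_within(graph, start, max_hops)
    candidates.sort(key=lambda n: cosine(embeddings[start], embeddings[n]), reverse=True)
    return candidates[:k]
```

In a fraud scenario, this kind of query could surface accounts that are both closely linked to a flagged account and behaviorally similar to it.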

Amazon OpenSearch Service zero-ETL integration with Amazon S3

In addition to enabling vector search across AWS database services, Swami outlined AWS's commitment to a "zero-ETL" future, one without complicated and expensive extract, transform, and load (ETL) pipeline development. AWS has already announced a number of new zero-ETL integrations this week, including Amazon DynamoDB zero-ETL integration with Amazon OpenSearch Service and various zero-ETL integrations with Amazon Redshift. Today, Swami announced another new zero-ETL integration, this time between Amazon OpenSearch Service and Amazon S3. Now available in preview, this integration lets you seamlessly search, analyze, and visualize operational data stored in S3, such as VPC Flow Logs and Elastic Load Balancing logs, as well as S3-based data lakes. You'll also be able to use OpenSearch's out-of-the-box dashboards and visualizations.

AWS Clean Rooms ML

Swami went on to discuss AWS Clean Rooms, which launched earlier this year and lets AWS customers securely collaborate with partners in "clean rooms" that don't require you to copy or share any of your underlying raw data. Today, AWS announced a preview release of AWS Clean Rooms ML, extending the clean rooms paradigm to collaboration on machine learning models through the use of AWS-managed lookalike models. This lets you train your own custom models and work with partners without sharing any of your own raw data. AWS also plans to release a healthcare model for use within Clean Rooms ML in the next few months.
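"Lookalike model" may be an unfamiliar term. The general idea is to take a seed audience (for example, your best customers) and find the candidates who most resemble it. This toy sketch scores candidates by cosine similarity to the seed group's average profile. It is not the algorithm Clean Rooms ML uses, and, unlike a clean room, it keeps all data in one process; the profile vectors and IDs are hypothetical.

```python
import math


def centroid(vectors):
    """Element-wise average of the seed profiles."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def lookalike_segment(seed_profiles, candidate_profiles, top_n):
    """Return the candidate IDs most similar to the seed audience's average profile."""
    center = centroid(list(seed_profiles.values()))
    ranked = sorted(
        candidate_profiles,
        key=lambda cid: cosine(center, candidate_profiles[cid]),
        reverse=True,
    )
    return ranked[:top_n]
```

In the clean-room setting, the point is that one party's seed data and the other party's candidate data never have to be handed over in raw form; the service performs the modeling on their behalf.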

New AI capabilities in Amazon Redshift

The next two announcements both involve Amazon Redshift, beginning with AI-driven scaling and optimizations in Amazon Redshift Serverless. These enhancements include intelligent auto-scaling for dynamic workloads, which scales proactively based on usage patterns such as the complexity and frequency of your queries and the size of your data sets. This lets you focus on deriving important insights from your data rather than worrying about performance tuning your data warehouse. You can set price-performance targets and take advantage of tailored, ML-driven optimizations that can do everything from adjusting your compute to modifying the underlying schema of your database, letting you optimize for cost, performance, or a balance of the two based on your requirements.

Amazon Q generative SQL in Amazon Redshift

The next Redshift announcement is definitely one of my favorites. Following yesterday's announcements about Amazon Q, Amazon's new generative AI-powered assistant that can be tailored to your specific business needs and data, today we learned about Amazon Q generative SQL in Amazon Redshift. Much like the "natural language to code" capabilities of Amazon Q unveiled yesterday with Amazon Q Code Transformation, Amazon Q generative SQL in Amazon Redshift lets you write natural language queries against data stored in Redshift. Amazon Q uses contextual information about your database, its schema, and its query history to generate the SQL needed to fulfill your request. You can even configure Amazon Q to use the query history of other users in your AWS account when generating SQL. You can also ask questions of your data, such as "what was the top selling item in October" or "show me the 5 highest rated products in our catalog," without needing to know your underlying table structure, schema, or any complicated SQL syntax.
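To show what "generating SQL from a question" produces, here's the kind of query an assistant might write for "what was the top selling item in October." Everything here is hypothetical: the `sales` table, its columns, and the assumption that "October" means October 2023. To keep the example runnable locally, SQLite stands in for Redshift; the query itself is plain SQL.

```python
import sqlite3

# Hypothetical schema; Amazon Q would work from your actual Redshift schema instead.
SCHEMA = """
CREATE TABLE sales (item TEXT, sale_date TEXT, quantity INTEGER);
"""

# SQL of the kind a natural-language-to-SQL assistant might generate for
# "what was the top selling item in October" (assuming October 2023).
TOP_ITEM_OCTOBER = """
SELECT item, SUM(quantity) AS units_sold
FROM sales
WHERE sale_date >= '2023-10-01' AND sale_date < '2023-11-01'
GROUP BY item
ORDER BY units_sold DESC
LIMIT 1;
"""


def top_item_in_october(rows):
    """Load sample rows into an in-memory database and run the generated query."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        return conn.execute(TOP_ITEM_OCTOBER).fetchone()
    finally:
        conn.close()
```

Notice how much schema knowledge the question hides: which table holds sales, which column holds dates, and that "top selling" means summing quantities. That's exactly the context Amazon Q draws from your schema and query history.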

Amazon Q data integration in AWS Glue

One more Amazon Q-related announcement involved an upcoming data integration in AWS Glue. This promising feature will simplify the process of setting up custom ETL pipelines in scenarios where AWS doesn't yet offer a zero-ETL integration, using agents for Amazon Bedrock to break down a natural language prompt into a series of tasks. For instance, you could ask Amazon Q to "write a Glue ETL job that reads data from S3, removes all null records, and loads the data into Redshift," and it will handle the rest for you automatically.
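The example prompt maps onto the three classic ETL steps. This plain-Python sketch spells them out, with CSV text standing in for objects read from S3 and a list standing in for a Redshift table. It is not the code Amazon Q generates (a real Glue job would typically run on Spark), and it assumes "null records" means rows with any empty field.

```python
import csv
import io


def extract(csv_text):
    """Extract: parse CSV rows (standing in for S3 objects); empty cells become None."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{k: (v if v != "" else None) for k, v in row.items()} for row in reader]


def transform(records):
    """Transform: drop any record that contains a null field."""
    return [r for r in records if all(v is not None for v in r.values())]


def load(records, target):
    """Load: append records to the target (standing in for a Redshift table)."""
    target.extend(records)
    return len(records)
```

Even this tiny version requires a decision the prompt leaves open (what counts as "null"), which is why reviewing generated jobs before running them remains good practice.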

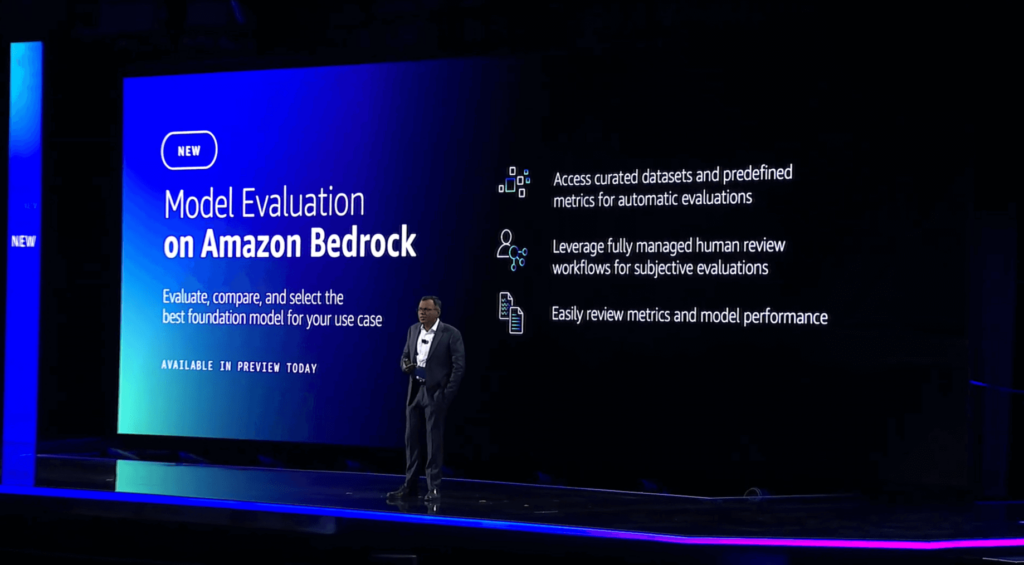

Model Evaluation on Amazon Bedrock

Swami's final announcement circled back to the variety of foundation models available in Amazon Bedrock and his earlier assertion that "no one model will rule them all." Because of this, model evaluation is an important practice that generative AI application developers should perform regularly. Today's preview release of Model Evaluation on Amazon Bedrock lets you evaluate, compare, and select the best FM for your use case. You can choose automatic evaluation based on metrics such as accuracy and toxicity, or human evaluation for qualities like style and appropriate "brand voice." Once an evaluation job is complete, Model Evaluation produces a report that summarizes metrics detailing the model's performance.
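As a feel for what an automatic "accuracy" metric involves, here's a generic exact-match accuracy sketch for comparing two models' answers against reference answers. Bedrock's automatic evaluation uses its own built-in metrics and datasets, so this isn't its scoring method; the normalization rules (lowercase, collapse whitespace, ignore a trailing period) are assumptions.

```python
def normalize(text):
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())


def exact_match_accuracy(predictions, references):
    """Share of predictions that match the reference answer after normalization."""
    if not references or len(predictions) != len(references):
        raise ValueError("need equal-length, non-empty predictions and references")
    matches = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return matches / len(references)
```

Running the same prompts through two candidate FMs and comparing their scores is the simplest form of the "evaluate, compare, and select" loop; subjective qualities like brand voice still need human reviewers.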

Swami concluded his keynote by addressing the human element of generative AI and reaffirming his belief that generative AI applications will accelerate human productivity. After all, it's humans who must provide the essential inputs that make generative AI applications useful and relevant. The symbiotic relationship between data, generative AI, and humans creates longevity, with collaboration strengthening each element over time. He concluded by asserting that humans can use data and generative AI to "create a flywheel of success." With the coming generative AI revolution, human soft skills such as creativity, ethics, and adaptability will be more important than ever. According to a World Economic Forum survey, nearly 75% of companies expect to adopt generative AI by 2027. While generative AI may eliminate the need for some roles, countless new roles and opportunities will no doubt emerge in the years to come.

I went into today's keynote full of excitement and anticipation, and as usual, Swami didn't disappoint. I've been thoroughly impressed by the breadth and depth of announcements and new feature releases already this week, and it's only Wednesday! Keep an eye on our blog for more exciting keynote announcements from re:Invent 2023!