Introduction

Let’s get this out of the way first: understanding effective streaming data architectures is hard, and understanding how to make use of streaming data for analytics is really hard. Kafka or Kinesis? Stream processing or an OLAP database? Open source or fully managed? This blog series will help demystify streaming data, and more specifically, give engineering leaders a guide for incorporating streaming data into their analytics pipelines.

Here’s what the series will cover:

- This post will cover the basics: streaming data formats, platforms, and use cases

- Part 2 will outline key differences between stream processing and real-time analytics

- Part 3 will offer recommendations for operationalizing streaming data, including a few sample architectures

If you’d like to skip around this post, take advantage of our table of contents (to the left of the text).

What Is Streaming Data?

We’re going to start with a basic question: what is streaming data? It’s a continuous and unbounded stream of information that’s generated at a high frequency and delivered to a system or application. An instructive example is clickstream data, which records a user’s interactions on a website. Another example would be sensor data collected in an industrial setting. The common thread across these examples is that a large amount of data is being generated in real time.

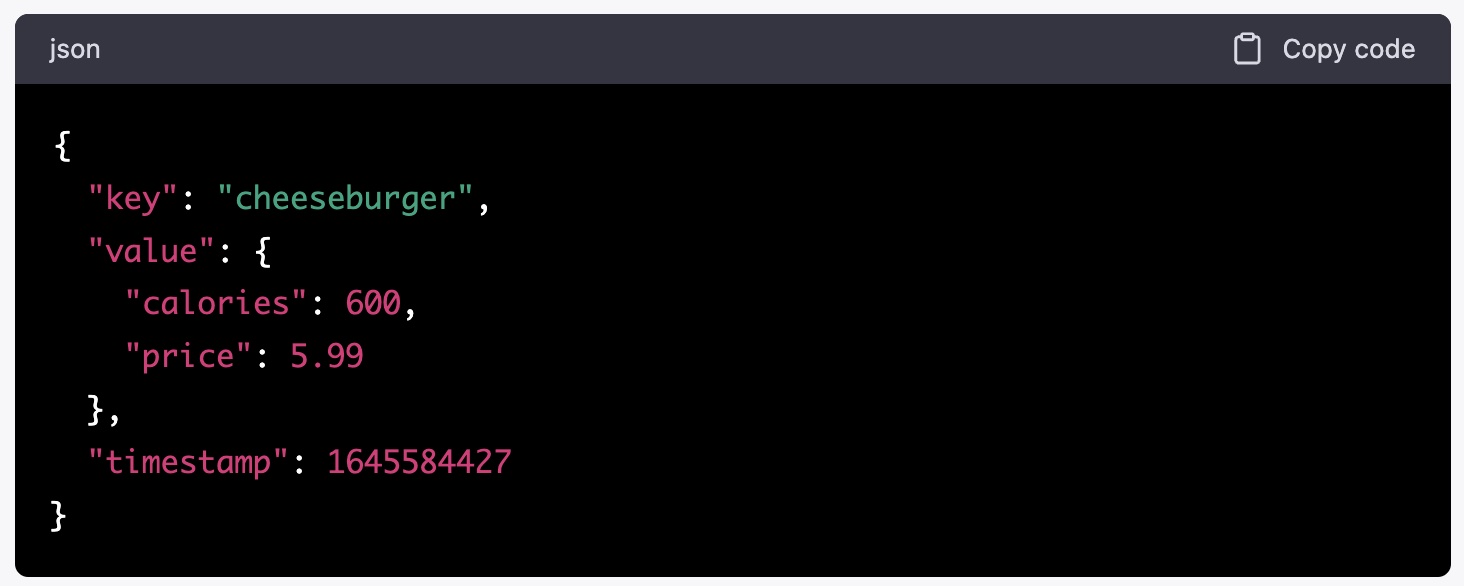

Typically, the “units” of data being streamed are considered events, which resemble a record in a database, with some key differences. First, event data is unstructured or semi-structured and stored in a nested format like JSON or Avro. Events typically include a key, a value (which can have additional nested elements), and a timestamp. Second, events are usually immutable (this will be a very important feature in this series!). Third, events on their own are not ideal for understanding the current state of a system. Event streams are great at updating systems with information like “A cheeseburger was sold” but are less suitable out of the box to answer “How many cheeseburgers were sold today?” Lastly, and perhaps most importantly, streaming data is unique because it’s high-velocity and high-volume, with an expectation that the data is available to be used in the database very quickly after the event has occurred.
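To make the event-versus-state distinction concrete, here is a minimal Python sketch. The `SaleEvent` type, its field names, and the sample values are hypothetical; they only mirror the key, value, and timestamp shape described above.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone


@dataclass(frozen=True)  # frozen=True makes each event immutable once created
class SaleEvent:
    key: str             # hypothetical: the item that was sold
    value: dict          # nested payload, e.g. quantity and price
    timestamp: datetime  # when the sale happened (UTC)


def count_sold_on(events, item, day):
    """Answer a state question ("how many sold on `day`?") by scanning events."""
    return sum(
        e.value["quantity"]
        for e in events
        if e.key == item and e.timestamp.date() == day
    )


events = [
    SaleEvent("cheeseburger", {"quantity": 1, "price": 9.60},
              datetime(2023, 5, 1, 12, 0, tzinfo=timezone.utc)),
    SaleEvent("cheeseburger", {"quantity": 2, "price": 9.60},
              datetime(2023, 5, 1, 12, 5, tzinfo=timezone.utc)),
    SaleEvent("fries", {"quantity": 1, "price": 2.20},
              datetime(2023, 5, 1, 12, 6, tzinfo=timezone.utc)),
    SaleEvent("cheeseburger", {"quantity": 1, "price": 9.60},
              datetime(2023, 5, 2, 9, 30, tzinfo=timezone.utc)),
]

total = count_sold_on(events, "cheeseburger", date(2023, 5, 1))
print(total)  # 3
```

Each event only says that a sale happened. The running total has to be derived by aggregating over the stream, which is the work a stream processor or analytics database does on your behalf.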

Streaming data has been around for decades. It gained traction in the early 1990s as telecommunication companies used it to manage the flow of voice and data traffic over their networks. Today, streaming data is everywhere. It has expanded to various industries and applications, including IoT sensor data, financial data, web analytics, gaming behavioral data, and many more use cases. This type of data has become an essential component of real-time analytics applications because reacting to events quickly can have major effects on a business’s revenue. Real-time analytics on streaming data can help organizations detect patterns and anomalies, identify revenue opportunities, and respond to changing conditions, all near instantly. However, streaming data poses a unique challenge for analytics because it requires specialized technologies and approaches. This series will walk you through options for operationalizing streaming data, but we’re going to start with the basics, including formats, platforms, and use cases.

Streaming Data Formats

There are a few very common general-purpose streaming data formats. They’re important to study and understand because each format has a few characteristics that make it better or worse for particular use cases. We’ll highlight these briefly and then move on to streaming platforms.

JSON (JavaScript Object Notation)

This is a lightweight, text-based format that’s easy to read (usually), making it a popular choice for data exchange. Here are a few characteristics of JSON:

- Readability: JSON is human-readable and easy to understand, making it easier to debug and troubleshoot.

- Wide support: JSON is widely supported by many programming languages and frameworks, making it a good choice for interoperability between different systems.

- Flexible schema: JSON allows for flexible schema design, which is useful for handling data that may change over time.

Sample use case: JSON is a good choice for APIs or other interfaces that need to handle diverse data types. For example, an e-commerce website may use JSON to exchange data between its website frontend and backend server, as well as with third-party vendors that provide shipping or payment services.

Example message:
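As an illustration, a single sale event might be serialized to JSON as in the sketch below. The field names and values are assumptions made for this example, not a prescribed schema.

```python
import json

# Hypothetical sale event; field names and values are illustrative only.
event = {
    "key": "cheeseburger",
    "value": {"quantity": 2, "price": 9.60, "store": {"id": 42, "city": "Austin"}},
    "timestamp": "2023-05-01T12:05:00Z",
}

message = json.dumps(event)    # plain text that any JSON parser can read
decoded = json.loads(message)  # round-trips back to the same structure

print(message)
print(decoded["value"]["store"]["city"])
```

Note that every message carries its own field names, which is what makes JSON easy to read and flexible, and also what makes it larger than the binary formats below.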

Avro

Avro is a compact binary format that’s designed for efficient serialization and deserialization of data. You can also format Avro messages in JSON. Here are a few characteristics of Avro:

- Efficient: Avro’s compact binary format can improve performance and reduce network bandwidth usage.

- Strong schema support: Avro has a well-defined schema that allows for type safety and strong data validation.

- Dynamic schema evolution: Avro’s schema can be updated without requiring a change to the client code.

Sample use case: Avro is a good choice for big data platforms that need to process and analyze large volumes of log data. Avro is useful for storing and transmitting that data efficiently and has strong schema support.

Example message:

\x16cheeseburger\x02\xdc\x07\x9a\x99\x19\x41\x12\xcd\xcc\x0c\x40\xce\xfa\x8e\xca\x1f
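To give a feel for why a schema-based binary format is compact, the sketch below hand-encodes a record using Avro’s binary encoding rules for primitives (zigzag varints for int and long, length-prefixed UTF-8 for strings, 4-byte little-endian floats) and compares its size with the JSON equivalent. It does not use the Avro library, and the record, its field order, and its values are assumptions for illustration.

```python
import json
import struct


def encode_long(n):
    """Avro int/long: zigzag-encode, then write as a base-128 varint."""
    z = (n << 1) ^ (n >> 63)  # zigzag keeps small negative numbers small
    out = bytearray()
    while True:
        byte = z & 0x7F
        z >>= 7
        if z:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string(s):
    """Avro string: byte length as a long, then the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


def encode_float(x):
    """Avro float: 4 bytes, little-endian IEEE 754."""
    return struct.pack("<f", x)


# Hypothetical record; the schema (field order and types) lives outside the message.
sale = {"product": "cheeseburger", "quantity": 2, "price": 9.6,
        "timestamp": 1682942700000}  # epoch milliseconds

# A record is just its field values, encoded in schema order.
avro_bytes = (
    encode_string(sale["product"])
    + encode_long(sale["quantity"])
    + encode_float(sale["price"])
    + encode_long(sale["timestamp"])
)
json_bytes = json.dumps(sale).encode("utf-8")

print(len(avro_bytes), len(json_bytes))
```

The binary result contains no field names, which is where much of the size saving comes from. The trade-off is that a reader needs the schema to make sense of the bytes.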

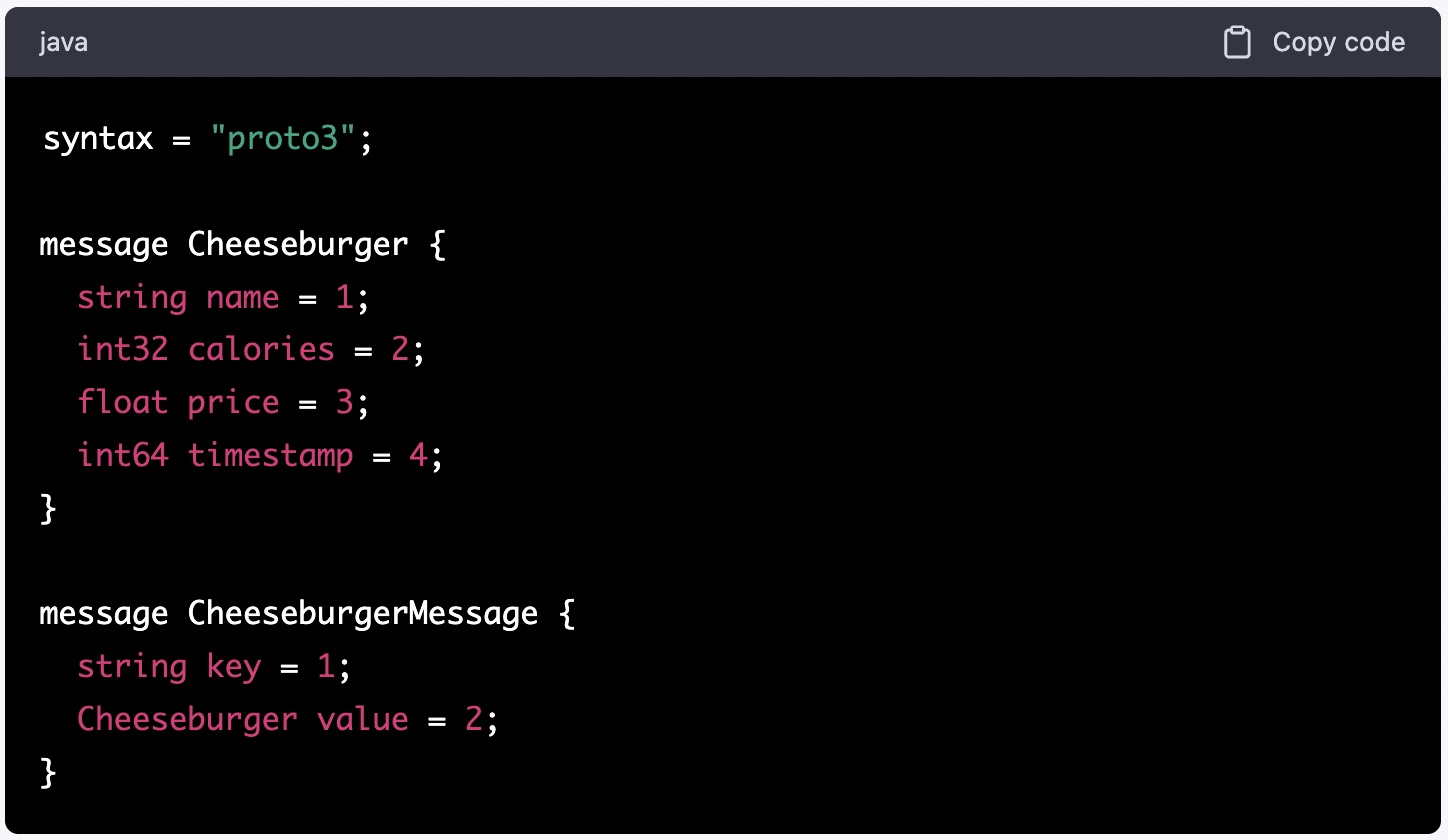

Protocol buffers (usually called protobuf)

Protobuf is a compact binary format that, like Avro, is designed for efficient serialization and deserialization of structured data. Some characteristics of protobuf include:

- Compact: protobuf is designed to be more compact than other serialization formats, which can further improve performance and reduce network bandwidth usage.

- Strong typing: protobuf has a well-defined schema that supports strong typing and data validation.

- Backward and forward compatibility: protobuf supports backward and forward compatibility, which means that a change to the schema will not break existing code that uses the data.

Sample use case: protobuf would work great for a real-time messaging system that needs to handle large volumes of messages. The format is well suited to efficiently encode and decode message data, while also benefiting from its compact size and strong typing support.

Example message:
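As a rough illustration of the wire format and of the compatibility point above, the sketch below hand-encodes a message with three numbered fields and then decodes it with a reader that only knows the first two. It follows the protobuf wire rules for varint and length-delimited fields but does not use the protobuf library; the field numbers, names, and values are hypothetical.

```python
def encode_varint(n):
    """Base-128 varint for a non-negative integer."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(buf, pos):
    """Return (value, next_position) for the varint starting at `pos`."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def string_field(number, text):
    data = text.encode("utf-8")
    # Tag = (field number << 3) | wire type; wire type 2 is length-delimited.
    return encode_varint((number << 3) | 2) + encode_varint(len(data)) + data


def int_field(number, value):
    # Wire type 0 is a varint.
    return encode_varint((number << 3) | 0) + encode_varint(value)


def decode_known(buf, known):
    """Decode fields listed in `known` ({number: name}); skip everything else."""
    fields, pos = {}, 0
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:
            value, pos = decode_varint(buf, pos)
        elif wire_type == 2:
            length, pos = decode_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length  # raw bytes
        else:
            raise ValueError("wire type not handled in this sketch")
        if number in known:
            fields[known[number]] = value
    return fields


# A newer writer adds field 3; an older reader only knows fields 1 and 2.
message_v2 = string_field(1, "cheeseburger") + int_field(2, 2) + int_field(3, 1)
decoded = decode_known(message_v2, {1: "product", 2: "quantity"})
print(decoded)
```

Because every field is tagged with its number and wire type, the older reader can step over field 3 without understanding it, which is the mechanism behind the compatibility guarantee.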

It’s probably clear that format choice should be use-case driven. Pay special attention to your expected data volume, processing, and compatibility with other systems. That said, when in doubt, JSON has the widest support and offers the most flexibility.

Streaming data platforms

Okay, we’ve covered the basics of streaming as well as common formats, but we need to talk about how to move this data around, process it, and put it to use. This is where streaming platforms come in. It’s possible to go very deep on streaming platforms. This blog will not cover platforms in depth, but will instead offer popular options, cover the high-level differences between popular platforms, and provide a few important considerations for choosing a platform for your use case.

Apache Kafka

Kafka, for short, is an open-source distributed streaming platform (yes, that is a mouthful) that enables real-time processing of large volumes of data. This is the single most popular streaming platform. It provides all the basic features you’d expect, like data streaming, storage, and processing, and is widely used for building real-time data pipelines and messaging systems. It supports various data processing models such as stream and batch processing (both covered in part 2 of this series), and complex event processing. Long story short, Kafka is extremely powerful and widely used, with a large community to tap for best practices and support. It also offers a variety of deployment options. A few noteworthy points:

- Self-managed Kafka can be deployed on-premises or in the cloud. It’s open source, so it’s “free”, but be forewarned that its complexity will require significant in-house expertise.

- Kafka can be deployed as a managed service via Confluent Cloud or Amazon Managed Streaming for Apache Kafka (MSK). Both of these options simplify deployment and scaling significantly. You can get set up in just a few clicks.

- Kafka doesn’t have many built-in ways to accomplish analytics on event data.

AWS Kinesis

Amazon Kinesis is a fully managed, real-time data streaming service provided by AWS. It’s designed to collect, process, and analyze large volumes of streaming data in real time, just like Kafka. There are a few notable differences between Kafka and Kinesis, but the biggest is that Kinesis is a proprietary and fully managed service provided by Amazon Web Services (AWS). The benefit of being proprietary is that Kinesis can easily make streaming data available for downstream processing and storage in services such as Amazon S3, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service). It’s also seamlessly integrated with other AWS services like AWS Lambda, AWS Glue, and Amazon SageMaker, making it easy to orchestrate end-to-end streaming data processing pipelines without having to manage the underlying infrastructure. There are some caveats to be aware of that will matter for some use cases:

- While Kafka has clients for a variety of programming languages including Java, Python, and C++, Kinesis’s consumer library (the Kinesis Client Library) is primarily built for Java and other JVM languages.

- Kafka can be configured to retain data indefinitely, while Kinesis retains data for 24 hours by default and for at most 365 days with extended retention.

- Kinesis is not designed for a large number of consumers.

Azure Event Hubs and Azure Service Bus

Both of these fully managed services from Microsoft offer streaming data built on Microsoft Azure, but they have important differences in design and functionality. There’s enough content here for its own blog post, but we’ll cover the high-level differences briefly.

Azure Event Hubs is a highly scalable data streaming platform designed for collecting, transforming, and analyzing large volumes of data in real time. It’s ideal for building data pipelines that ingest data from a wide range of sources, such as IoT devices, clickstreams, social media feeds, and more. Event Hubs is optimized for high-throughput, low-latency data streaming scenarios and can process millions of events per second.

Azure Service Bus is a messaging service that provides reliable message queuing and publish-subscribe messaging patterns. It’s designed for decoupling application components and enabling asynchronous communication between them. Service Bus supports a variety of messaging patterns and is optimized for reliable message delivery. It can handle high-throughput scenarios, but its focus is on messaging, which doesn’t typically require real-time processing or stream processing.

Similar to Amazon Kinesis’ integration with other AWS services, Azure Event Hubs or Azure Service Bus can be excellent choices if your software is built on Microsoft Azure.

Use cases for real-time analytics on streaming data

We’ve covered the basics of streaming data formats and delivery platforms, but this series is primarily about how to leverage streaming data for real-time analytics. We’ll now shine some light on how leading organizations are putting streaming data to use in the real world.

Personalization

Organizations are using streaming data to feed real-time personalization engines for eCommerce, adtech, media, and more. Imagine a shopping platform that infers a user is interested in books, then history books, and then history books about Darwin’s trip to the Galapagos. Because streaming data platforms are perfectly suited to capture and transport large amounts of data at low latency, companies are beginning to use that data to derive intent and make predictions about what users might like to see next. Rockset has seen quite a bit of interest in this use case, and companies are driving significant incremental revenue by leveraging streaming data to personalize user experiences.

Anomaly Detection

Fraud and anomaly detection are among the more popular use cases for real-time analytics on streaming data. Organizations are capturing user behavior via event streams, enriching these streams with historical data, and making use of online feature stores to detect anomalous or fraudulent user behavior. Unsurprisingly, this use case is becoming quite common at fintech and payments companies looking to bring a real-time edge to alerting and monitoring.
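One simple way to flag anomalies in a stream, shown here only as a sketch, is to keep a rolling window of recent values and flag any new value whose z-score exceeds a threshold. The class name, window size, threshold, and payment amounts are all made up for illustration; the production systems described above typically rely on much richer features.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values that sit far from the mean of a recent window."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)  # oldest values fall off automatically
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if `value` looks anomalous, then record it."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
amounts = [20.0, 22.5, 19.0, 21.0, 20.5, 23.0, 950.0, 21.5]  # hypothetical payments
flags = [detector.observe(a) for a in amounts]
print(flags)
```

Only the 950.0 payment is flagged here. The detector processes one event at a time and keeps a bounded amount of state, which is what lets this style of check run continuously against a stream.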

Gaming

Online games typically generate massive amounts of streaming data, much of which is now being used for real-time analytics. One can leverage streaming data to tune matchmaking heuristics, ensuring players are matched at an appropriate skill level. Many studios are able to boost player engagement and retention with live metrics and leaderboards. Finally, event streams can be used to help identify anomalous behavior associated with cheating.

Logistics

Another massive consumer of streaming data is the logistics industry. Streaming data with an appropriate real-time analytics stack helps leading logistics orgs manage and monitor the health of fleets, receive alerts about the health of equipment, and recommend preventive maintenance to keep fleets up and running. Additionally, advanced uses of streaming data include optimizing delivery routes with real-time data from GPS devices, orders, and delivery schedules.

Domain-driven design, data mesh, and messaging services

Streaming data can be used to implement event-driven architectures that align with domain-driven design principles. Instead of polling for updates, streaming data provides a continuous flow of events that can be consumed by microservices. Events can represent changes in the state of the system, user actions, or other domain-specific information. By modeling the domain in terms of events, you can achieve loose coupling, scalability, and flexibility.
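A toy in-process event bus, sketched below, shows the loose coupling this describes: the publisher knows nothing about its consumers. The `EventBus` class, the topic name, and the two handlers are hypothetical stand-ins for a streaming platform and the microservices that consume from it.

```python
from collections import defaultdict


class EventBus:
    """In-memory stand-in for a streaming platform's topics."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Push the event to every subscriber; nobody has to poll for updates.
        for handler in self._subscribers[topic]:
            handler(event)


inventory = {"cheeseburger": 10}
receipts = []


def update_inventory(event):  # hypothetical "inventory service"
    inventory[event["product"]] -= event["quantity"]


def record_receipt(event):    # hypothetical "billing service"
    receipts.append(f'{event["quantity"]} x {event["product"]}')


bus = EventBus()
bus.subscribe("orders", update_inventory)
bus.subscribe("orders", record_receipt)
bus.publish("orders", {"product": "cheeseburger", "quantity": 2})

print(inventory, receipts)
```

Adding a third consumer means one more `subscribe` call, with no change to the code that publishes orders.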

Log aggregation

Streaming data can be used to aggregate log data in real time from systems throughout an organization. Logs can be streamed to a central platform (usually an OLAP database; more on this in parts 2 and 3), where they can be processed and analyzed for alerting, troubleshooting, monitoring, or other purposes.

Conclusion

We’ve covered a lot in this blog, from formats to platforms to use cases, but there’s a ton more to learn about. There are some interesting and meaningful differences between real-time analytics on streaming data, stream processing, and streaming databases, which is exactly what post 2 in this series will focus on. In the meantime, if you’re looking to get started with real-time analytics on streaming data, Rockset has built-in connectors for Kafka, Confluent Cloud, MSK, and more. Start your free trial today, with $300 in credits, no credit card required.