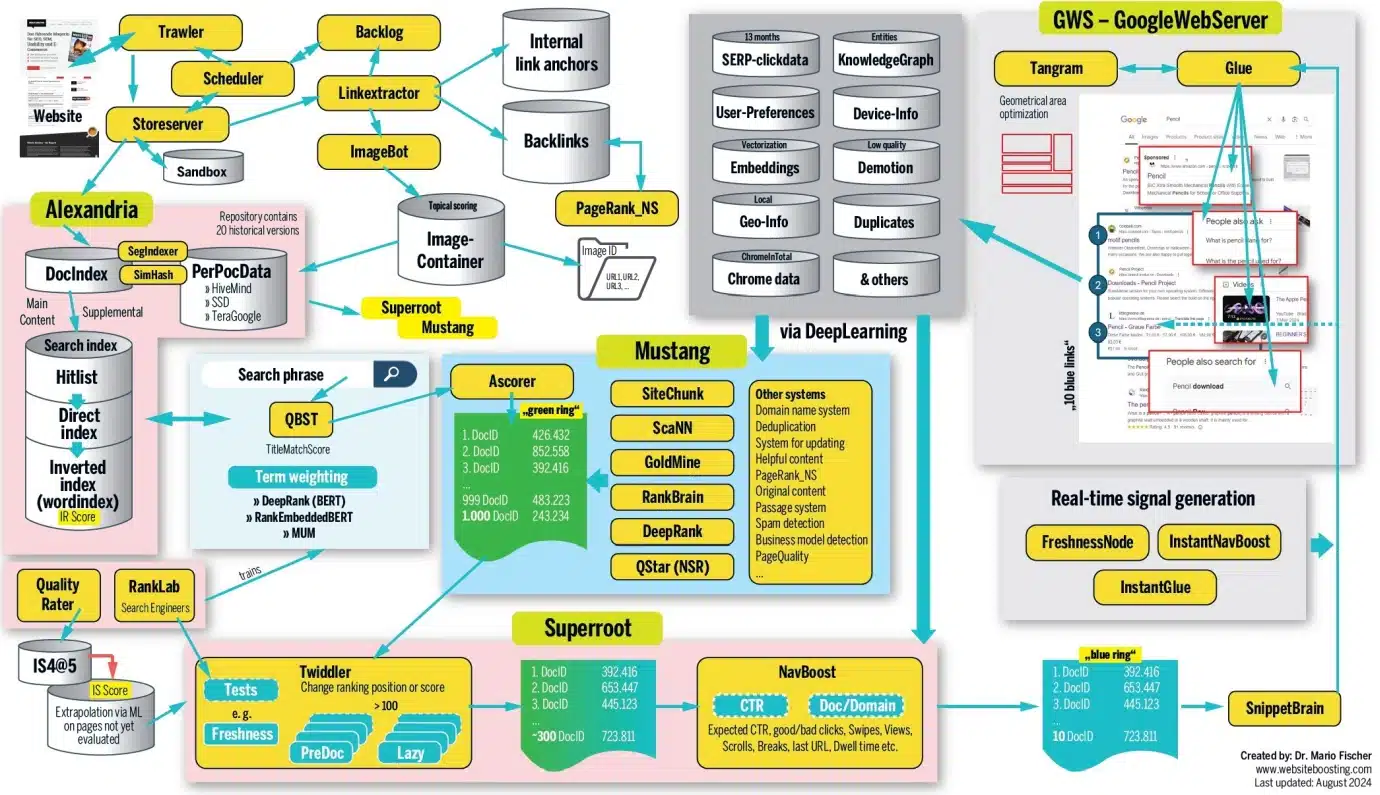

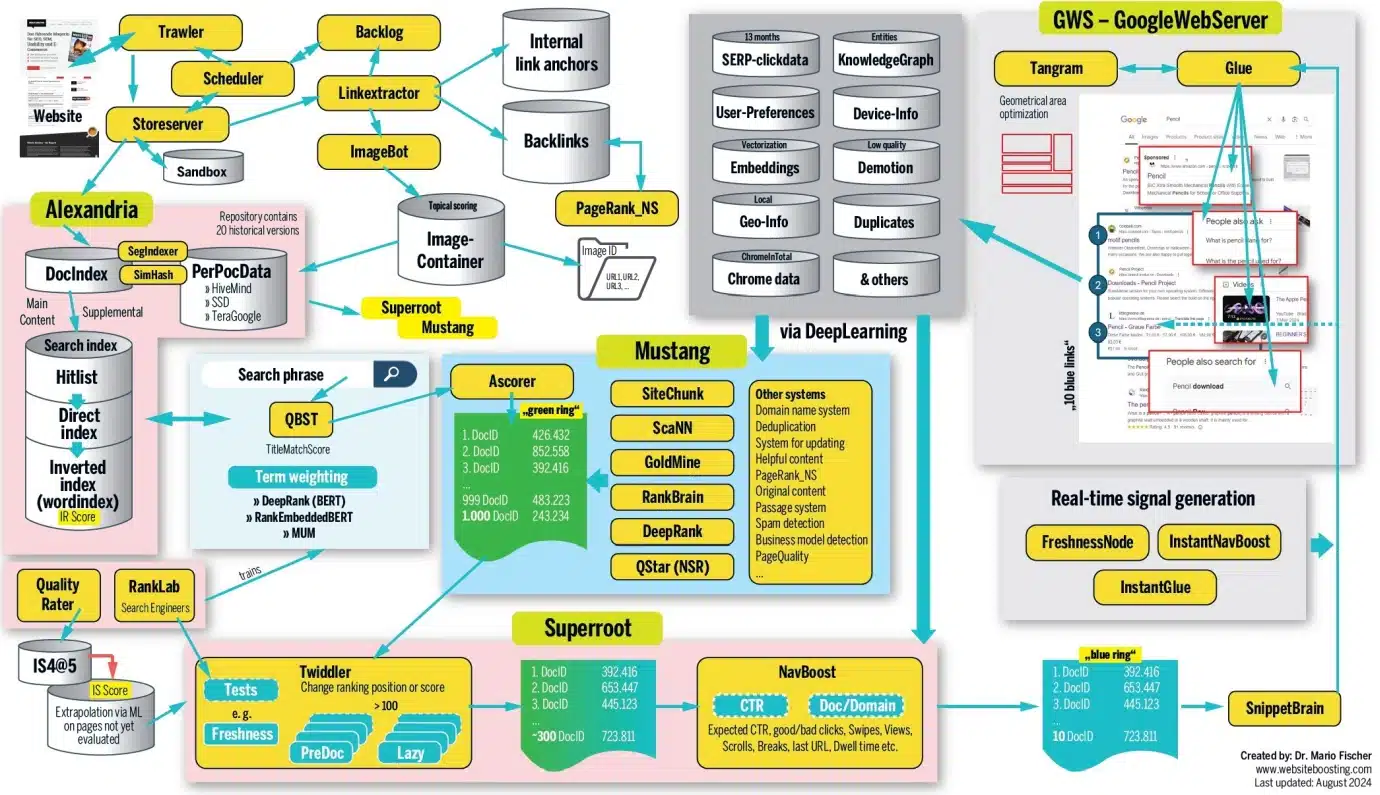

It should be clear to everyone that the Google documentation leak and the public documents from the antitrust hearings do not really tell us exactly how the rankings work.

The structure of organic search results is now so complex, not least because of the use of machine learning, that even the Google employees who work on the ranking algorithms say they can no longer explain why a result ranks at position one or two. We do not know the weighting of the many signals or their exact interplay.

Nevertheless, it is important to familiarize yourself with the structure of the search engine to understand why well-optimized pages do not rank or, conversely, why seemingly short and non-optimized results sometimes appear at the top of the rankings. The most important point is that you need to broaden your view of what really matters.

All the available information clearly shows that. Anyone who is even marginally involved with ranking should incorporate these findings into their own mindset. You will see your websites from a completely different perspective and incorporate additional metrics into your analyses, planning and decisions.

To be honest, it is extremely difficult to draw a truly valid picture of the systems' structure. The information on the web varies considerably in its interpretation and sometimes uses different terms, although the same thing is meant.

An example: The system responsible for building a SERP (search engine results page) that optimizes the use of space is called Tangram. In some Google documents, however, it is also referred to as Tetris, which is probably a reference to the famous game.

Over weeks of detailed work, I have viewed, analyzed, structured, discarded and restructured almost 100 documents many times.

This article is not intended to be exhaustive or strictly accurate. It represents my best effort (i.e., “to the best of my knowledge and belief”) and a bit of Inspector Columbo's investigative spirit. The result is what you see here.

A new document waiting for Googlebot's visit

When you publish a new web page, it is not indexed immediately. Google must first become aware of the URL. This usually happens either via an updated sitemap or via a link to it placed on an already-known URL.

Frequently visited pages, such as the homepage, naturally bring this link information to Google's attention more quickly.

The Trawler system retrieves new content and keeps track of when to revisit the URL to check for updates. This is managed by a component called the scheduler. The store server decides whether the URL is forwarded or whether it is placed in the sandbox.

Google denies the existence of this box, but the recent leaks suggest that (suspected) spam sites and low-value sites are placed there. It should be mentioned that Google apparently forwards some of the spam, probably for further analysis to train its algorithms.

Our fictitious document passes this barrier. Outgoing links from our document are extracted and sorted into internal and external links. Other systems primarily use this information for link analysis and PageRank calculation. (More on this later.)

Links to images are passed to the ImageBot, which retrieves them, sometimes with a significant delay, and places them (together with identical or similar images) in an image container. Trawler apparently uses its own PageRank to adjust the crawl frequency. If a website has more traffic, this crawl frequency increases (ClientTrafficFraction).

Alexandria: The great library

Google's indexing system, called Alexandria, assigns a unique DocID to each piece of content. If the content is already known, as in the case of duplicates, no new ID is created; instead, the URL is linked to the existing DocID.

Important: Google differentiates between a URL and a document. A document can be made up of several URLs that contain similar content, including different language versions, if they are properly marked up. URLs from other domains are also sorted here. All the signals from these URLs are applied via the common DocID.

For duplicate content, Google selects the canonical version, which is the one that appears in search rankings. This also explains why other URLs may sometimes rank similarly; the determination of the “original” (canonical) URL can change over time.
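To make the “many URLs, one DocID” idea concrete, here is a minimal sketch. It only catches exact duplicates (via a normalized SHA-256 fingerprint), and the names `ToyIndex`, `DocRecord` and the “shortest URL wins” canonical rule are hypothetical illustrations; Google's real canonicalization logic is not public.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class DocRecord:
    doc_id: int
    urls: list = field(default_factory=list)
    canonical: str = ""


class ToyIndex:
    """Hypothetical sketch: each distinct content gets one DocID; duplicate URLs attach to it."""

    def __init__(self):
        self._by_fingerprint = {}  # content fingerprint -> DocRecord
        self._by_id = {}           # DocID -> DocRecord
        self._next_id = 1

    @staticmethod
    def fingerprint(content):
        # Exact-duplicate fingerprint: case- and whitespace-normalized SHA-256.
        normalized = " ".join(content.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def add(self, url, content):
        fp = self.fingerprint(content)
        record = self._by_fingerprint.get(fp)
        if record is None:
            # Unknown content: create a new DocID.
            record = DocRecord(doc_id=self._next_id)
            self._next_id += 1
            self._by_fingerprint[fp] = record
            self._by_id[record.doc_id] = record
        if url not in record.urls:
            record.urls.append(url)
        # Hypothetical canonical rule (shortest URL); the real selection can change over time.
        record.canonical = min(record.urls, key=len)
        return record.doc_id

    def get(self, doc_id):
        return self._by_id[doc_id]
```

Because the canonical is recomputed every time a URL joins the record, the sketch also mirrors the point above that the chosen “original” can shift as new URLs appear.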

As there is only this one version of our document on the web, it is given its own DocID.

Individual segments of our page are searched for relevant keyword phrases and pushed into the search index. There, the “hit list” (all the important words on the page) is first sent to the direct index, which summarizes the keywords that occur several times per page.

Now an important step takes place. The individual keyword phrases are integrated into the word catalog of the inverted index (word index). The word pencil and all important documents containing this word are already listed there.

In simple terms, as our document prominently contains the word pencil several times, it is now listed in the word index with its DocID under the entry “pencil.”

The DocID is assigned an algorithmically calculated IR (information retrieval) score for pencil, which is later used for inclusion in the posting list. In our document, for example, the word pencil has been marked in bold in the text and is contained in the H1 (stored in AvrTermWeight). These and other signals increase the IR score.
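The following minimal sketch shows how an inverted index can turn a page's hit list into per-term scores and a ranked posting list. The style weights in `STYLE_WEIGHTS` are invented for illustration; the actual AvrTermWeight formula and Google's IR scoring are not public.

```python
from collections import defaultdict

# Hypothetical per-hit weights: a word in the H1 or in bold counts more than plain text.
STYLE_WEIGHTS = {"plain": 1.0, "bold": 1.5, "h1": 3.0}


def build_inverted_index(docs):
    """docs maps DocID -> hit list of (term, style) pairs.

    Returns term -> {DocID: toy IR score}, where the toy score is the sum of
    style weights over every occurrence of the term in that document.
    """
    index = defaultdict(lambda: defaultdict(float))
    for doc_id, hits in docs.items():
        for term, style in hits:
            index[term.lower()][doc_id] += STYLE_WEIGHTS[style]
    return index


def posting_list(index, term, k=1000):
    """Top-k DocIDs for a term, highest toy IR score first (ties broken by DocID)."""
    scores = index.get(term.lower(), {})
    return sorted(scores, key=lambda d: (-scores[d], d))[:k]
```

With `k=1000`, `posting_list` corresponds to the candidate set the Ascorer pulls for a query, described in the next section.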

Google moves documents considered important to the so-called HiveMind, i.e., the main memory. For long-term storage of data that does not require fast access, Google uses both fast SSDs and conventional HDDs (referred to as TeraGoogle). Documents and signals are stored in the main memory.

Notably, experts estimate that before the recent AI boom, about half of the world's web servers were housed at Google. A vast network of interconnected clusters allows millions of main memory units to work together. A Google engineer once noted at a conference that, in theory, Google's main memory could store the entire web.

It is interesting to note that links, including backlinks, stored in HiveMind seem to carry significantly more weight. For example, links from important documents are given much greater importance, whereas links from URLs in TeraGoogle (HDD) may be weighted less or possibly not considered at all.

- Tip: Provide your documents with plausible and consistent date values. BylineDate (date in the source code), syntaticDate (date extracted from the URL and/or title) and semanticDate (taken from the readable content) are used, among others.

- Faking freshness by changing the date can certainly lead to downranking (demotion). The lastSignificantUpdate attribute records when the last significant change was made to a document. Fixing minor details or typos does not affect this counter.

Additional information and signals for each DocID are stored dynamically in the repository (PerDocData). Many systems access this later when it comes to fine-tuning relevance. It is useful to know that the last 20 versions of a document are stored there (via CrawlerChangerateURLHistory).

Google has the ability to evaluate changes over time. If you want to completely change the content or topic of a document, you would theoretically have to create 20 intermediate versions to override the old content signals. This is why reviving an expired domain (a domain that was previously active but has since been abandoned or sold, perhaps due to insolvency) does not offer any ranking advantage.
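The arithmetic behind the “20 intermediate versions” remark can be sketched with a bounded history. The only detail taken from the leak is the history size of 20; the `VersionHistory` class and the idea of measuring a topic's share of stored versions are hypothetical simplifications.

```python
from collections import deque

HISTORY_SIZE = 20  # the leak suggests the last 20 versions are kept


class VersionHistory:
    """Hypothetical sketch: a fixed-size window over a document's past versions."""

    def __init__(self, size=HISTORY_SIZE):
        # deque with maxlen silently drops the oldest version when full.
        self.versions = deque(maxlen=size)

    def record(self, topic):
        self.versions.append(topic)

    def topic_share(self, topic):
        """Fraction of stored versions that are about the given topic."""
        if not self.versions:
            return 0.0
        return self.versions.count(topic) / len(self.versions)
```

After 20 versions about one topic, 10 new versions only bring the new topic to half of the window; the old topic vanishes from the stored history only after the 20th new version.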

If a domain's Admin-C changes and its thematic content changes at the same time, a machine can easily recognize this at this point. Google then resets all signals to zero, and the supposedly valuable old domain no longer offers any advantage over a completely new domain.

QBST: Someone is searching for ‘pencil’

When someone enters “pencil” as a search term in Google, QBST begins its work. The search phrase is analyzed, and if it contains several words, the relevant ones are sent to the word index for retrieval.

The process of term weighting is quite complex, involving systems such as RankBrain, DeepRank (formerly BERT) and RankEmbeddedBERT. The relevant terms, such as “pencil,” are then passed on to the Ascorer for further processing.

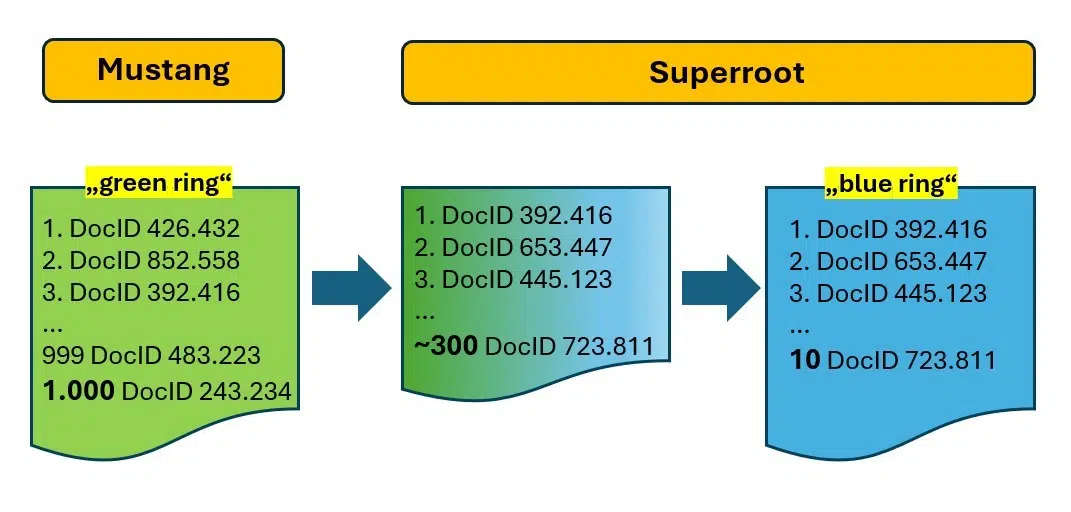

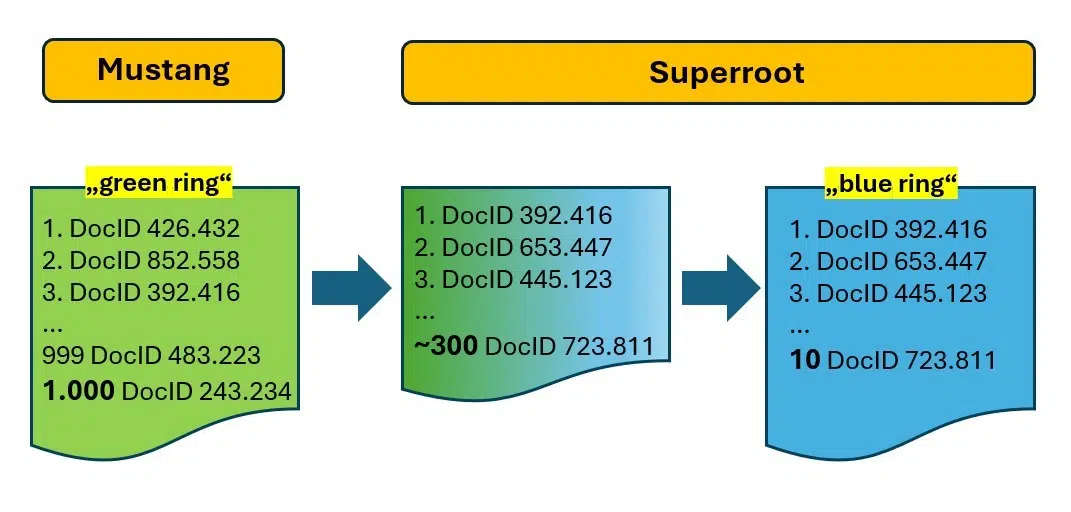

Ascorer: The ‘green ring’ is created

The Ascorer retrieves the top 1,000 DocIDs for “pencil” from the inverted index, ranked by IR score. According to internal documents, this list is called a “green ring.” Within the industry, it is known as a posting list.

The Ascorer is part of a ranking system called Mustang, where further filtering takes place through methods such as deduplication using SimHash (a type of document fingerprint), passage analysis, systems for recognizing original and helpful content, etc. The goal is to refine the 1,000 candidates down to the “10 blue links” or the “blue ring.”
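SimHash is a published technique, so its core can be sketched directly. This is a textbook version with whitespace tokens and unit weights, using the first 8 bytes of MD5 as the 64-bit token hash. The 3-bit near-duplicate threshold and the `dedupe` helper are assumptions for illustration, not Mustang's actual settings.

```python
import hashlib


def _token_hash(token):
    # 64-bit token hash from the first 8 bytes of MD5 (any stable hash works).
    return int.from_bytes(hashlib.md5(token.encode("utf-8")).digest()[:8], "big")


def simhash(text, bits=64):
    """Classic SimHash: each token votes +1/-1 on every bit; the sign of the total sets the bit."""
    weights = [0] * bits
    for token in text.lower().split():
        h = _token_hash(token)
        for i in range(bits):
            weights[i] += 1 if (h >> i) & 1 else -1
    fingerprint = 0
    for i, w in enumerate(weights):
        if w > 0:
            fingerprint |= 1 << i
    return fingerprint


def hamming(a, b):
    return bin(a ^ b).count("1")


def dedupe(candidates, max_distance=3):
    """candidates: (doc_id, text) in rank order; keeps the first DocID of each near-duplicate group."""
    kept, prints = [], []
    for doc_id, text in candidates:
        fp = simhash(text)
        if all(hamming(fp, p) > max_distance for p in prints):
            kept.append(doc_id)
            prints.append(fp)
    return kept
```

Because the fingerprint only depends on which tokens appear and how often relative to each other, reordered or repeated copies of a text collapse to the same fingerprint, which is exactly what near-duplicate detection on a posting list needs.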

Our document about pencils is on the posting list, currently ranked at 132. Without additional systems, this would be its final position.

Superroot: Turn 1,000 into 10!

The Superroot system is responsible for re-ranking, carrying out the precision work of reducing the “green ring” (1,000 DocIDs) to the “blue ring” with only 10 results.

Twiddlers and NavBoost perform this task. Other systems are probably involved here as well, but their exact details are unclear because the available information is vague.

- Google Caffeine no longer exists in this form. Only the name has remained.

- Google now works with numerous microservices that communicate with each other and generate attributes for documents. These attributes are used as signals by a wide variety of ranking and re-ranking systems, and the neural networks are trained on them to make predictions.

Filter after filter: The Twiddlers

Various documents indicate that several hundred Twiddler systems are in use. Think of a Twiddler as a plug-in, similar to those in WordPress.

Each Twiddler has its own specific filter objective. They are designed this way because they are relatively easy to create and do not require changes to the complex ranking algorithms in Ascorer.

Modifying these algorithms is difficult and would involve extensive planning and programming because of potential side effects. Twiddlers, in contrast, operate in parallel or sequentially and are unaware of what other Twiddlers do.

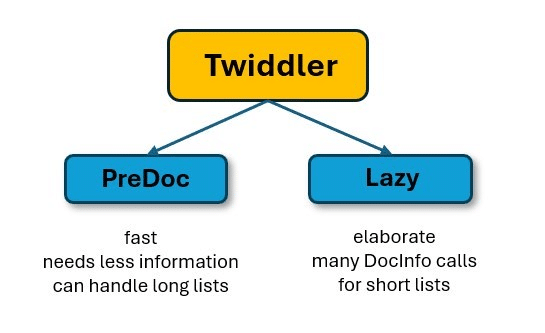

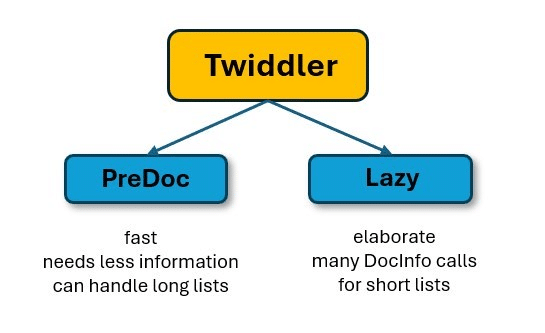

There are basically two types of Twiddlers.

- PreDoc Twiddlers can work with the entire set of several hundred DocIDs because they require little or no additional information.

- In contrast, Twiddlers of the “Lazy” type require more information, for example, from the PerDocData database. This takes correspondingly longer and is more complex.

For this reason, the PreDoc Twiddlers first reduce the posting list to significantly fewer entries before the slower filters start. This saves an enormous amount of computing capacity and time.
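The cost-saving order of PreDoc and Lazy Twiddlers can be sketched as a two-stage filter. The function names, the `keep` cut-off and `fetch_perdoc` (standing in for a PerDocData lookup) are hypothetical; the point is only that the expensive lookup runs on the survivors of the cheap stage.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    doc_id: int
    ir_score: float


def rerank(candidates, predoc_filters, lazy_filters, fetch_perdoc, keep=100):
    """Two-stage filtering: cheap PreDoc checks first, expensive lazy checks on survivors only.

    predoc_filters: functions (candidate) -> bool that need no extra data.
    lazy_filters: functions (candidate, perdoc) -> bool that need per-document data.
    fetch_perdoc: hypothetical stand-in for a PerDocData fetch.
    """
    # Stage 1: PreDoc filters run over the full candidate set.
    for keep_candidate in predoc_filters:
        candidates = [c for c in candidates if keep_candidate(c)]
    # Shrink the list before any expensive work happens.
    candidates = sorted(candidates, key=lambda c: c.ir_score, reverse=True)[:keep]
    # Stage 2: lazy filters fetch per-document data only for the survivors.
    survivors = []
    for c in candidates:
        perdoc = fetch_perdoc(c.doc_id)
        if all(check(c, perdoc) for check in lazy_filters):
            survivors.append(c)
    return survivors
```

With 1,000 candidates and `keep=50`, the expensive lookup runs 50 times instead of 1,000.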

Some Twiddlers adjust the IR score, either positively or negatively, while others modify the ranking position directly. Since our document is new to the index, a Twiddler designed to give recent documents a better chance of ranking might, for instance, multiply the IR score by a factor of 1.7. This adjustment could move our document from 132nd place to 81st place.
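A score-adjusting Twiddler can be sketched as a plug-in that returns multipliers without seeing any other Twiddler's output. The 1.7 factor comes from the example above; the 7-day freshness cut-off, the `Result` type and `apply_twiddlers` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    doc_id: str
    ir_score: float
    age_days: int


def freshness_twiddler(results):
    # Hypothetical rule: documents younger than 7 days get the example factor of 1.7.
    return {r.doc_id: 1.7 for r in results if r.age_days < 7}


def apply_twiddlers(results, twiddlers):
    """Each twiddler sees the same, unmodified results and returns {doc_id: multiplier}.

    No twiddler sees another's output, so they stay independent like plug-ins;
    the framework simply multiplies all factors together and re-sorts.
    """
    multipliers = {r.doc_id: 1.0 for r in results}
    for twiddler in twiddlers:
        for doc_id, factor in twiddler(results).items():
            multipliers[doc_id] *= factor
    return sorted(results, key=lambda r: r.ir_score * multipliers[r.doc_id], reverse=True)
```

Adding or removing a plug-in only changes the list passed to `apply_twiddlers`; the underlying IR scores from the Ascorer are never edited.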

Another Twiddler enhances diversity (strideCategory) in the SERPs by devaluing documents with similar content. As a result, several documents ahead of us lose their positions, allowing our pencil document to move up 12 spots to 69. In addition, a Twiddler that limits the number of blog pages to three for specific queries boosts our ranking to 61.

Our page received a zero (for “Yes”) for the CommercialScore attribute. The Mustang system identified a sales intention during analysis. Google likely knows that searches for “pencil” are frequently followed by refined searches like “buy pencil,” indicating a commercial or transactional intent. A Twiddler designed to account for this search intent adds relevant results and boosts our page by 20 positions, moving us up to 41.

Another Twiddler comes into play, enforcing a “page three penalty” that prevents pages suspected of being spam from ranking higher than position 31 (page 3). The best position a document may reach is defined by the BadURL-demoteindex attribute, which prevents ranking above this threshold. Attributes like DemoteForContent, DemoteForForwardlinks and DemoteForBacklinks are used for this purpose. As a result, three documents above us are demoted, allowing our page to move up to position 38.
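Position-based Twiddlers like the page three penalty and the three-blog limit work on ranks rather than scores. The sketch below shows one way to enforce a “best allowed position” and a per-category cap. The `best_position` mapping merely plays the role the article assigns to BadURL-demoteindex, and moving excess blog pages to the end is an assumption; the real behavior is not documented.

```python
def apply_rank_floors(ranking, best_position):
    """Keep demoted DocIDs from ranking above their best allowed (1-based) position.

    ranking: DocIDs in current order. best_position: DocID -> best allowed position;
    DocIDs without an entry are unrestricted. Relative order is otherwise preserved.
    """
    output, waiting = [], []
    for doc in ranking:
        waiting.append(doc)
        placed = True
        while placed:
            placed = False
            next_slot = len(output) + 1
            # Place the earliest waiting document that is allowed into the next slot.
            for i, candidate in enumerate(waiting):
                if best_position.get(candidate, 1) <= next_slot:
                    output.append(waiting.pop(i))
                    placed = True
                    break
    # Documents still waiting when the list runs out go to the end, in order.
    output.extend(waiting)
    return output


def limit_category(ranking, category_of, category, max_count=3):
    """Keep at most max_count results of one category in place; move the rest to the end."""
    kept, overflow, seen = [], [], 0
    for doc in ranking:
        if category_of.get(doc) == category:
            seen += 1
            if seen > max_count:
                overflow.append(doc)
                continue
        kept.append(doc)
    return kept + overflow
```

A suspected spam page ranked first with a floor of 31 ends up exactly at position 31, and every document below it moves up one place, which is how the demotions above free up positions for our page.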

Our document could have been devalued, but to keep things simple, we will assume it remains unaffected. Let's consider one final Twiddler that assesses how relevant our pencil page is to our domain based on embeddings. Since our site focuses exclusively on writing instruments, this works to our advantage and negatively affects 24 other documents.

For instance, imagine a price comparison site that covers a diverse range of topics but has one “good” page about pencils. Because this page's topic differs significantly from the site's overall focus, it would be devalued by this Twiddler.

Attributes like siteFocusScore and siteRadius reflect this thematic distance. As a result, our IR score is boosted once more and other results are downgraded, moving us up to position 14.

As mentioned, Twiddlers serve a wide range of purposes. Developers can experiment with new filters, multipliers or specific position restrictions. It is even possible to rank a result specifically either in front of or behind another result.

One of Google's leaked internal documents warns that certain Twiddler features should only be used by experts and after consulting with the core search team.

“If you think you understand how they work, trust us: you don't. We're not sure that we do either.”

There are also Twiddlers that only create annotations and add them to the DocID on the way to the SERP. An image then appears in the snippet, for example, or the title and/or description is dynamically rewritten later.

If you wondered during the pandemic why your country's national health authority (such as the Department of Health and Human Services in the U.S.) consistently ranked first in COVID-19 searches, it was due to a Twiddler that boosts official sources based on language and country using queriesForWhichOfficial.

You have little control over how Twiddlers reorder your results, but understanding their mechanisms can help you better interpret ranking fluctuations or “inexplicable rankings.” It is useful to review SERPs regularly and note the types of results.

For example, do you consistently see only a certain number of forum or blog posts, even with different search terms? How many results are transactional, informational or navigational? Do the same domains appear repeatedly, or do they vary with slight changes in the search term?

If you notice that only a few online stores are included in the results, it may be less effective to try ranking with a similar site. Instead, consider focusing on more information-oriented content. However, don't jump to conclusions just yet, as the NavBoost system will be discussed later.

Google's quality raters and RankLab

Several thousand quality raters work for Google worldwide to evaluate certain search results and to test new algorithms and/or filters before they go “live.”

Google explains, “Their ratings don't directly influence ranking.”

This is essentially correct, but these votes do have a significant indirect influence on rankings.

Here's how it works: Raters receive URLs or search terms (search results) from the system and answer predetermined questions, usually on mobile devices.

For example, they might be asked, “Is it clear who wrote this content and when? Does the author have professional expertise on this topic?” The answers to these questions are stored and used to train machine learning algorithms. These algorithms analyze the characteristics of good and trustworthy pages compared with less reliable ones.

This approach means that instead of relying on members of Google's search team to define ranking criteria, algorithms use deep learning to identify patterns based on the training data provided by human evaluators.

Let's consider a thought experiment to illustrate this. Imagine people intuitively rate a piece of content as trustworthy if it includes a picture of the author, their full name and a link to a LinkedIn biography. Pages lacking these features are perceived as less trustworthy.

If a neural network is trained on various page features alongside these “Yes” or “No” ratings, it will identify this characteristic as a key factor. After several positive test runs, which typically last at least 30 days, the network might start using this feature as a ranking signal. As a result, pages with an author image, full name and LinkedIn link might receive a ranking boost, potentially through a Twiddler, while pages without these features could be devalued.
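The thought experiment can be reduced to a tiny model trained on “Yes/No” rater labels. As a simplification, this sketch swaps the neural network for plain logistic regression trained by batch gradient descent; the three features and the training data are hypothetical.

```python
import math

# Hypothetical page features from the thought experiment.
FEATURES = ["author_photo", "full_name", "linkedin_link"]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, lr=0.5, epochs=2000):
    """examples: list of (feature_vector, label), label 1 = rated trustworthy ("Yes").

    Batch gradient descent on the average logistic loss.
    """
    n_features = len(examples[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in examples:
            err = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * g / len(examples) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(examples)
    return w, b


def predict(model, x):
    """Probability that a page with features x would be rated trustworthy."""
    w, b = model
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))
```

No rule like “reward author photos” is ever written down: the positive weights emerge from the labels alone, which is why rater answers can shape rankings without directly setting them.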

Google's official stance of not focusing on authors could be consistent with this scenario. However, leaked information reveals attributes like isAuthor and concepts such as “author fingerprinting” through the AuthorVectors attribute, which makes an author's idiolect (their individual use of words and formulations) distinguishable or identifiable, again via embeddings.

Raters' evaluations are compiled into an “information satisfaction” (IS) score. Although many raters contribute, an IS score is only available for a small fraction of URLs. For other pages with similar patterns, this score is extrapolated for ranking purposes.

Google notes, “A lot of documents have no clicks but can be important.” When extrapolation is not possible, the system automatically sends the document to raters to generate a score.
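One plausible way to extrapolate an IS score is a similarity-weighted nearest-neighbor average over rated pages, falling back to human raters when nothing similar exists. Everything here (cosine similarity on feature vectors, `k`, the 0.8 similarity threshold, `estimate_is_score`) is an assumption for illustration, not Google's documented method.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def estimate_is_score(page_vec, rated, k=3, min_similarity=0.8):
    """rated: list of (vector, is_score) for pages that raters have scored.

    Returns (score, needs_rater). The score is a similarity-weighted average
    of the k most similar rated pages that clear min_similarity.
    """
    neighbors = sorted(((cosine(page_vec, v), s) for v, s in rated), reverse=True)
    close = [(sim, s) for sim, s in neighbors[:k] if sim >= min_similarity]
    if not close:
        return None, True  # no similar rated pattern: queue the page for human raters
    total = sum(sim for sim, _ in close)
    return sum(sim * s for sim, s in close) / total, False
```

The fallback branch mirrors the behavior described above: when extrapolation fails, the page goes to raters.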

The term “golden” is mentioned in connection with quality raters, suggesting there may be a gold standard for certain documents or document types. It can be inferred that meeting the expectations of human testers could help your document reach this gold standard. It is also likely that one or more Twiddlers provide a significant boost to DocIDs deemed “golden,” potentially pushing them into the top 10.

Quality raters are not full-time Google employees and may be hired through external companies. In contrast, Google's own experts work within RankLab, where they conduct experiments, develop new Twiddlers and evaluate whether these or refined Twiddlers improve result quality or merely filter out spam.

Proven and effective Twiddlers are then integrated into the Mustang system, where complex, computationally intensive and interconnected algorithms are used.

But what do users want? NavBoost can fix that!

Our pencil document hasn't fully made it yet. Within Superroot, another core system, NavBoost, plays a significant role in determining the order of search results. NavBoost uses “slices” to manage different data sets for mobile, desktop and local searches.

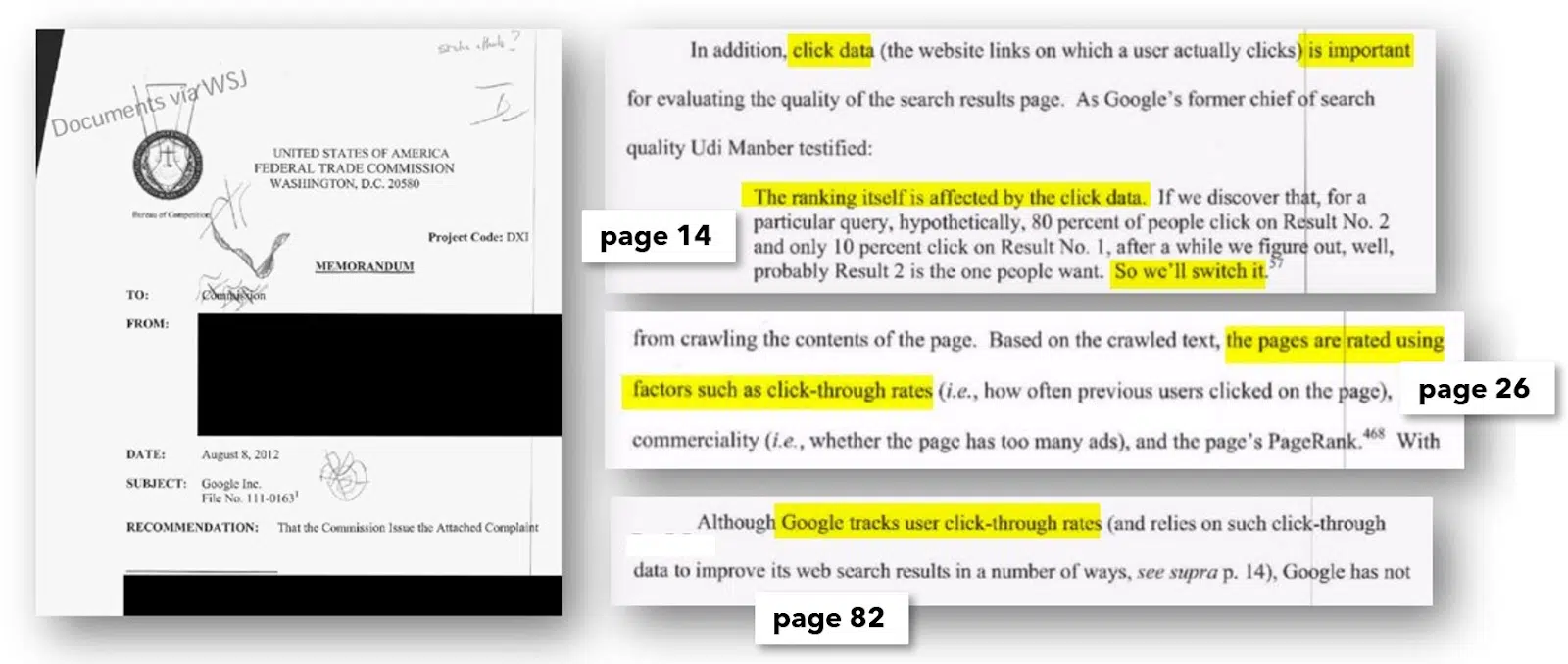

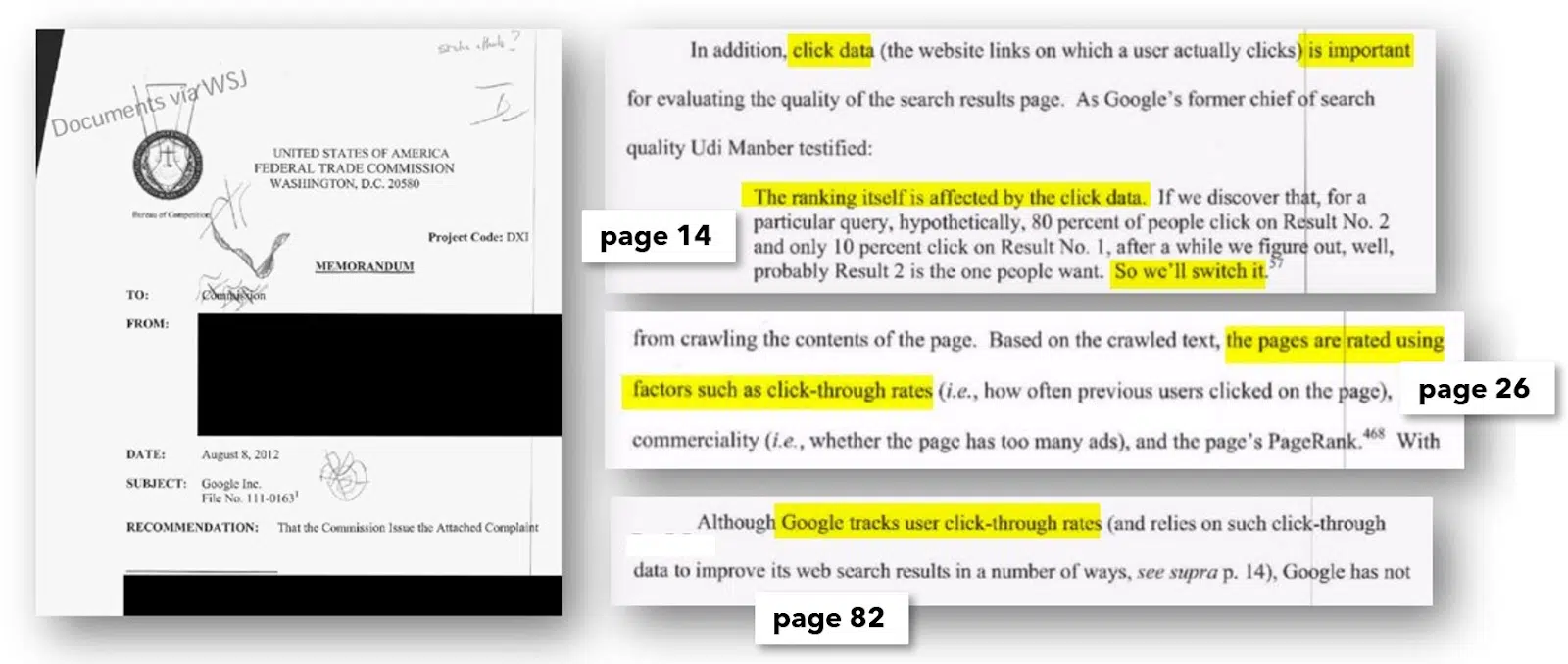

Although Google has officially denied using user clicks for ranking purposes, FTC documents reveal an internal email instructing that the handling of click data must remain confidential.

This should not be held against Google, as the denial of using click data involves two key aspects. First, acknowledging the use of click data could provoke media outrage over privacy concerns, portraying Google as a “data octopus” tracking our online activity. However, the intent behind using click data is to obtain statistically relevant metrics, not to monitor individual users. While data protection advocates might see this differently, this perspective helps explain the denial.

FTC documents confirm that click data is used for ranking purposes and frequently mention the NavBoost system in this context (54 times in the April 18, 2023 hearing). An official hearing in 2012 also revealed that click data influences rankings.

It has been established that both click behavior on search results and traffic on websites or webpages influence rankings. Google can easily evaluate search behavior, including searches, clicks, repeat searches and repeat clicks, directly within the SERPs.

There has been speculation that Google could infer a domain's traffic data from Google Analytics, leading some site owners to avoid using the tool. However, this idea has limitations.

First, Google Analytics does not provide access to all traffic data for a domain. More importantly, with over 60% of people using the Google Chrome browser (over three billion users), Google collects data on a substantial portion of web activity.

This makes Chrome a crucial component for analyzing web activity, as highlighted in the hearings. In addition, Core Web Vitals signals are officially collected through Chrome and aggregated into the “chromeInTotal” value.

The negative publicity associated with “tracking” is one reason for the denial; another is the concern that evaluating click and traffic data could encourage spammers and tricksters to fabricate traffic with bots to manipulate rankings. While the denial may be frustrating, the underlying reasons are at least understandable.

- Among the metrics that are stored are badClicks and goodClicks. The length of time a searcher stays on the target page and information on how many other pages they view there, and at what time (Chrome data), are most likely included in this evaluation.

- A short detour to a search result, followed by a quick return to the results page and further clicks on other results, can increase the number of bad clicks. The search result that received the last “good” click in a search session is recorded as the lastLongestClick.

- The data is squashed (i.e., condensed) so that it is statistically normalized and less susceptible to manipulation.

- If a page, a cluster of pages or the start page of a domain generally has good visitor metrics (Chrome data), this has a positive effect via NavBoost. By analyzing movement patterns within a domain or across domains, it is even possible to determine how well the navigation guides users.

- Since Google measures entire search sessions, it is theoretically even possible in extreme cases to recognize that a completely different document is considered suitable for a search query. If, within a search, a searcher leaves the domain they clicked on in the search results, goes to another domain (perhaps because it was linked from there) and stays there as the recognizable end of the search, this “end” document could be pushed to the front via NavBoost in the future, provided it is available in the selection ring set. However, this would require a strong, statistically relevant signal from many searchers.
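The click bookkeeping in the list above can be sketched for a single session. The 30-second dwell threshold, the rule that a short click only counts as “bad” when another click follows, and the saturating `squash` formula are all hypothetical; the leak names badClicks, goodClicks, lastLongestClick and squashing but not their definitions.

```python
from collections import Counter

SHORT_DWELL_SECONDS = 30  # hypothetical cut-off for a "short detour"


def classify_session(clicks):
    """clicks: ordered list of (doc_id, dwell_seconds) within one search session.

    Returns (good, bad, last_longest): counters of good and bad clicks per DocID,
    and the DocID of the last good click (None if there was none).
    """
    good, bad = Counter(), Counter()
    last_longest = None
    for i, (doc_id, dwell) in enumerate(clicks):
        followed_by_click = i < len(clicks) - 1
        if dwell >= SHORT_DWELL_SECONDS:
            good[doc_id] += 1
            last_longest = doc_id
        elif followed_by_click:
            # Quick return to the SERP followed by another click.
            bad[doc_id] += 1
    return good, bad, last_longest


def squash(count, k=10.0):
    # Saturating transform: each extra click adds less, which blunts bot-inflated counts.
    return count / (count + k)
```

The saturating shape is one simple way to get the property described above: a thousand fabricated clicks move the squashed value only slightly more than a hundred would.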

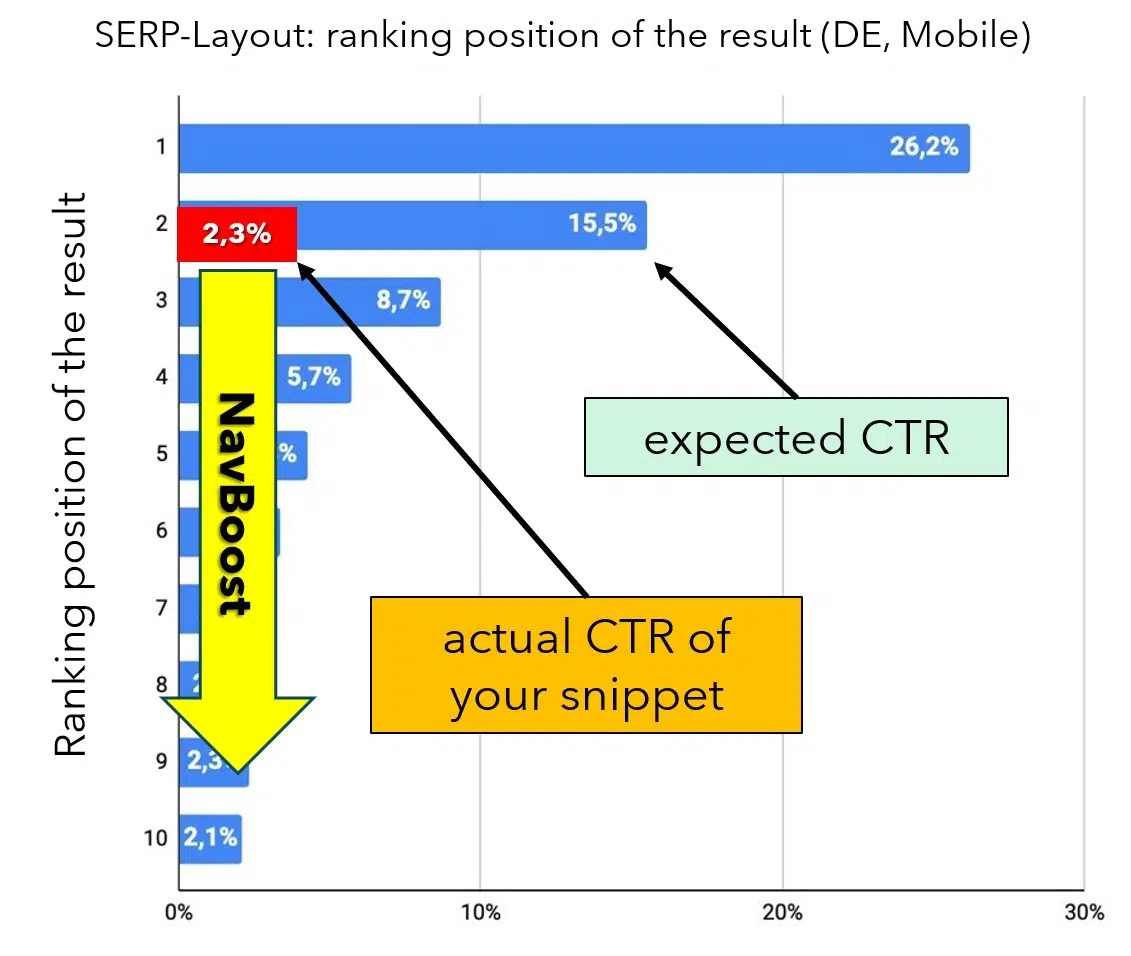

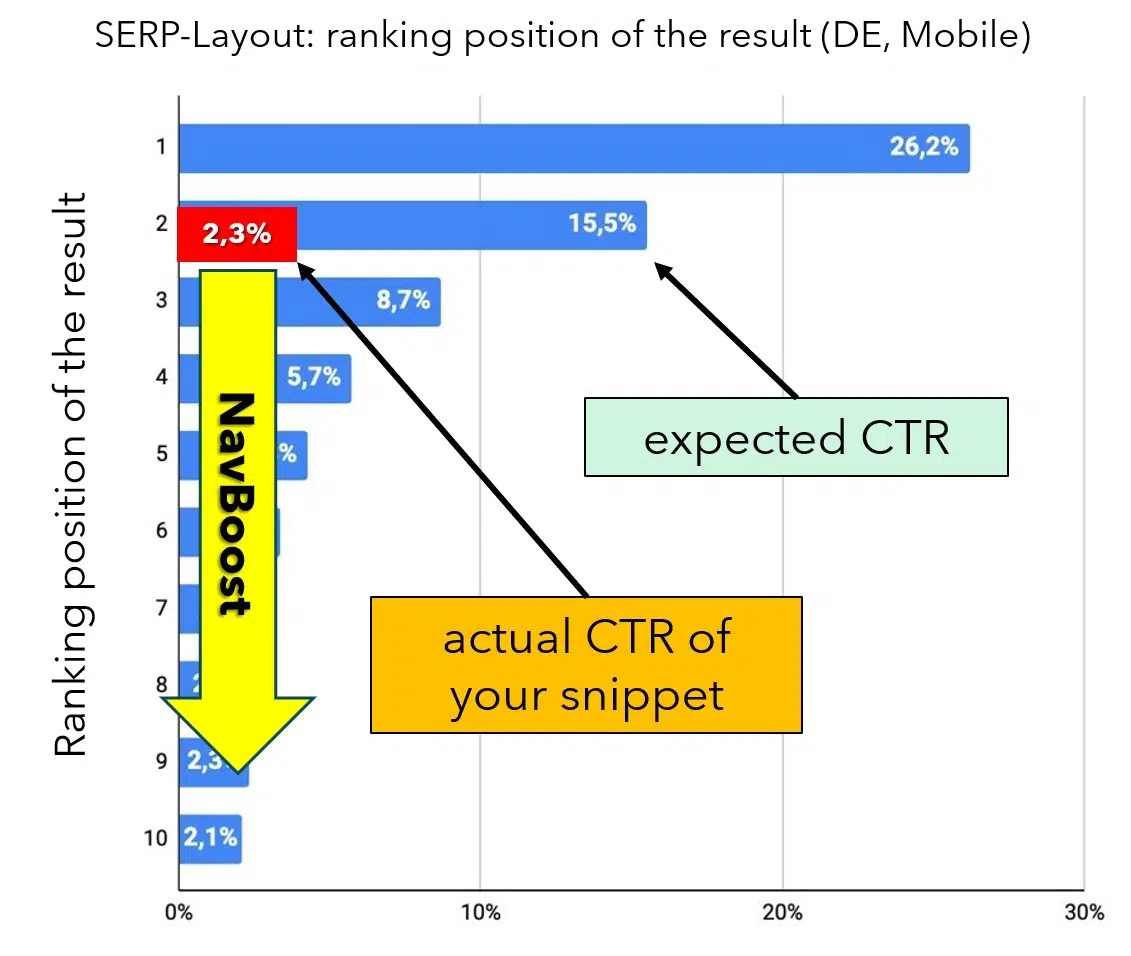

Let's first look at clicks in search results. Each ranking position in the SERPs has an average expected click-through rate (CTR), which serves as a performance benchmark. For example, according to an analysis by Johannes Beus presented at this year's CAMPIXX in Berlin, organic position 1 receives an average of 26.2% of clicks, while position 2 gets 15.5%.

If a snippet's actual CTR falls significantly short of the expected rate, the NavBoost system registers this discrepancy and adjusts the ranking of the DocIDs accordingly. If a result historically generates significantly more or fewer clicks than expected, NavBoost moves the document up or down in the rankings as needed (see Figure 6).
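The expected-versus-actual comparison can be sketched in a few lines. The benchmarks for positions 1 and 2 are the CAMPIXX figures quoted above; the 20% tolerance, the minimum-impressions guard and the +1/0/-1 signal are hypothetical choices, and other positions are deliberately left without a benchmark because none is given here.

```python
# Average CTRs for positions 1 and 2 from the analysis quoted above;
# other positions would need their own benchmarks (not given in this article).
EXPECTED_CTR = {1: 0.262, 2: 0.155}


def ctr_signal(position, impressions, clicks, tolerance=0.2, min_impressions=100):
    """Return +1 (promote), -1 (demote) or 0 (no change / not enough data)."""
    if impressions < min_impressions or position not in EXPECTED_CTR:
        return 0
    ratio = (clicks / impressions) / EXPECTED_CTR[position]
    if ratio > 1 + tolerance:
        return 1
    if ratio < 1 - tolerance:
        return -1
    return 0
```

The `min_impressions` guard reflects the open question discussed next: a brand-new document simply has too little data to be judged either way.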

This approach makes sense because clicks essentially represent a vote by users on the relevance of a result based on its title, description and domain. This concept is even described in official documents, as illustrated in Figure 7.

Since our pencil document is still new, no CTR values are available yet. It is unclear whether CTR deviations are ignored for documents without data, but this seems likely, as the goal is to incorporate user feedback. Alternatively, the CTR might initially be estimated from other values, similar to how Quality Score is handled in Google Ads.

- SEO experts and data analysts have long reported noticing the following phenomenon when comprehensively monitoring their own click-through rates: If a document newly appears in the top 10 for a search query and its CTR falls significantly short of expectations, you can observe a drop in ranking within a few days (depending on the search volume).

- Conversely, the ranking often rises if the CTR is significantly higher than expected for the position. If the CTR is poor, you only have a short time to react and adjust the snippet (usually by optimizing the title and description) so that more clicks are collected. Otherwise, the position deteriorates and is then not so easy to regain. Tests are thought to be behind this phenomenon: if a document proves itself, it can stay; if searchers don't like it, it disappears again. Whether this is actually related to NavBoost is neither clear nor conclusively provable.

Based on the leaked information, it appears that Google uses extensive data from a page's “environment” to estimate signals for new, unknown pages.

For instance, NearestSeedversion suggests that the PageRank of the home page (HomePageRank_NS) is transferred to new pages until they develop their own PageRank. In addition, pnavClicks seems to be used to estimate and assign the probability of clicks through navigation.

Calculating and updating PageRank is time-consuming and computationally intensive, which is why the PageRank_NS metric is probably used instead. “NS” stands for “nearest seed,” meaning that a set of related pages shares a PageRank value, which is temporarily or permanently applied to new pages.
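To see why new pages need a fallback, here is the classic PageRank power iteration (the original published formulation, not Google's current implementation) plus a hypothetical `effective_pagerank` helper in which a page not yet in the computed graph borrows its home page's value, loosely mirroring the nearest-seed idea.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration. links: page -> list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: spread its rank evenly so the total stays 1.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


def effective_pagerank(url, computed, home_page):
    # Hypothetical nearest-seed fallback: a page without a computed value
    # borrows its site's home page value until the next full recalculation.
    return computed.get(url, computed[home_page])
```

Every full recalculation touches the whole link graph, which is why reusing a seed value for new pages between recalculations is so much cheaper.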

It is likely that values from neighboring pages are also used for other critical signals, helping new pages climb the rankings despite lacking significant traffic or backlinks. Many signals are not attributed in real time but may arrive with a notable delay.

- Google itself gave a good example of freshness during a hearing. If you search for “Stanley Cup,” the search results usually feature the famous mug. However, while the Stanley Cup ice hockey games are actually taking place, NavBoost adjusts the results to prioritize information about the games, reflecting changes in search and click behavior.

- Freshness does not refer to new (i.e., “fresh”) documents but to changes in search behavior. According to Google, there are over a billion (that's not a typo) new behaviors in the SERPs every day! So every search and every click contributes to Google's learning. The assumption that Google knows everything about seasonality is probably not correct. Google recognizes fine-grained changes in search intentions and constantly adapts the system, which creates the illusion that Google actually “understands” what searchers want.

According to the latest findings, the click metrics for documents are apparently stored and evaluated over a period of 13 months (one month of overlap for comparisons with the previous year).

Since our hypothetical domain, as a well-known brand, has strong visitor metrics and substantial direct traffic from advertising (a positive signal), our new pencil document benefits from the favorable signals of older, successful pages.

As a result, NavBoost moves our document from 14th to fifth place, putting us in the “blue ring,” the top 10. Our document is then forwarded to the Google Web Server together with the other nine organic results.

- Contrary to expectations, Google does not actually deliver many personalized search results. Tests have probably shown that modeling user behavior and adapting to changes in it delivers better results than evaluating the personal preferences of individual users.

- This is remarkable. Predictions from neural networks now suit us better than our own browsing and click history. However, individual preferences, such as a preference for video content, are still reflected in personal results.

The GWS: Where everything comes to an end and a new beginning

The Google Web Server (GWS) is responsible for assembling and delivering the search results page (SERP). This includes the 10 blue links as well as ads, images, Google Maps views, “People also ask” sections and other elements.

The Tangram system handles geometric space optimization, calculating how much space each element requires and how many results fit into the available “boxes.” The Glue system then arranges these elements in their proper places.

Our pencil document, currently in fifth place, is part of the organic results. However, the CookBook system can intervene at the last moment. This system includes FreshnessNode, InstantGlue (which works on a 24-hour window with a delay of around 10 minutes) and InstantNavBoost. These components generate additional signals related to topicality and can change rankings in the final moments before the page is displayed.

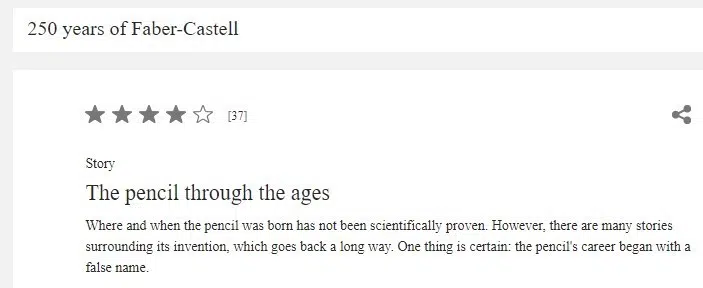

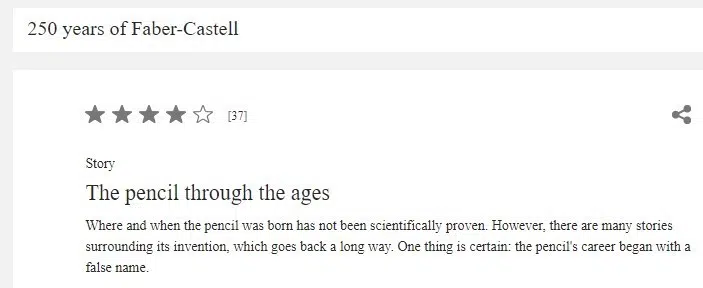

Let's say a German TV program about 250 years of Faber-Castell and the myths surrounding the word “pencil” begins to air. Within minutes, thousands of viewers grab their smartphones or tablets to search online. This is a typical scenario. FreshnessNode detects the surge in searches for “pencil” and, noting that users are looking for information rather than making purchases, adjusts the rankings accordingly.
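How FreshnessNode detects such a surge is not documented. A common, simple approach is to compare the current query rate with a recent baseline; the z-score threshold of 3 and the minimum doubling below are hypothetical parameters.

```python
from statistics import mean, pstdev


def is_surge(history, current, z_threshold=3.0, min_ratio=2.0):
    """history: recent per-minute query counts for a term; current: this minute's count.

    A surge must be both statistically unusual (z-score) and large in absolute
    terms (at least min_ratio times the baseline).
    """
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # Perfectly flat history: fall back to the ratio test alone.
        return current >= baseline * min_ratio
    z = (current - baseline) / spread
    return z >= z_threshold and current >= baseline * min_ratio
```

The ratio test keeps ordinary noise on low-volume terms from triggering a re-rank, while the z-score keeps normal peaks on high-volume terms from doing the same.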

In this exceptional situation, InstantNavBoost removes all transactional results and replaces them with informational ones in real time. InstantGlue then updates the “blue ring,” causing our previously sales-oriented document to drop out of the top rankings and be replaced by more relevant results.
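To make the pencil scenario concrete, here is a toy re-ranking sketch. It assumes a hypothetical surge test (current query volume compared with a baseline, using an arbitrary factor of 5) and a hypothetical intent label on each result. When a surge is detected, transactional results are dropped and the freed slots are backfilled with informational candidates. FreshnessNode, InstantNavBoost and InstantGlue are not publicly documented, so the function names, thresholds and logic below are illustrative assumptions only.

```python
def is_surge(current_volume, baseline_volume, factor=5.0):
    """Hypothetical surge test: volume exceeds the baseline by an assumed factor."""
    return baseline_volume > 0 and current_volume >= factor * baseline_volume


def rerank_for_freshness(ranking, fresh_candidates, current_volume, baseline_volume):
    """Toy last-moment re-rank.

    ranking: list of (url, intent) tuples in their current order.
    fresh_candidates: (url, intent) tuples that may be used to backfill.
    On a surge, transactional results are removed and the freed slots are
    filled with unseen informational candidates; otherwise nothing changes.
    """
    if not is_surge(current_volume, baseline_volume):
        return list(ranking)
    kept = [r for r in ranking if r[1] == "informational"]
    seen = {url for url, _ in kept}
    for url, intent in fresh_candidates:
        if len(kept) >= len(ranking):
            break
        if intent == "informational" and url not in seen:
            kept.append((url, intent))
            seen.add(url)
    return kept


# Hypothetical top five for "pencil"; our shop page sits in fifth place.
ranking = [
    ("shop-a.example/pencils", "transactional"),
    ("wiki.example/pencil", "informational"),
    ("shop-b.example/pencils", "transactional"),
    ("blog.example/pencil-history", "informational"),
    ("our-shop.example/pencils", "transactional"),
]
fresh = [
    ("tv.example/faber-castell-250", "informational"),
    ("news.example/pencil-myths", "informational"),
    ("museum.example/pencils", "informational"),
]
normal = rerank_for_freshness(ranking, fresh, current_volume=1_000, baseline_volume=900)
during_tv_show = rerank_for_freshness(ranking, fresh, current_volume=20_000, baseline_volume=900)
print(during_tv_show)
```

Once the volume drops back toward the baseline, the same function returns the original order, which mirrors the temporary nature of the effect described in the article.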

Unfortunate as it may be, this hypothetical end to our ranking journey illustrates an important point: achieving a high ranking isn’t only about having a great document or implementing the right SEO measures with high-quality content.

Rankings can be influenced by a variety of factors, including changes in search behavior, new signals for other documents and evolving circumstances. Therefore, it’s important to recognize that having a good document and doing a good job with SEO is just one part of a broader and more dynamic ranking landscape.

The process of compiling search results is extremely complex and influenced by thousands of signals. With numerous tests conducted live by SearchLab using Twiddler, even backlinks to your documents can be affected.

The linking documents could be moved from HiveMind to less important tiers, such as SSDs or even TeraGoogle, which can weaken or eliminate their influence on rankings. This can shift ranking scales even when nothing has changed with your own document.

Google’s John Mueller has emphasized that a drop in ranking often doesn’t mean you’ve done anything wrong. Changes in user behavior or other factors can alter how results perform.

For instance, if searchers start preferring more detailed information and shorter texts over time, NavBoost will automatically adjust rankings accordingly. However, the IR score in the Alexandria system or Ascorer remains unchanged.
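The split between a stable IR score and a behavior-driven adjustment can be illustrated with a toy model. Purely for illustration, this sketch assumes the final order comes from multiplying a fixed IR score by a click-derived boost. The multiplicative formula, the `final_order` helper and all numbers are hypothetical, since the leak does not document how NavBoost and Ascorer scores are actually combined.

```python
def final_order(ir_scores, click_boost):
    """Sort URLs by an assumed final score = IR score * user-signal boost.

    ir_scores: dict url -> IR score (treated as fixed, like the unchanged
    Ascorer score in the text).
    click_boost: dict url -> hypothetical multiplier derived from user
    behavior; URLs without an entry default to 1.0 (no adjustment).
    """
    scored = {url: ir * click_boost.get(url, 1.0) for url, ir in ir_scores.items()}
    return sorted(scored, key=scored.get, reverse=True)


ir_scores = {"long-guide.example": 0.80, "short-answer.example": 0.75}

# Before any behavioral shift, the IR score alone decides the order.
before = final_order(ir_scores, {})

# Searchers start favoring the shorter page; only the boost changes.
after = final_order(
    ir_scores, {"short-answer.example": 1.2, "long-guide.example": 0.9}
)
print(before, after)
```

The IR scores in `ir_scores` are never modified, yet the order flips, which is the point the paragraph makes about rankings moving without any change to the document itself.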

One key takeaway is that SEO must be understood in a broader context. Optimizing titles or content won’t be effective if a document and its search intent don’t align.

The influence of Twiddlers and NavBoost on rankings can often outweigh traditional on-page, on-site or off-site optimizations. If these systems limit a document’s visibility, further on-page improvements will have minimal effect.

However, our journey doesn’t end on a low note. The influence of the TV program about pencils is temporary. Once the search surge subsides, FreshnessNode will no longer affect our ranking, and we’ll settle back at fifth place.

As we restart the cycle of collecting click data, a CTR of around 4% is expected for position 5 (based on data from Johannes Beus of SISTRIX). If we can maintain this CTR, we can expect to stay in the top ten. All will be well.
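Checking whether a page keeps pace with the expected CTR is simple arithmetic. This minimal sketch uses the roughly 4% benchmark for position 5 cited above. The impression count, click counts and the 90% tolerance rule are hypothetical assumptions chosen for illustration; how Google itself evaluates click data is not public.

```python
EXPECTED_CTR_POS5 = 0.04  # ~4% for position 5, per the SISTRIX figure cited above


def expected_clicks(impressions, expected_ctr=EXPECTED_CTR_POS5):
    """Clicks you would expect at the benchmark CTR."""
    return impressions * expected_ctr


def meets_benchmark(clicks, impressions, expected_ctr=EXPECTED_CTR_POS5, tolerance=0.9):
    """True if the observed CTR reaches at least `tolerance` x the expected CTR.

    The tolerance is an assumed rule of thumb, not a documented threshold.
    """
    if impressions <= 0:
        return False
    return clicks / impressions >= tolerance * expected_ctr


impressions = 10_000  # hypothetical monthly impressions for "pencil"
print(expected_clicks(impressions))
print(meets_benchmark(clicks=380, impressions=impressions))  # 3.8% observed CTR
print(meets_benchmark(clicks=250, impressions=impressions))  # 2.5% observed CTR
```

In practice, you would take impressions and clicks per query and position from Google Search Console rather than hard-coding them.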

Key SEO takeaways

- Diversify traffic sources: Make sure you receive traffic from various sources, not just search engines. Traffic from less obvious channels, like social media platforms, is also valuable. Even if Google’s crawler can’t access certain pages, Google can still observe how many visitors come to your site through channels like Chrome or direct URLs.

- Build brand and domain awareness: Always work on strengthening your brand or domain name recognition. The more familiar people are with your name, the more likely they are to click on your site in search results. Ranking for many long-tail keywords can also improve your domain’s visibility. Leaks suggest that “site authority” is a ranking signal, so building your brand’s reputation can help improve your search rankings.

- Understand search intent: To better meet your visitors’ needs, try to understand their search intent and journey. Use tools like Semrush or SimilarWeb to see where your visitors come from and where they go after visiting your site. Analyze these domains – do they offer information that your landing pages lack? Gradually add this missing content to become the “final destination” in your visitors’ search journey. Remember, Google tracks related search sessions and knows precisely what searchers are looking for and where they’ve been searching.

- Optimize your titles and descriptions to improve CTR: Start by reviewing your current CTR and making adjustments to enhance click appeal. Capitalizing a few important words can help them stand out visually, potentially boosting CTR; test this approach to see if it works for you. The title plays a critical role in determining whether your page ranks well for a keyword, so optimizing it should be a top priority.

- Evaluate hidden content: If you use accordions to “hide” important content that requires a click to reveal, check whether these pages have a higher-than-average bounce rate. When searchers can’t immediately see that they’re in the right place and have to click several times, the likelihood of negative click signals increases.

- Remove underperforming pages: Pages that nobody visits (according to web analytics) or that don’t achieve a good ranking over longer periods of time should be removed if necessary. Bad signals are also passed on to neighboring pages! If you publish a new document in a “bad” page cluster, the new page has little chance. “deltaPageQuality” apparently measures the qualitative difference between individual documents in a domain or cluster.

- Improve page structure: A clear page structure, easy navigation and a strong first impression are essential for achieving top rankings, often thanks to NavBoost.

- Maximize engagement: The longer visitors stay on your site, the better the signals your domain sends, which benefits all of your subpages. Aim to be the final destination by providing all the information visitors need so they won’t have to search elsewhere.

- Expand existing content rather than constantly creating new pages: Updating and improving your existing content can be more effective. ContentEffortScore measures the effort put into creating a document, with factors like high-quality images, videos, tools and unique content all contributing to this important signal.

- Align your headings with the content they introduce: Make sure that (intermediate) headings accurately reflect the text blocks that follow. Thematic analysis using techniques like embeddings (text vectorization) is more effective at determining whether headings and content match than purely lexical methods.

- Use web analytics: Tools like Google Analytics let you track visitor engagement effectively and identify and address any gaps. Pay particular attention to the bounce rate of your landing pages. If it’s too high, investigate potential causes and take corrective action. Remember, Google can access this data through the Chrome browser.

- Target less competitive keywords: You can also focus on ranking well for less competitive keywords first and thus more easily build up positive user signals.

- Cultivate quality backlinks: Focus on links from recent or high-traffic pages stored in HiveMind, as these provide more valuable signals. Links from pages with little traffic or engagement are less effective. Additionally, backlinks from pages within the same country and those with thematic relevance to your content are more beneficial. Be aware that “toxic” backlinks, which negatively affect your ranking, do exist and should be avoided.

- Pay attention to the context surrounding links: The text before and after a link, not just the anchor text itself, is considered for ranking. Make sure the text flows naturally around the link. Avoid generic phrases like “click here,” which have been ineffective for over twenty years.

- Be mindful of the Disavow tool’s limitations: The Disavow tool, used to invalidate bad links, is not mentioned in the leak at all. It seems that algorithms don’t consider it and that it serves mainly a documentary purpose for spam fighters.

- Consider author expertise: If you use author references, make sure the authors are also recognized on other websites and demonstrate relevant expertise. Having fewer but highly qualified authors is better than having many less credible ones. According to a patent, Google can assess content based on the author’s expertise, distinguishing between experts and laypeople.

- Create unique, helpful, comprehensive and well-structured content: This is especially important for key pages. Demonstrate your genuine expertise on the topic and, if possible, provide evidence of it. While it’s easy to have someone write content just to have something on the page, setting high ranking expectations without real quality and expertise may not be realistic.
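The heading-to-content check mentioned in the takeaways can be sketched with cosine similarity between vectors. This assumes the heading and body vectors come from the same sentence-embedding model of your choice. The short hard-coded vectors below are made-up stand-ins so the example runs without any model, and the 0.7 threshold and the `flag_mismatched_headings` helper are arbitrary, hypothetical choices. The sketch shows the general idea only, not how Google scores headings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_mismatched_headings(sections, threshold=0.7):
    """Return headings whose vector is not similar enough to their section's text.

    sections: list of (heading, heading_vector, body_vector) tuples, where both
    vectors are assumed to come from the same embedding model.
    """
    return [h for h, hv, bv in sections if cosine_similarity(hv, bv) < threshold]


# Made-up 3-dimensional stand-ins for real embeddings.
sections = [
    ("History of the pencil", [0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),
    ("Buy pencils online", [0.1, 0.9, 0.2], [0.7, 0.1, 0.6]),
]
print(flag_mismatched_headings(sections))
```

With these stand-in vectors, the second heading scores about 0.33 against its section and is flagged, suggesting the text below it covers something other than what the heading promises.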

A version of this article was originally published in German in August 2024 in Website Boosting, Issue 87.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff, and contributions are checked for quality and relevance to our readers. The opinions they express are their own.