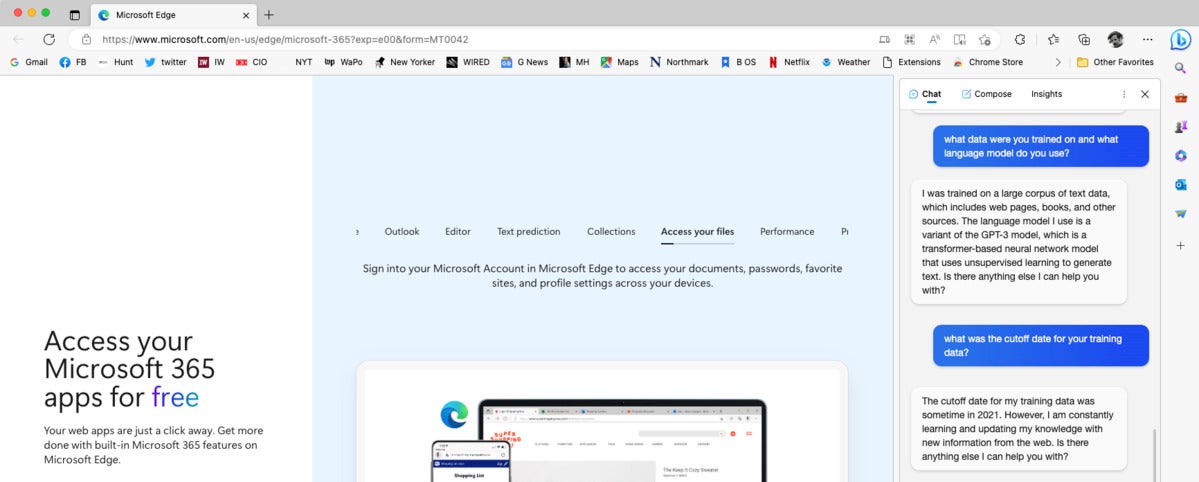

BingGPT explains its language model and training data, as seen in the text window on the right of the screen.

In early March 2023, Professor Pascale Fung of the Centre for Artificial Intelligence Research at the Hong Kong University of Science & Technology gave a talk on ChatGPT evaluation. It’s well worth the hour to watch it.

LaMDA

LaMDA (Language Model for Dialogue Applications), Google’s 2021 “breakthrough” conversation technology, is a Transformer-based language model trained on dialogue and fine-tuned to significantly improve the sensibleness and specificity of its responses. One of LaMDA’s strengths is that it can handle the topic drift that is common in human conversations. While you can’t directly access LaMDA, its impact on the development of conversational AI is undeniable: it pushed the boundaries of what’s possible with language models and paved the way for more sophisticated and human-like AI interactions.

PaLM

PaLM (Pathways Language Model) is a dense decoder-only Transformer model from Google Research with 540 billion parameters, trained with the Pathways system. PaLM was trained on a combination of English and multilingual datasets that include high-quality web documents, books, Wikipedia, conversations, and GitHub code. Google also created a “lossless” vocabulary that preserves all whitespace (especially important for code), splits out-of-vocabulary Unicode characters into bytes, and splits numbers into individual tokens, one for each digit.
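To make the three vocabulary properties concrete, here is a toy Python tokenizer that keeps whitespace runs as tokens, falls back to UTF-8 bytes for out-of-vocabulary characters, and emits one token per digit. It is a sketch under stated assumptions: `TOY_VOCAB`, `toy_tokenize`, and `toy_detokenize` are hypothetical names, and the greedy longest-match step stands in for PaLM’s learned SentencePiece vocabulary, which differs in detail.

```python
# Toy tokenizer illustrating the three "lossless" vocabulary properties described above.
# TOY_VOCAB is a tiny hypothetical vocabulary; PaLM's real vocabulary is a learned
# SentencePiece model with far more entries, which this sketch does not reproduce.

TOY_VOCAB = {"def", "return", "x", "(", ")", ":", "+", "=", "\n"}
MAX_PIECE = max(len(piece) for piece in TOY_VOCAB)
DIGITS = "0123456789"


def toy_tokenize(text: str) -> list[str]:
    tokens: list[str] = []
    i = 0
    while i < len(text):
        ch = text[i]
        if ch in DIGITS:
            # Numbers are split into individual tokens, one per digit.
            tokens.append(ch)
            i += 1
        elif ch in " \t":
            # A run of spaces (or tabs) becomes one token, so indentation survives.
            j = i
            while j < len(text) and text[j] == ch:
                j += 1
            tokens.append(text[i:j])
            i = j
        else:
            # Greedy longest match against the toy vocabulary.
            piece = next(
                (text[i:j] for j in range(min(len(text), i + MAX_PIECE), i, -1)
                 if text[i:j] in TOY_VOCAB),
                None,
            )
            if piece is not None:
                tokens.append(piece)
                i += len(piece)
            else:
                # Out-of-vocabulary character: fall back to its UTF-8 bytes.
                tokens.extend(f"<0x{b:02X}>" for b in ch.encode("utf-8"))
                i += 1
    return tokens


def toy_detokenize(tokens: list[str]) -> str:
    """Invert toy_tokenize exactly, reassembling byte-fallback tokens."""
    out = bytearray()
    for tok in tokens:
        if len(tok) == 6 and tok.startswith("<0x") and tok.endswith(">"):
            out.append(int(tok[3:5], 16))
        else:
            out.extend(tok.encode("utf-8"))
    return out.decode("utf-8")
```

For example, `toy_tokenize("x = 120")` yields `["x", " ", "=", " ", "1", "2", "0"]`, and because nothing is discarded, `toy_detokenize(toy_tokenize(s)) == s` holds for ordinary text, which is what “lossless” means here.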

Google has made PaLM 2 available through the PaLM API and MakerSuite. This means developers can now use PaLM 2 to build their own generative AI applications.

PaLM-Coder is a version of PaLM 540B fine-tuned on a Python-only code dataset.

PaLM-E

PaLM-E is a 2023 embodied (for robotics) multimodal language model from Google. The researchers began with PaLM and “embodied” it (the E in PaLM-E) by complementing it with sensor data from the robotic agent. PaLM-E is also a generally capable vision-and-language model; in addition to PaLM, it incorporates the ViT-22B vision model.
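One way to picture “complementing” a language model with sensor data is the approach described in the PaLM-E paper, where encoded observations are mapped into the same vector space as word embeddings and interleaved with text. The toy sketch below assumes the simplest form of that idea: a single linear projection and an `<obs>` placeholder token. The dimensions, weights, and function names are all hypothetical, and the stand-in embeddings are random rather than learned.

```python
# Toy sketch of a PaLM-E-style "multimodal sentence": continuous sensor features are
# projected into the language model's token-embedding space and spliced into the
# sequence where a placeholder appears. All sizes, weights, and names are hypothetical.

import random

EMBED_DIM = 4     # hypothetical; real models use thousands of dimensions
FEATURE_DIM = 3   # hypothetical size of one sensor/vision feature vector

_rng = random.Random(0)
# Hypothetical "learned" projection (FEATURE_DIM -> EMBED_DIM); here just random numbers.
PROJECTION = [[_rng.uniform(-1, 1) for _ in range(FEATURE_DIM)] for _ in range(EMBED_DIM)]


def project(feature):
    """Linear map from a sensor feature vector into the token-embedding space."""
    return [sum(w * f for w, f in zip(row, feature)) for row in PROJECTION]


def embed_token(token):
    """Stand-in for a text-token embedding lookup (deterministic per token)."""
    rng = random.Random(token)
    return [rng.uniform(-1, 1) for _ in range(EMBED_DIM)]


def build_multimodal_sequence(tokens, observations):
    """Replace each '<obs>' placeholder with the next projected observation."""
    obs_iter = iter(observations)
    sequence = []
    for tok in tokens:
        if tok == "<obs>":
            obs = next(obs_iter, None)
            if obs is None:
                raise ValueError("more <obs> placeholders than observations")
            sequence.append(project(obs))
        else:
            sequence.append(embed_token(tok))
    return sequence
```

For instance, `build_multimodal_sequence(["What", "is", "in", "<obs>", "?"], [[0.2, 0.5, 0.1]])` returns five vectors of equal length; the language model would then process them exactly as it processes an all-text sequence.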

Bard

Bard has been updated several times since its launch. In April 2023 it gained the ability to generate code in 20 programming languages. In July 2023 it gained support for input in 40 human languages, incorporated Google Lens, and added text-to-speech capabilities in over 40 human languages.

LLaMA

LLaMA (Large Language Model Meta AI) is a 65-billion-parameter “raw” large language model released by Meta AI (formerly known as Meta-FAIR) in February 2023. According to Meta:

Training smaller foundation models like LLaMA is desirable in the large language model space because it requires far less computing power and resources to test new approaches, validate others’ work, and explore new use cases. Foundation models train on a large set of unlabeled data, which makes them ideal for fine-tuning for a variety of tasks.

LLaMA was released in several sizes, along with a model card that details how it was built. Originally, you had to request the checkpoints and tokenizer, but they’re in the wild now: a downloadable torrent was posted on 4chan by someone who properly obtained the models by filing a request, according to Yann LeCun of Meta AI.

Llama 2

Llama 2 is the next generation of Meta AI’s large language model, trained between January and July 2023 on 40% more data (2 trillion tokens from publicly available sources) than LLaMA 1, with double the context length (4,096 tokens). Llama 2 comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion), in both pretrained and fine-tuned variations. Meta AI calls Llama 2 open source, but some disagree, given that its license includes restrictions on acceptable use. A commercial license is available in addition to a community license.

Llama 2 is an auto-regressive language model that uses an optimized Transformer architecture. The tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align to human preferences for helpfulness and safety. Llama 2 is currently English-only. The model card includes benchmark results and carbon footprint stats. The research paper, Llama 2: Open Foundation and Fine-Tuned Chat Models, gives more detail.
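“Auto-regressive” means the model generates text one token at a time, feeding each predicted token back in as context for the next prediction. The sketch below shows that loop with greedy decoding. The `BIGRAM_NEXT` table and `next_token_distribution` function are hypothetical stand-ins for a Transformer forward pass; a real model like Llama 2 conditions on the whole context rather than just the last token, and is often run with sampling rather than always picking the most probable token.

```python
# Toy illustration of auto-regressive generation: predict one token, append it to the
# context, repeat. The "model" is a hypothetical hard-coded bigram table, not Llama 2.

BIGRAM_NEXT = {  # hypothetical next-token probabilities given the previous token
    "<s>": {"large": 0.6, "small": 0.4},
    "large": {"language": 0.9, "models": 0.1},
    "small": {"models": 1.0},
    "language": {"models": 1.0},
    "models": {"</s>": 1.0},
}


def next_token_distribution(context):
    """Stand-in for a Transformer forward pass; this toy only looks at the last token."""
    return BIGRAM_NEXT.get(context[-1], {"</s>": 1.0})


def generate_greedy(max_new_tokens=10):
    context = ["<s>"]  # start-of-sequence token
    for _ in range(max_new_tokens):
        probs = next_token_distribution(context)
        token = max(probs, key=probs.get)  # greedy: take the most probable next token
        if token == "</s>":                # end-of-sequence token stops generation
            break
        context.append(token)              # the prediction becomes new context
    return context[1:]
```

Here `generate_greedy()` returns `["large", "language", "models"]`; swapping `max` for a weighted random choice would turn the same loop into sampling.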

Claude

Claude 3.5 is the present main model.

Anthropic’s Claude 2, released in July 2023, accepts up to 100,000 tokens (about 70,000 words) in a single prompt, and can generate stories of up to a few thousand tokens. Claude can edit, rewrite, summarize, classify, extract structured data, do Q&A based on the content, and more. It has the most training in English, but also performs well in a range of other common languages, and still has some ability to communicate in less common ones. Claude also has extensive knowledge of programming languages.
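The figures above imply a rule of thumb of about 0.7 words per token, or roughly 10 tokens for every 7 words. A minimal Python sketch of using that ratio to check whether a document is likely to fit in a 100,000-token prompt follows; `estimate_tokens`, `fits_in_context`, and the `reserve_tokens` allowance are hypothetical helpers, and a word count is only a rough proxy for what a real tokenizer would report.

```python
# Back-of-the-envelope context budgeting using the ratio quoted above for Claude 2:
# 100,000 tokens ≈ 70,000 words, i.e. roughly 10 tokens for every 7 English words.
# Word counts are only a proxy; actual token counts depend on the tokenizer and text.

CONTEXT_TOKENS = 100_000
CONTEXT_WORDS = 70_000


def estimate_tokens(text: str) -> int:
    """Estimate tokens from a whitespace word count using the 100k/70k ratio."""
    words = len(text.split())
    return round(words * CONTEXT_TOKENS / CONTEXT_WORDS)


def fits_in_context(text: str, reserve_tokens: int = 0) -> bool:
    """True if the estimated prompt plus an optional reserve fits the window.

    reserve_tokens is a hypothetical allowance for the response; whether output
    shares the prompt's window is an assumption here, not something stated above.
    """
    return estimate_tokens(text) + reserve_tokens <= CONTEXT_TOKENS
```

By this estimate a seven-word sentence is about 10 tokens, and a 70,000-word manuscript uses the entire window, leaving no headroom.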

Claude was constitutionally trained to be Helpful, Honest, and Harmless (HHH), and extensively red-teamed to be more harmless and harder to prompt into producing offensive or dangerous output. It doesn’t train on your data or consult the internet for answers, although you can provide Claude with text from the internet and ask it to perform tasks with that content. Claude is available to users in the US and UK as a free beta, and has been adopted by commercial partners such as Jasper (a generative AI platform), Sourcegraph Cody (a code AI platform), and Amazon Bedrock.

Conclusion

As we’ve seen, large language models are under active development at several companies, with new versions shipping more or less monthly from OpenAI, Google AI, Meta AI, and Anthropic. While none of these LLMs achieves true artificial general intelligence (AGI), new models mostly tend to improve on older ones. Still, most LLMs are prone to hallucinations and other ways of going off the rails, and may in some instances produce inaccurate, biased, or otherwise objectionable responses to user prompts. In other words, you should use them only if you can verify that their output is correct.