The Vision framework has long included text recognition capabilities. We already have a detailed tutorial that shows you how to scan an image and perform text recognition using the Vision framework. Previously, we used VNImageRequestHandler and VNRecognizeTextRequest to extract text from an image.

Over the years, the Vision framework has evolved significantly. In iOS 18, Vision introduces new APIs that leverage the power of Swift 6. In this tutorial, we will explore how to use these new APIs to perform text recognition. You will be amazed by the improvements in the framework, which save you a significant amount of code when implementing the same feature.

As always, we will create a demo application to guide you through the APIs. We will build a simple app that lets users select an image from the photo library, and the app will extract the text from it as soon as it is selected.

Let's get started.

Loading the Photo Library with PhotosPicker

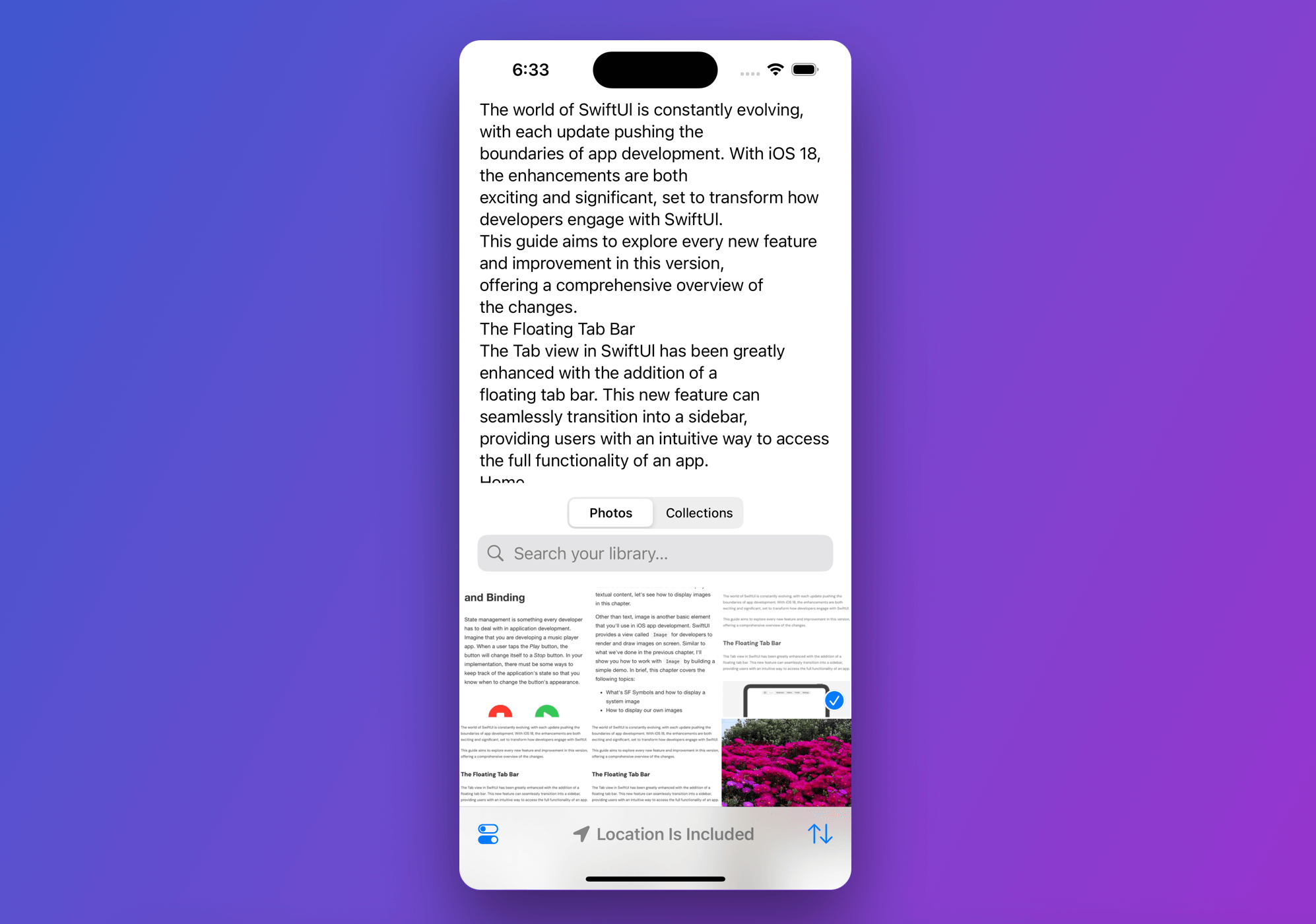

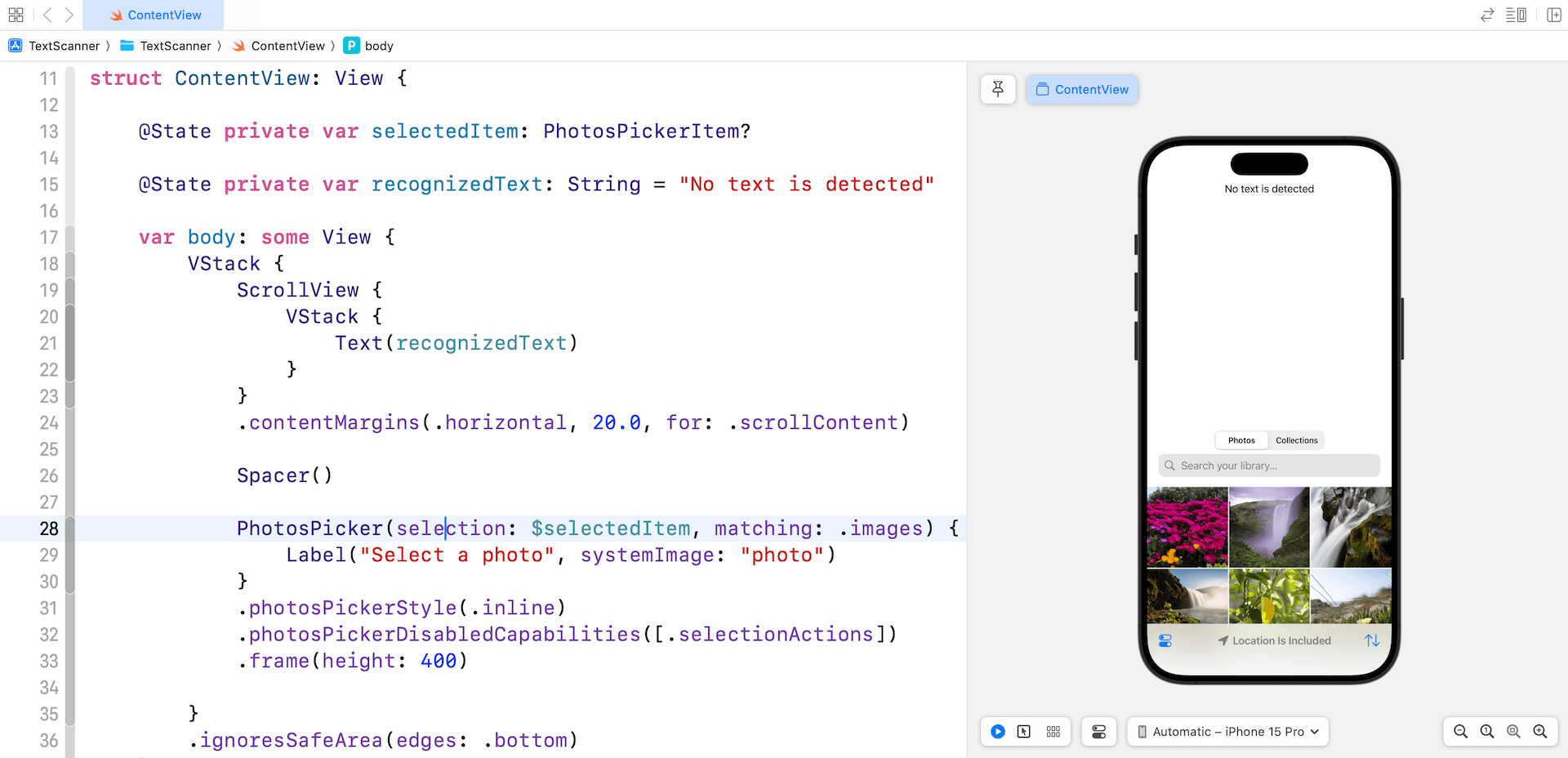

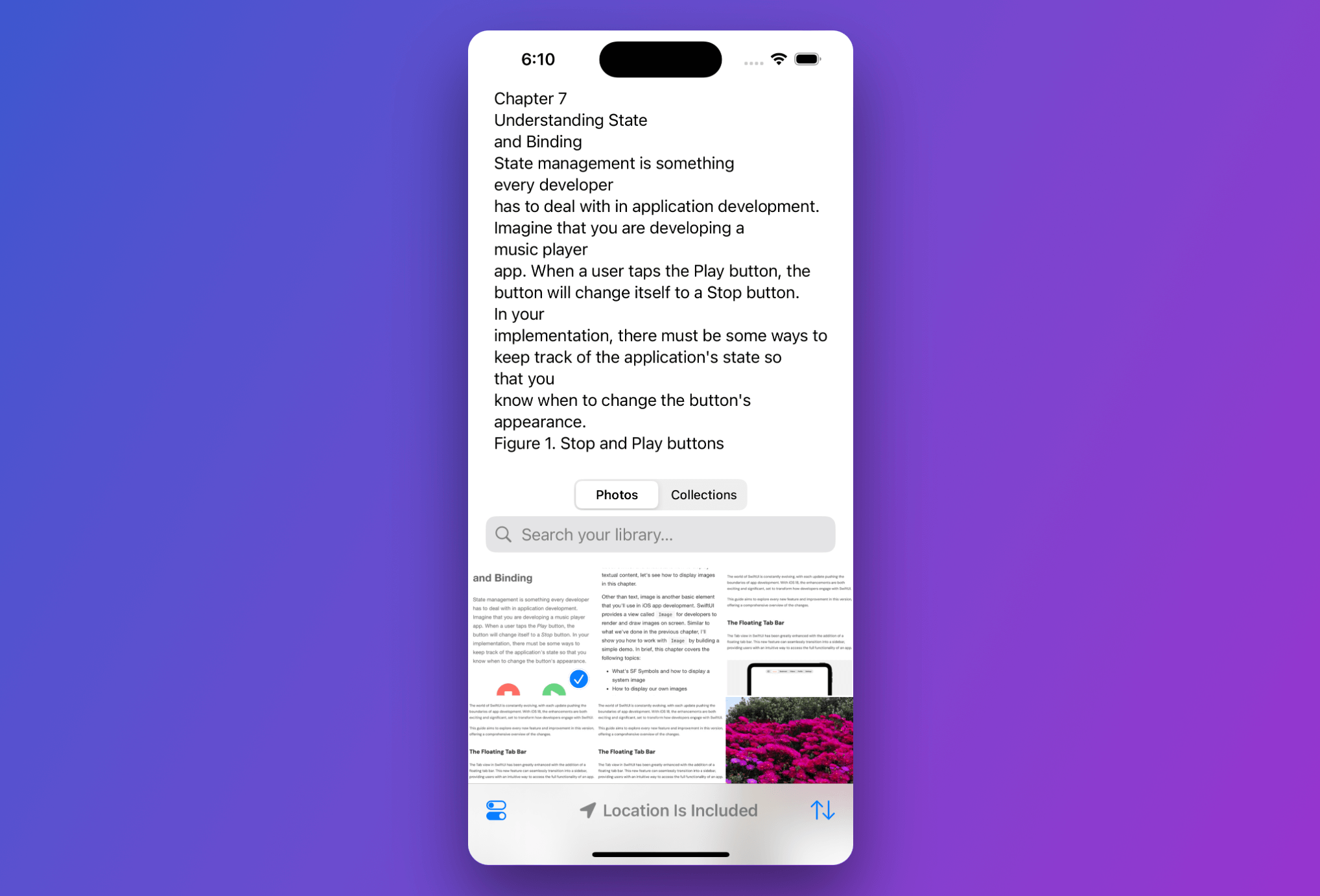

Assuming you've created a new SwiftUI project in Xcode 16, go to ContentView.swift and start building the basic UI of the demo app:

```swift
import SwiftUI
import PhotosUI

struct ContentView: View {
    // The photo currently selected in the picker
    @State private var selectedItem: PhotosPickerItem?
    // The text extracted from the selected photo
    @State private var recognizedText: String = "No text is detected"

    var body: some View {
        VStack {
            // Upper part: scrollable area showing the recognized text
            ScrollView {
                VStack {
                    Text(recognizedText)
                }
            }
            .contentMargins(.horizontal, 20.0, for: .scrollContent)

            Spacer()

            // Lower part: inline photo picker limited to images
            PhotosPicker(selection: $selectedItem, matching: .images) {
                Label("Select a photo", systemImage: "photo")
            }
            .photosPickerStyle(.inline)
            .photosPickerDisabledCapabilities([.selectionActions])
            .frame(height: 400)
        }
        .ignoresSafeArea(edges: .bottom)
    }
}
```

We use PhotosPicker to access the photo library and load the images in the lower part of the screen. The upper part of the screen features a scroll view for displaying the recognized text.

We have a state variable to keep track of the selected photo. To detect the selected image and load it as Data, you can attach the onChange modifier to the PhotosPicker view like this:

```swift
.onChange(of: selectedItem) { oldItem, newItem in
    Task {
        // Load the raw image data of the newly selected photo
        guard let imageData = try? await newItem?.loadTransferable(type: Data.self) else {
            return
        }
        // imageData will be handed to Vision in the next step
    }
}
```

Text Recognition with Vision

The new APIs in the Vision framework have simplified the implementation of text recognition. Vision offers 31 different request types, each tailored for a specific kind of image analysis. For instance, DetectBarcodesRequest is used for identifying and decoding barcodes. For our purposes, we will be using RecognizeTextRequest.
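Because every request type is run the same way, one generic helper can perform any of them. The sketch below assumes that `ImageRequestHandler.perform(_:)` (used later in this tutorial) accepts any `ImageProcessingRequest` and returns that request's associated `Result` type; the helper name `runRequest` is hypothetical and assumes an iOS 18 deployment target.

```swift
import CoreGraphics
import Vision

/// Hypothetical helper: performs any Vision image-analysis request on a CGImage
/// and returns the request's strongly typed result.
func runRequest<R: ImageProcessingRequest>(_ request: R, on image: CGImage) async throws -> R.Result {
    let handler = ImageRequestHandler(image)
    return try await handler.perform(request)
}

/// Example: the same helper serves both text and barcode requests.
func analyze(_ image: CGImage) async throws {
    // Result type: [RecognizedTextObservation]
    let textObservations = try await runRequest(RecognizeTextRequest(), on: image)
    // Result type (assumed): [BarcodeObservation]
    let barcodes = try await runRequest(DetectBarcodesRequest(), on: image)
    print("Found \(textObservations.count) text regions and \(barcodes.count) barcodes")
}
```

Only the request value changes between analyses; the handler and the `try await` call stay identical.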

At the top of ContentView.swift, add `import Vision`. Then, inside the ContentView struct, create a new function named recognizeText:

```swift
private func recognizeText(image: UIImage) async {
    guard let cgImage = image.cgImage else { return }

    // The request describes what to detect; the handler wraps the image to analyze
    let textRequest = RecognizeTextRequest()
    let handler = ImageRequestHandler(cgImage)

    do {
        // perform(_:) returns an array of RecognizedTextObservation
        let result = try await handler.perform(textRequest)

        // Keep only the best candidate string for each text region
        let recognizedStrings = result.compactMap { observation in
            observation.topCandidates(1).first?.string
        }

        // Show one recognized line per row
        recognizedText = recognizedStrings.joined(separator: "\n")
    } catch {
        recognizedText = "Failed to recognize text"
        print(error)
    }
}
```

This function takes a UIImage object, which is the selected photo, and extracts the text from it. The RecognizeTextRequest object is designed to identify rectangular text regions within an image.
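RecognizeTextRequest can also be tuned before it is performed. The following is a minimal sketch, assuming the iOS 18 request exposes `recognitionLevel`, `usesLanguageCorrection`, and `recognitionLanguages` (as `[Locale.Language]`); the factory name `makeTextRequest` and the English-only language list are illustrative assumptions.

```swift
import Foundation
import Vision

/// Hypothetical factory: builds a RecognizeTextRequest tuned for accuracy.
/// The request is a struct, so it must be declared with `var` to be modified.
func makeTextRequest() -> RecognizeTextRequest {
    var request = RecognizeTextRequest()
    // .accurate is slower but better for still photos; .fast suits live video.
    request.recognitionLevel = .accurate
    // Let Vision correct recognized words using its language model.
    request.usesLanguageCorrection = true
    // Assumption: the photos contain English text.
    request.recognitionLanguages = [Locale.Language(identifier: "en-US")]
    return request
}
```

In recognizeText, `RecognizeTextRequest()` could then be replaced with `makeTextRequest()`.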

The ImageRequestHandler object processes the text recognition request on a given image. When we call its perform function, it returns the results as an array of RecognizedTextObservation objects, each containing details about the location and content of the recognized text.
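One detail worth handling: `UIImage.cgImage` returns the raw pixels without the photo's orientation, so text in a rotated photo may be recognized poorly. The sketch below assumes ImageRequestHandler's initializer takes an optional `orientation:` argument of type `CGImagePropertyOrientation`; the extension and the `makeHandler(for:)` helper are hypothetical.

```swift
import ImageIO
import UIKit
import Vision

/// Hypothetical bridge from UIKit's orientation enum to the
/// CGImagePropertyOrientation value that Vision expects.
extension CGImagePropertyOrientation {
    init(_ orientation: UIImage.Orientation) {
        switch orientation {
        case .up: self = .up
        case .upMirrored: self = .upMirrored
        case .down: self = .down
        case .downMirrored: self = .downMirrored
        case .left: self = .left
        case .leftMirrored: self = .leftMirrored
        case .right: self = .right
        case .rightMirrored: self = .rightMirrored
        @unknown default: self = .up
        }
    }
}

/// Hypothetical helper: creates a handler that tells Vision how the pixels are
/// rotated. Returns nil when the UIImage has no CGImage backing.
func makeHandler(for image: UIImage) -> ImageRequestHandler? {
    guard let cgImage = image.cgImage else { return nil }
    return ImageRequestHandler(cgImage, orientation: CGImagePropertyOrientation(image.imageOrientation))
}
```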

We then use compactMap to extract the recognized strings, discarding any observation that has no candidate. The topCandidates method returns the best matches for the recognized text, ordered by confidence. By setting the maximum number of candidates to 1, we ensure that only the top candidate is retrieved.
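Asking for more than one candidate is handy when debugging misreads. A minimal sketch, assuming each `RecognizedText` candidate exposes `string` and a `confidence` value between 0 and 1; the helper name `candidateReport` is hypothetical.

```swift
import Foundation
import Vision

/// Hypothetical helper: for each text region, lists up to three alternative
/// readings with their confidence, e.g. "Hello (0.98) | Hell0 (0.41)".
func candidateReport(for observations: [RecognizedTextObservation]) -> [String] {
    observations.map { observation in
        observation.topCandidates(3)
            .map { "\($0.string) (\(String(format: "%.2f", $0.confidence)))" }
            .joined(separator: " | ")
    }
}
```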

Finally, we use the joined method to concatenate all the recognized strings, separating them with newline characters.
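When Vision finds no text at all, joining an empty array leaves the text view blank. A small pure-Swift helper, hypothetical and not part of Vision, can fall back to the app's placeholder instead:

```swift
/// Hypothetical helper: joins recognized lines with "\n" and returns a
/// placeholder when nothing (or only empty strings) was recognized.
func formatRecognizedText(_ lines: [String], placeholder: String = "No text is detected") -> String {
    let nonEmpty = lines.filter { !$0.isEmpty }
    return nonEmpty.isEmpty ? placeholder : nonEmpty.joined(separator: "\n")
}
```

Inside recognizeText, the final assignment could then become `recognizedText = formatRecognizedText(recognizedStrings)`.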

With the recognizeText method in place, we can update the onChange modifier to call this method, performing text recognition on the selected photo:

```swift
.onChange(of: selectedItem) { oldItem, newItem in
    Task {
        // Load the image data and decode it, bailing out instead of crashing on failure
        guard let imageData = try? await newItem?.loadTransferable(type: Data.self),
              let image = UIImage(data: imageData) else {
            return
        }

        await recognizeText(image: image)
    }
}
```

With the implementation complete, you can now run the app in a simulator to test it out. If you have a photo containing text, the app should successfully extract and display the text on screen.
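If you don't need a UIImage, the data loaded from PhotosPicker could be handed to Vision directly. This is a sketch under the assumption that ImageRequestHandler provides an initializer taking `Data`; the function name `recognizeLines(in:)` is hypothetical.

```swift
import Foundation
import Vision

/// Hypothetical variant: lets Vision decode the image data itself,
/// skipping the UIImage and CGImage conversion.
func recognizeLines(in imageData: Data) async throws -> [String] {
    let handler = ImageRequestHandler(imageData)
    let observations = try await handler.perform(RecognizeTextRequest())
    // Best candidate per text region, as in recognizeText
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

In the onChange closure, this would replace the UIImage decoding step, with errors mapped to the "Failed to recognize text" message.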

Summary

With the introduction of the new Vision APIs in iOS 18, we can now accomplish text recognition tasks with remarkable ease, requiring only a few lines of code. This simplicity allows developers to quickly and efficiently integrate text recognition features into their apps.

What do you think about this improvement to the Vision framework? Feel free to leave a comment below to share your thoughts.