Ambitious artificial intelligence computing startup Cerebras Systems Inc. is raising the stakes in its battle against Nvidia Corp., launching what it says is the world’s fastest AI inference service, and it’s available now in the cloud.

AI inference refers to the process of running live data through a trained AI model to make a prediction or solve a task. Inference services are the workhorse of the AI industry, and according to Cerebras, inference is the fastest-growing segment too, accounting for about 40% of all AI workloads in the cloud today.

However, existing AI inference services don’t appear to meet the needs of every customer. “We’re seeing all sorts of interest in how to get inference done faster and for less money,” Chief Executive Andrew Feldman told a gathering of reporters in San Francisco Monday.

The company intends to deliver on this with its new “high-speed inference” services. It believes the launch is a watershed moment for the AI industry, saying that the 1,000-tokens-per-second speeds it can deliver are comparable to the introduction of broadband internet, enabling game-changing new opportunities for AI applications.

Raw power

Cerebras is well-equipped to offer such a service. The company is a producer of specialized and powerful computer chips for AI and high-performance computing, or HPC, workloads. It has made a number of headlines over the past year, claiming that its chips are not only more powerful than Nvidia’s graphics processing units, but also more cost-effective. “This is GPU-impossible performance,” declared co-founder and Chief Technology Officer Sean Lie.

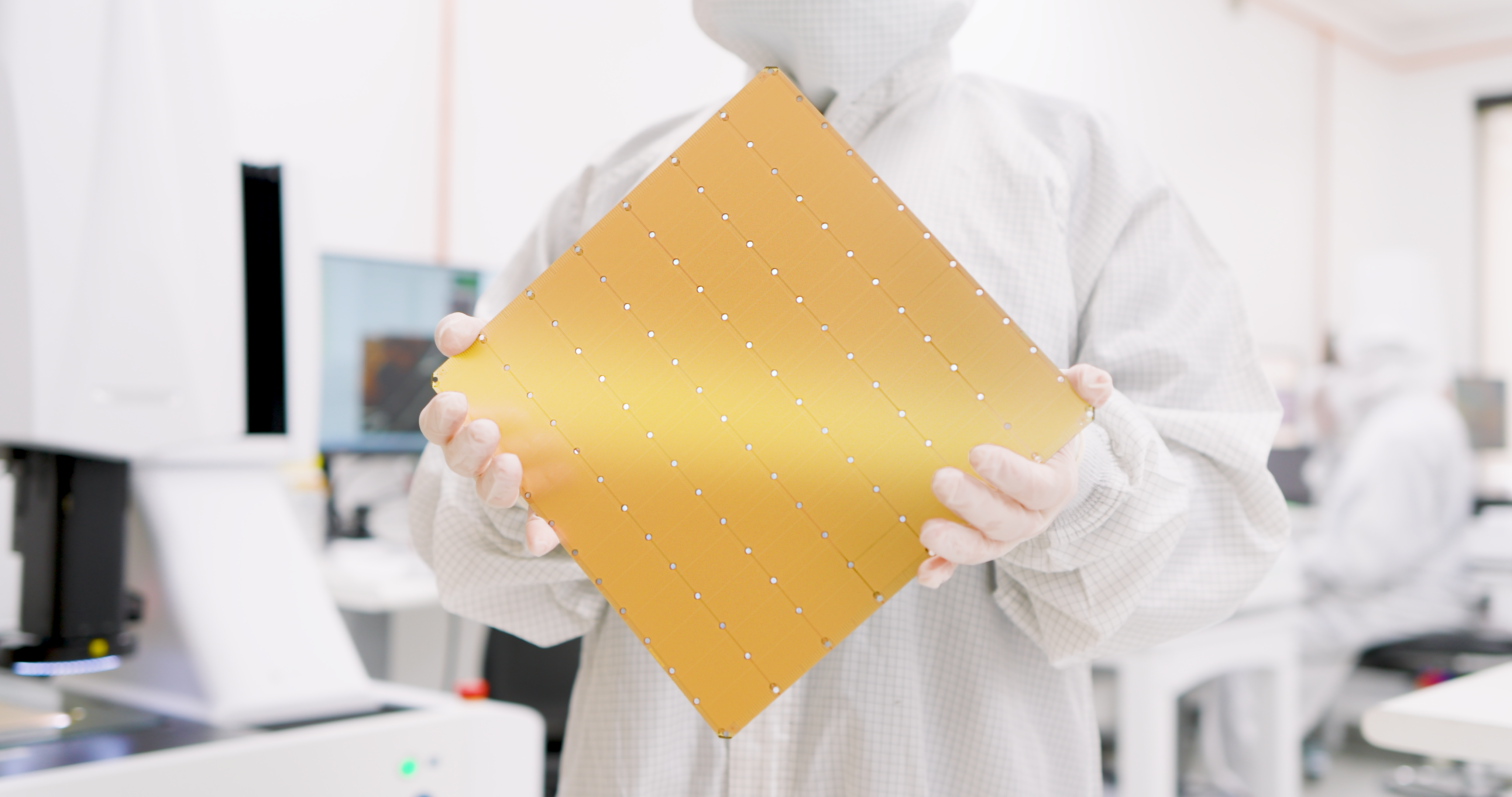

Its flagship product is the new WSE-3 processor (pictured), which was announced in March and builds upon its earlier WSE-2 chipset that debuted in 2021. It’s built on an advanced five-nanometer process and features 1.4 trillion more transistors than its predecessor chip, with more than 900,000 compute cores and 44 gigabytes of onboard static random-access memory. According to the startup, the WSE-3 has 52 times more cores than a single Nvidia H100 graphics processing unit.

The chip is available as part of a data center appliance called the CS-3, which is about the same size as a small refrigerator. The chip itself is about the same size as a pizza, and comes with integrated cooling and power delivery modules. In terms of performance, the Cerebras WSE-3 is said to be twice as powerful as the WSE-2, capable of hitting a peak speed of 125 petaflops, with 1 petaflop equal to 1,000 trillion computations per second.

The Cerebras CS-3 system is the engine that powers the new Cerebras Inference service, and it notably features 7,000 times more memory bandwidth than the Nvidia H100 GPU, addressing one of generative AI’s fundamental technical challenges: the need for more memory bandwidth.

Impressive speeds at lower cost

It solves that challenge in style. The Cerebras Inference service is said to be lightning-quick, up to 20 times faster than comparable cloud-based inference services that use Nvidia’s most powerful GPUs. According to Cerebras, it delivers 1,800 tokens per second for the open-source Llama 3.1 8B model, and 450 tokens per second for Llama 3.1 70B.

It’s competitively priced too, with the startup saying that the service starts at just 10 cents per million tokens, which it says equates to 100 times higher price-performance for AI inference workloads.

The company adds that the Cerebras Inference service is especially well-suited for “agentic AI” workloads, or AI agents that can perform tasks on behalf of users, as such applications need the ability to constantly prompt their underlying models.
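To see why throughput matters for agents that prompt a model repeatedly, here is a rough back-of-the-envelope sketch in Python. It uses only the tokens-per-second figures Cerebras quoted; the number of steps and the tokens generated per step are hypothetical, and the estimate ignores network latency, prompt processing and queueing.

```python
# Throughput figures quoted by Cerebras (tokens per second).
QUOTED_TOKENS_PER_SECOND = {
    "Llama 3.1 8B": 1800,
    "Llama 3.1 70B": 450,
}


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to generate output_tokens at a steady tokens_per_second rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


def chain_seconds(steps: int, tokens_per_step: int, tokens_per_second: float) -> float:
    """Total generation time for an agent that makes `steps` sequential calls."""
    return steps * generation_seconds(tokens_per_step, tokens_per_second)


if __name__ == "__main__":
    # Hypothetical agent: 10 sequential calls, 450 output tokens each.
    for model, rate in QUOTED_TOKENS_PER_SECOND.items():
        total = chain_seconds(steps=10, tokens_per_step=450, tokens_per_second=rate)
        print(f"{model}: {total:.1f} s of generation time")
```

Because each step waits on the previous one, the total grows linearly with the number of calls, so per-call speed compounds across the whole chain.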

Micah Hill-Smith, co-founder and chief executive of the independent AI model analysis company Artificial Analysis Inc., said his team has verified that Llama 3.1 8B and 70B running on Cerebras Inference achieve “quality evaluation results” in line with native 16-bit precision per Meta’s official versions.

“With speeds that push the performance frontier and competitive pricing, Cerebras Inference is particularly compelling for developers of AI applications with real-time or high-volume requirements,” he said.

Tiered access

Customers can choose to access the Cerebras Inference service through three available tiers, including a free offering that provides application programming interface-based access and generous usage limits for anyone who wants to experiment with the platform.

The Developer Tier is for flexible, serverless deployments. It’s accessed via an API endpoint that the company says costs a fraction of the price of alternative services available today. For instance, Llama 3.1 8B is priced at just 10 cents per million tokens, while Llama 3.1 70B costs 60 cents per million tokens. Support for additional models is on the way, the company said.
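As a rough illustration of what those Developer Tier rates mean in practice, the short Python sketch below converts a token count into dollars. It assumes a single flat rate per million tokens, since the announcement does not say whether input and output tokens are billed differently, and the model labels used as dictionary keys are illustrative rather than official identifiers.

```python
# Developer Tier prices quoted in the announcement, in US cents per million tokens.
# The keys are illustrative labels, not official model identifiers.
CENTS_PER_MILLION_TOKENS = {
    "llama-3.1-8b": 10,
    "llama-3.1-70b": 60,
}


def cost_usd(total_tokens: int, cents_per_million: float) -> float:
    """Dollar cost of total_tokens at a flat per-million-token rate (assumed)."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * cents_per_million / 100


if __name__ == "__main__":
    # Hypothetical workload: 5 million tokens on each model.
    for model, cents in CENTS_PER_MILLION_TOKENS.items():
        print(f"{model}: ${cost_usd(5_000_000, cents):.2f}")
```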

There’s also an Enterprise Tier, which offers fine-tuned models and custom service level agreements with dedicated support. That’s for sustained workloads, and it can be accessed via a Cerebras-managed private cloud or else implemented on-premises. Cerebras isn’t revealing the cost of this particular tier but says pricing is available on request.

Cerebras claims an impressive list of early-access customers, including organizations such as GlaxoSmithKline Plc., the AI search engine startup Perplexity AI Inc. and the networking analytics software provider Meter Inc.

Dr. Andrew Ng, founder of DeepLearning AI Inc., another early adopter, explained that his company has developed multiple agentic AI workflows that require prompting an LLM repeatedly to obtain a result. “Cerebras has built an impressively fast inference capability that will be very helpful for such workloads,” he said.

Cerebras’ ambitions don’t end there. Feldman said the company is “engaged with multiple hyperscalers” about offering its capabilities on their cloud services. “We aspire to have them as customers,” he said, as well as AI specialty providers such as CoreWeave Inc. and Lambda Inc.

Besides the inference service, Cerebras also announced a number of strategic partnerships to provide its customers with access to all of the specialized tools required to accelerate AI development. Its partners include the likes of LangChain, LlamaIndex, Docker Inc., Weights & Biases Inc. and AgentOps Inc.

Cerebras said its Inference API is fully compatible with OpenAI’s Chat Completions API, which means existing applications can be migrated to its platform with just a few lines of code.
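What such a migration might look like can be sketched with nothing but the Python standard library. The example below builds a Chat Completions-style request without sending it; the base URL and model name are assumptions made for illustration and should be checked against Cerebras’ own documentation, and `build_chat_request` is a hypothetical helper, not part of any SDK.

```python
import json
import urllib.request

# Assumed values for illustration only; confirm against the provider's docs.
ASSUMED_BASE_URL = "https://api.cerebras.ai/v1"
ASSUMED_MODEL = "llama3.1-8b"


def build_chat_request(base_url: str, api_key: str, model: str,
                       user_message: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions-style POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(ASSUMED_BASE_URL, "YOUR_API_KEY",
                             ASSUMED_MODEL, "Hello")
    # Pointing an existing app at another compatible provider would mean
    # changing only the base URL, API key and model name passed in here.
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it, which requires a valid API key and network access.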

With reporting by Robert Hof

Photo: Cerebras Systems
