Learn how the Azure Hardware Systems and Interconnect team leverages Azure NetApp Files for chip development.

High-performance computing (HPC) workloads place significant demands on cloud infrastructure, requiring robust and scalable resources to handle complex and intensive computational tasks. These workloads often require high levels of parallel processing power, typically provided by clusters of central processing unit (CPU) or graphics processing unit (GPU)-based virtual machines. Additionally, HPC applications demand substantial data storage and fast access speeds, which exceed the capabilities of traditional cloud file systems. Specialized storage solutions are required to meet their low-latency, high-throughput input/output (I/O) needs.

Microsoft Azure NetApp Files is designed to deliver low latency, high performance, and enterprise-grade data management at scale. Its unique capabilities make Azure NetApp Files suitable for several high-performance computing workloads such as Electronic Design Automation (EDA), Seismic Processing, Reservoir Simulations, and Risk Modeling. This blog highlights Azure NetApp Files' differentiated capabilities for EDA workloads and Microsoft's silicon design journey.

Infrastructure requirements of EDA workloads

EDA workloads have intensive computational and data processing requirements to address complex tasks in simulation, physical design, and verification. Each design stage involves multiple simulations to enhance accuracy, improve reliability, and detect design defects early, reducing debugging and redesign costs. Silicon development engineers can use additional simulations to test different design scenarios and optimize the chip's Power, Performance, and Area (PPA).

EDA workloads are classified into two primary types, Frontend and Backend, each with distinct requirements for the underlying storage and compute infrastructure. Frontend workloads focus on logic design and the functional aspects of chip design. They consist of thousands of short-duration parallel jobs with an I/O pattern characterized by frequent random reads and writes across millions of small files. Backend workloads focus on translating the logic design into a physical design for manufacturing. They consist of hundreds of jobs involving sequential reads and writes of fewer, larger files.

Choosing a storage solution that meets this unique combination of frontend and backend workload patterns is non-trivial. The SPEC consortium established the SPEC SFS benchmark to help benchmark the various storage solutions in the industry. For EDA workloads, the EDA_BLENDED benchmark reproduces the characteristic patterns of the frontend and backend workloads. Its I/O operation composition is described in the following table.

| EDA workload stage | I/O operation types (share of operations) |
|---|---|
| Frontend | Stat (39%), Access (15%), Read File (7%), Random Read (8%), Write File (10%), Random Write (15%), Other Ops (6%) |
| Backend | Read (50%), Write (50%) |
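To make the frontend mix concrete, the following Python sketch draws a synthetic operation stream whose proportions match the table. It is an illustration only, not the SPEC SFS harness: the operation names and the `sample_ops` helper are hypothetical, and a real EDA_BLENDED run also controls file sizes, directory layout, and client concurrency.

```python
import random
from collections import Counter

# Frontend operation mix from the EDA_BLENDED table (percent of all operations).
FRONTEND_MIX = {
    "stat": 39,
    "access": 15,
    "read_file": 7,
    "random_read": 8,
    "write_file": 10,
    "random_write": 15,
    "other": 6,
}

# Backend operation mix: half reads, half writes.
BACKEND_MIX = {"read": 50, "write": 50}


def sample_ops(mix: dict[str, int], n: int, seed: int = 0) -> list[str]:
    """Draw n operation names with probability proportional to each mix weight."""
    if sum(mix.values()) != 100:
        raise ValueError("mix percentages must sum to 100")
    rng = random.Random(seed)  # seeded so runs are reproducible
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    ops = sample_ops(FRONTEND_MIX, 100_000)
    counts = Counter(ops)
    for name, pct in FRONTEND_MIX.items():
        observed = 100 * counts[name] / len(ops)
        print(f"{name:>13}: target {pct:>2}%  observed {observed:5.2f}%")
```

Note how metadata operations (Stat and Access) make up more than half of the frontend stream, which is why small-file metadata latency matters as much as raw bandwidth for frontend jobs.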

Azure NetApp Files supports regular volumes, which are ideal for workloads like databases and general-purpose file systems. EDA workloads operate on large amounts of data and require very high throughput, which with regular volumes means spreading the data across several of them. The introduction of large volumes, which support higher quantities of data, is advantageous for EDA workloads because it simplifies data management and delivers superior performance compared to multiple regular volumes.
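The trade-off is simple arithmetic: a workload needs enough volumes to cover both its capacity target and its throughput target, so the volume count is the larger of the two ceilings. The sketch below assumes hypothetical per-volume limits; the `VolumeLimits` values are placeholders chosen for illustration, not published Azure NetApp Files limits, so check the service documentation for current figures before sizing anything.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumeLimits:
    """Hypothetical per-volume ceilings; substitute documented values for your service level."""
    capacity_tib: float
    throughput_gibps: float


def volumes_needed(capacity_tib: float, throughput_gibps: float, limits: VolumeLimits) -> int:
    """Smallest volume count that satisfies both the capacity and the throughput target."""
    by_capacity = math.ceil(capacity_tib / limits.capacity_tib)
    by_throughput = math.ceil(throughput_gibps / limits.throughput_gibps)
    return max(by_capacity, by_throughput, 1)  # always at least one volume


# PLACEHOLDER limits for illustration only, not real Azure NetApp Files figures.
REGULAR = VolumeLimits(capacity_tib=100, throughput_gibps=4)
LARGE = VolumeLimits(capacity_tib=500, throughput_gibps=10)

if __name__ == "__main__":
    target_capacity_tib, target_throughput_gibps = 400, 10
    print("regular volumes:", volumes_needed(target_capacity_tib, target_throughput_gibps, REGULAR))
    print("large volumes:  ", volumes_needed(target_capacity_tib, target_throughput_gibps, LARGE))
```

Taking the maximum of the two requirements matters because a volume count that meets capacity but not throughput (or the reverse) still falls short. With these placeholder numbers, the same 400 TiB, 10 GiB/s target needs four regular volumes but one large volume, and fewer volumes mean fewer namespaces and mount points to manage.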

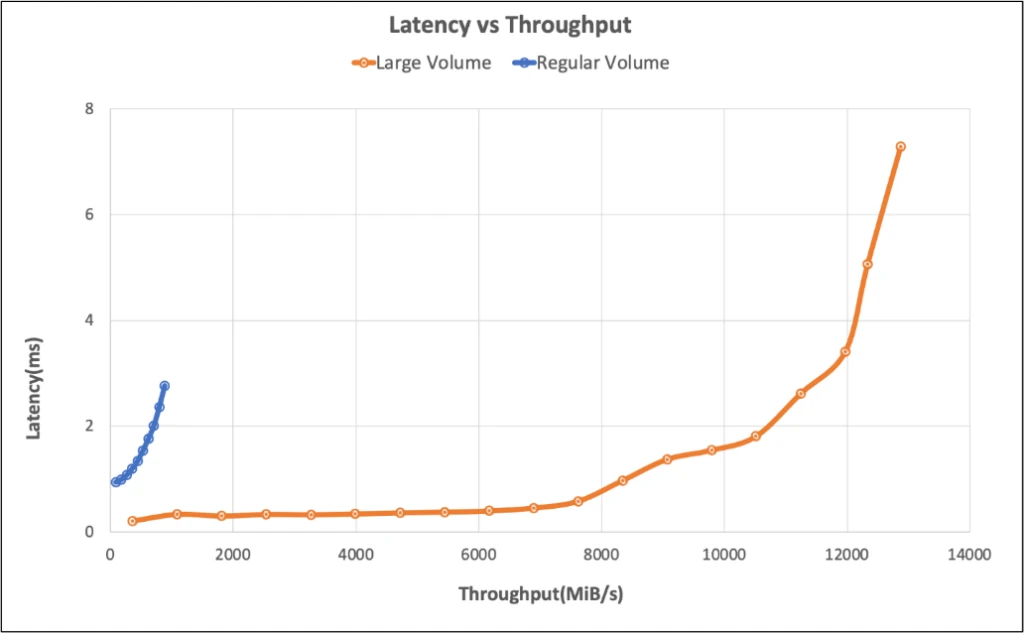

Below is the output from performance testing with the SPEC SFS EDA_BLENDED benchmark, which demonstrates that Azure NetApp Files can deliver ~10 GiB/s throughput with less than 2 ms latency using large volumes.

Electronic Design Automation at Microsoft

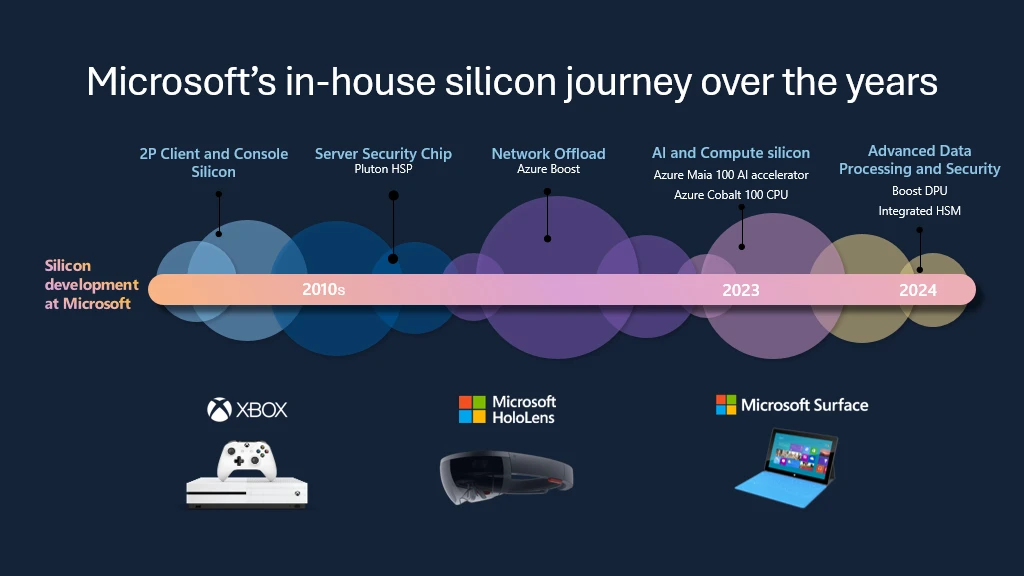

Microsoft is committed to enabling AI on every workload and experience for the devices of today and tomorrow. It starts with the design and manufacturing of silicon. Microsoft is surpassing scientific boundaries at an unprecedented pace for running EDA workflows, pushing the limits of Moore's Law by adopting Azure for our own chip design needs.

Using a best-practices model to optimize Azure for chip design across customers, partners, and suppliers has been critical to the development of some of Microsoft's first fully custom cloud silicon chips:

- The Azure Maia 100 AI Accelerator, optimized for AI tasks and generative AI.

- The Azure Cobalt 100 CPU, an Arm-based processor tailored to run general-purpose compute workloads on Microsoft Azure.

- The Azure Integrated Hardware Security Module, Microsoft's newest in-house security chip, designed to harden key management.

- The Azure Boost DPU, the company's first in-house data processing unit, designed for data-centric workloads with high efficiency and low power.

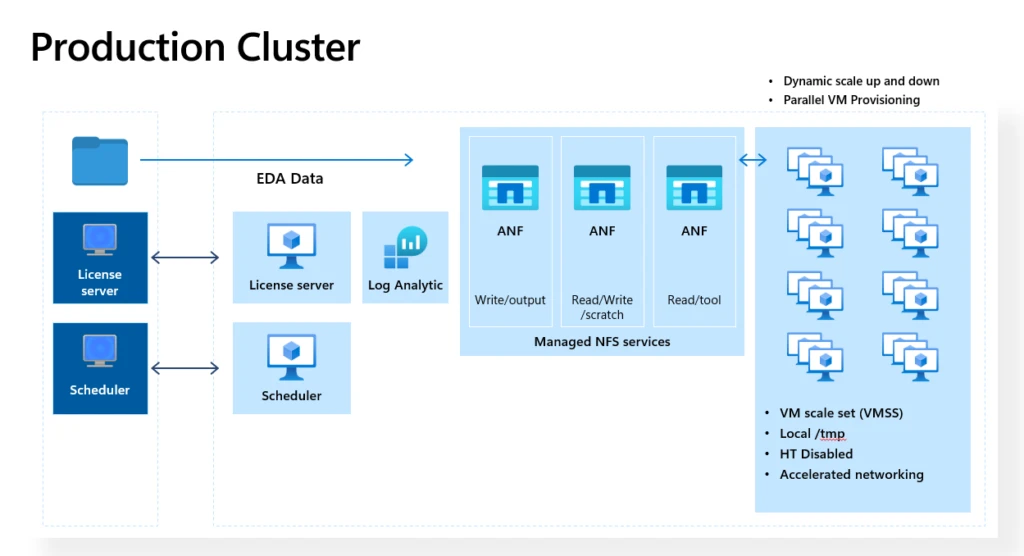

The chips developed by the Azure cloud hardware team are deployed in Azure servers, delivering best-in-class compute capabilities for HPC workloads and further accelerating the pace of innovation, reliability, and operational efficiency used to develop Azure's production systems. By adopting Azure for EDA, the Azure cloud hardware team enjoys these benefits:

- Rapid access to scalable, on-demand, cutting-edge processors.

- Dynamic pairing of each EDA tool with a specific CPU architecture.

- Leveraging Microsoft's innovations in AI-driven technologies for semiconductor workflows.

How Azure NetApp Files accelerates semiconductor development innovation

- Superior performance: Azure NetApp Files can deliver up to 652,260 IOPS with less than 2 milliseconds of latency, while reaching 826,000 IOPS at the performance edge (~7 milliseconds of latency).

- High scalability: As EDA projects advance, the data they generate can grow exponentially. Azure NetApp Files provides large-capacity, high-performance single namespaces with large volumes of up to 2 PiB, seamlessly scaling to support compute clusters of up to 50,000 cores.

- Operational simplicity: Azure NetApp Files is designed for simplicity, with a convenient user experience through the Azure portal or through automation APIs.

- Cost efficiency: Azure NetApp Files offers cool access, which transparently moves cool data blocks to a managed Azure storage tier for reduced cost and then automatically back to the hot tier on access. Additionally, Azure NetApp Files reserved capacity provides significant cost savings compared to pay-as-you-go pricing, further reducing the high costs associated with enterprise-grade storage solutions.

- Security and reliability: Azure NetApp Files provides enterprise-grade data management, control-plane, and data-plane security features, ensuring that critical EDA data stays protected and available, with key management and encryption for data at rest and in transit.
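The IOPS and latency figures in the performance bullet can be related through Little's law, L = λW: the average number of I/Os in flight equals the throughput (IOPS) times the average response time. The following sketch applies it to the two quoted operating points. Treating the quoted latencies as exact averages is an assumption made for illustration; because the first point is "less than 2 ms", its result is an upper bound, and both results are rough estimates of the concurrency the clients must sustain, not measured values.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: average in-flight operations = arrival rate x average time in system."""
    return iops * (latency_ms / 1000.0)  # convert milliseconds to seconds


# Operating points quoted in the performance bullet; latencies treated as averages (assumption).
OPERATING_POINTS = {
    "under 2 ms": (652_260, 2.0),
    "performance edge": (826_000, 7.0),
}

if __name__ == "__main__":
    for label, (iops, latency_ms) in OPERATING_POINTS.items():
        print(f"{label}: ~{outstanding_ios(iops, latency_ms):,.0f} I/Os in flight")
```

Under this reading, moving from the low-latency point (about 1,305 I/Os in flight) to the performance edge (about 5,782) raises IOPS by roughly 27% while requiring about 4.4 times as much concurrency. That is the usual signature of a system approaching saturation: most of the extra in-flight work turns into queueing delay rather than additional throughput.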

The graphic below shows a production EDA cluster deployed in Azure by the Azure cloud hardware team, where Azure NetApp Files serves clients with over 50,000 cores per cluster.

Azure NetApp Files provides the scalable performance and reliability that we need to facilitate seamless integration with Azure for a diverse set of Electronic Design Automation tools used in silicon development.

– Mike Lemus, Director, Silicon Development Compute Solutions at Microsoft.

In today's fast-paced world of semiconductor design, Azure NetApp Files offers agility, performance, security, and stability: the keys to delivering silicon innovation for our Azure cloud.

– Silvian Goldenberg, Partner and General Manager for Design Methodology and Silicon Infrastructure at Microsoft.

Learn more about Azure NetApp Files

Azure NetApp Files has proven to be the storage solution of choice for the most demanding EDA workloads. By providing low latency, high throughput, and scalable performance, Azure NetApp Files supports the dynamic and complex nature of EDA tasks, ensuring rapid access to cutting-edge processors and seamless integration with Azure's HPC solution stack.

Check out the Azure Well-Architected Framework perspective on Azure NetApp Files for detailed information and guidance.

For further information about Azure NetApp Files, check out the Azure NetApp Files documentation.