Over the past 2-3 years, artificial intelligence (AI) agents have become more embedded in the software development process. According to Statista, three out of four developers, or around 75%, use GitHub Copilot, OpenAI Codex, ChatGPT, and other generative AI tools in their daily work.

However, while AI shows promise in handling software development tasks, it creates a wave of legal uncertainty.

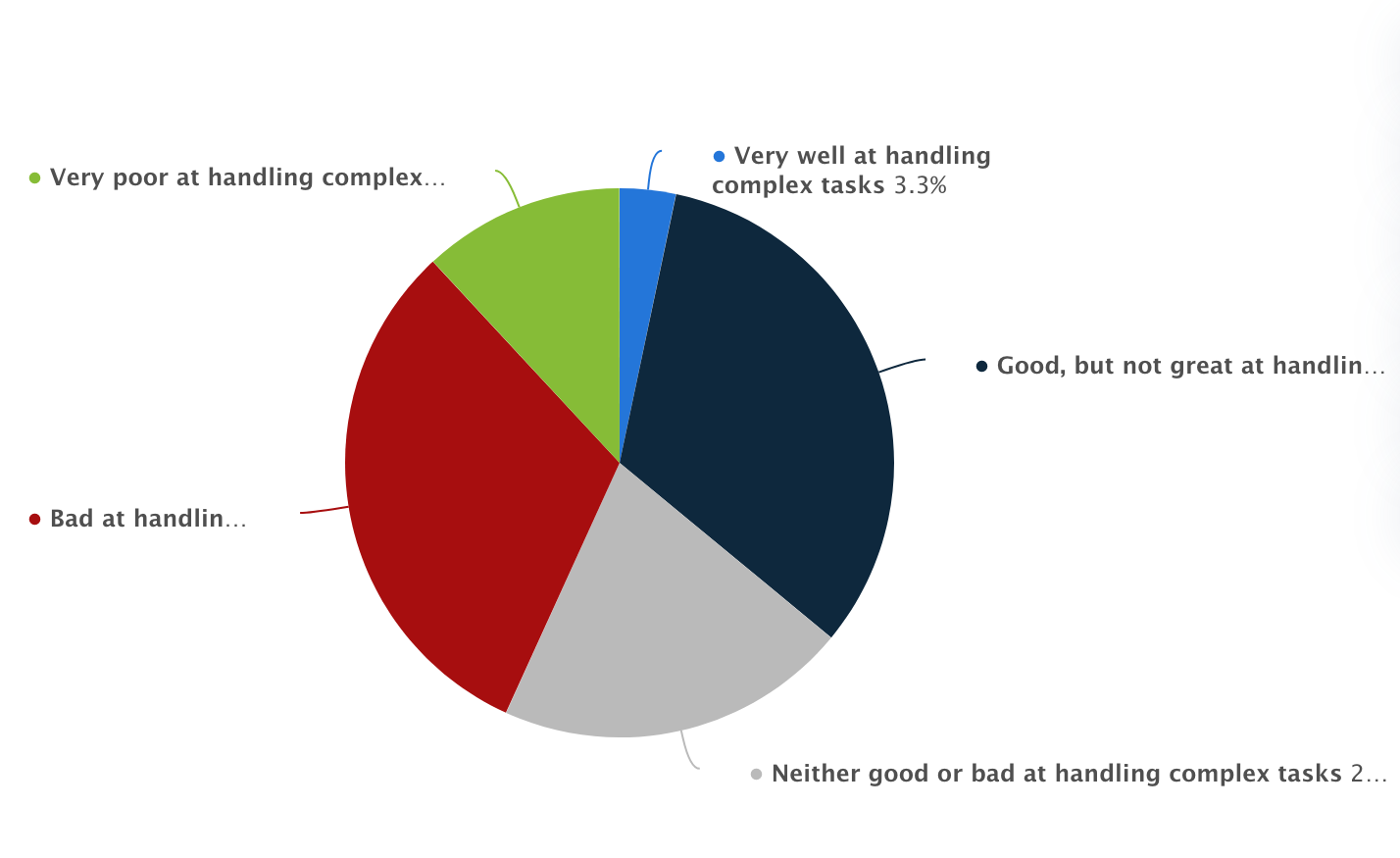

Ability of Artificial Intelligence in Managing Complex Tasks, Statista

Who owns the code written by an AI? What happens if AI-made code infringes on someone else’s intellectual property? And what are the privacy risks when commercial data is processed by AI models?

To answer all these burning questions, we’ll explain how AI development is regarded from the legal side, especially in outsourcing cases, and dive into all the considerations companies should understand before allowing these tools to integrate into their workflows.

What Is AI in Custom Software Development?

The market for AI technologies is vast, amounting to around $244 billion in 2025. Generally, AI is divided into machine learning and deep learning and further into natural language processing, computer vision, and more.

In software development, AI tools refer to intelligent systems that can assist or automate parts of the programming process. They can suggest lines of code, complete functions, and even generate entire modules depending on context or prompts provided by the developer.

In the context of outsourcing projects, where speed is no less important than quality, AI technologies are quickly becoming staples in development environments.

They boost productivity by taking over repetitive tasks, cut the time spent on boilerplate code, and assist developers who may be working in unfamiliar frameworks or languages.

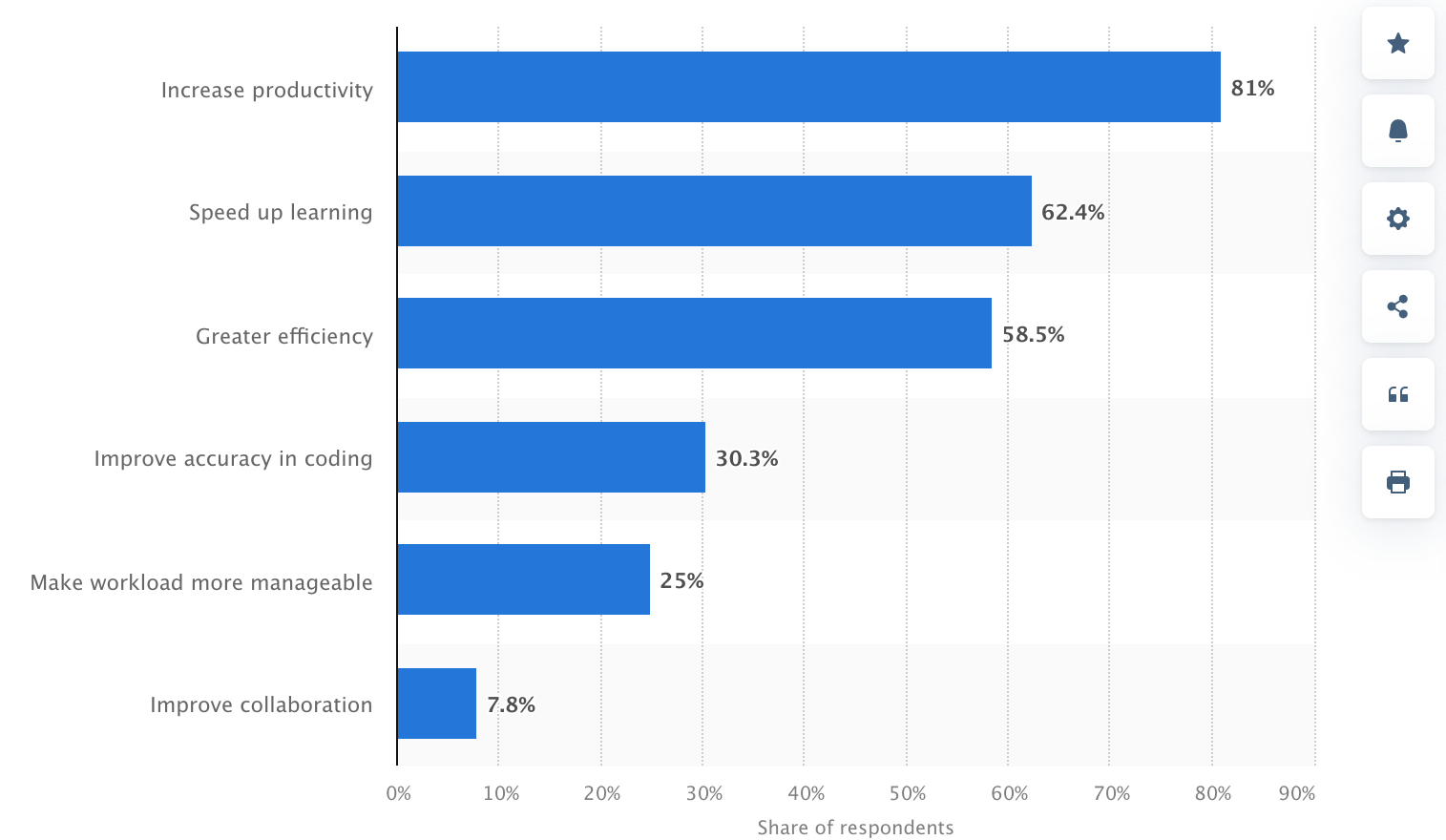

Benefits of Using Artificial Intelligence, Statista

How AI Tools Can Be Integrated in Outsourcing Projects

Artificial intelligence in 2025 has become a desired skill for nearly all technical professions.

While the rare bartender or plumber may not require AI mastery to the same degree, it has become clear that adding an AI skill to a software developer’s arsenal is a must. In the context of software development outsourcing, AI tools can be used in many ways:

- Code Generation: GitHub Copilot and other AI tools assist outsourced developers by offering hints or auto-completing functions as they code.

- Bug Detection: Instead of waiting for human verification in software testing, AI can flag errors or risky code so teams can fix flaws before they become irreversible issues.

- Writing Tests: AI can independently generate test cases from the code, so testing becomes quicker and more exhaustive.

- Documentation Support: AI can leave comments and draw up documentation explaining what the code does.

- Multi-language Support: If the project needs to switch programming languages, AI can help translate or rewrite segments of code, reducing the need for specialized knowledge of every programming language.

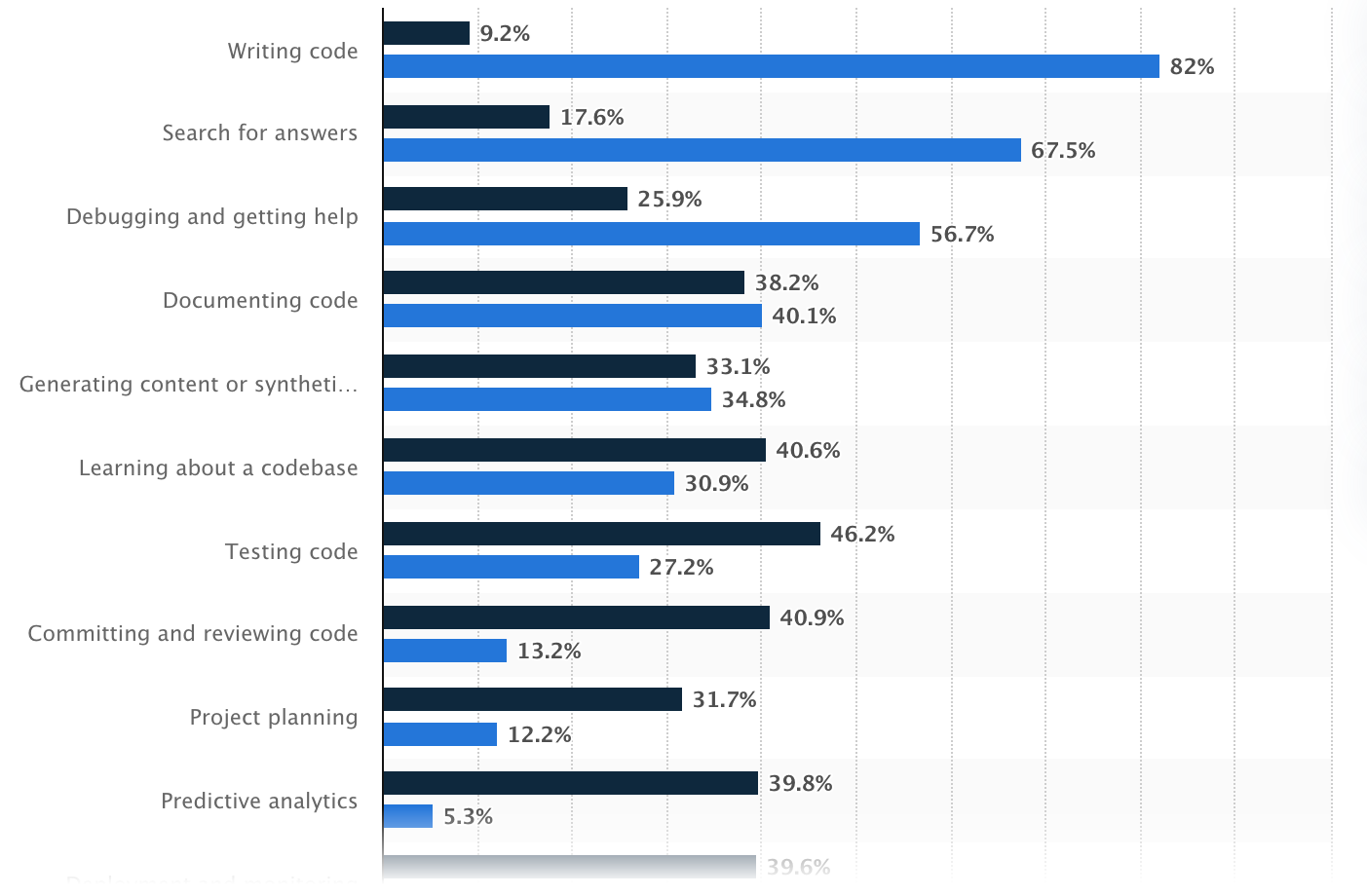

Most popular uses of AI in development, Statista

Legal Implications of Using AI in Custom Software Development

AI tools can be extremely helpful in software development, especially when outsourcing. But using them also raises some legal questions businesses need to be aware of, mainly around ownership, privacy, and responsibility.

Intellectual Property (IP) Issues

When developers use AI tools like GitHub Copilot, ChatGPT, or other code-writing assistants, it’s natural to ask: Who actually owns the code that gets written? This is one of the trickiest legal questions right now.

Currently, there’s no clear global agreement. In general, AI doesn’t own anything, and the developer who uses the tool is considered the “author”; however, this may vary.

The catch is that AI tools learn from tons of existing code on the internet. Sometimes, they generate code that’s very similar (or even identical) to the code they were trained on, including open-source projects.

If that code is copied too closely, and it’s under a strict open-source license, you could run into legal problems, especially if you didn’t know about it or didn’t follow the license rules.

Outsourcing can make it even more problematic. If you’re working with an outsourcing team and they use AI tools during development, you need to be extra clear in your contracts:

- Who owns the final code?

- What happens if the AI tool accidentally reuses licensed code?

- Is the outsourced team allowed to use AI tools at all?

To stay on the safe side, you can:

- Make sure that contracts clearly state who owns the code.

- Double-check that the code doesn’t violate any licenses.

- Consider using tools that run locally or limit what the AI sees to avoid leaking or copying restricted content.
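
The license double-check above can be partly automated. The following is a minimal sketch, not a substitute for a dedicated license scanner or legal review: it only looks for explicit license markers (SPDX identifiers and a few well-known license phrases) in a snippet of AI-suggested code. The function name `find_license_markers` and the phrase list are assumptions made for illustration.

```python
import re
from typing import List

# Hypothetical, deliberately short list of phrases that often appear in license headers.
LICENSE_PHRASES = [
    "GNU General Public License",
    "GNU Affero General Public License",
    "Mozilla Public License",
    "Apache License",
    "All rights reserved",
]

# SPDX short identifiers appear in comments such as "SPDX-License-Identifier: MIT".
SPDX_PATTERN = re.compile(r"SPDX-License-Identifier:\s*([A-Za-z0-9.+\-]+)")


def find_license_markers(code: str) -> List[str]:
    """Return explicit license markers found in a code snippet."""
    # Collect SPDX identifiers first, prefixed so they are easy to tell apart.
    markers = [f"SPDX:{identifier}" for identifier in SPDX_PATTERN.findall(code)]
    # Then look for well-known license phrases, ignoring case.
    lowered = code.lower()
    for phrase in LICENSE_PHRASES:
        if phrase.lower() in lowered:
            markers.append(phrase)
    return markers


# Usage: flag an AI suggestion that carries a license header for manual review.
snippet = "# SPDX-License-Identifier: GPL-3.0-only\ndef add(a, b):\n    return a + b\n"
print(find_license_markers(snippet))
```

An empty result only means that no explicit marker was found. Code copied without its header would pass this check, so human review is still needed.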

Data Protection and Privacy

When using AI tools in software development, especially in outsourcing, another major consideration is data privacy and security. So what’s the risk?

Most AI tools, such as ChatGPT and Copilot, run in the cloud, which means the information developers put into them may be transmitted to external servers.

If developers copy and paste proprietary code, login credentials, or commercial data into these tools, that information could be retained, reused, and later exposed. The situation becomes even worse if:

- You’re sharing confidential business information

- Your project involves customer or user details

- You’re in a regulated industry such as healthcare or finance

So what does the law say about it? Different countries have different regulations, but the most notable are:

- GDPR (Europe): In simple terms, GDPR protects personal data. If you gather data from people in the EU, you have to explain what you’re collecting and why you need it, and in many cases get their permission first. People can ask to see their data, correct anything wrong, or have it deleted.

- HIPAA (US, healthcare): HIPAA covers private health information and medical records. If you are subject to HIPAA, you can’t just paste anything related to patient records into an AI tool or chatbot, especially one that runs online. Also, if you work with other companies (outsourcing teams or software vendors), they need to follow the same rules and sign a special agreement (a business associate agreement) to make it all legal.

- CCPA (California): CCPA is a privacy law that gives people more control over their personal information. If your business collects data from California residents, you have to let them know what you’re gathering and why. People can ask to see their data, have it deleted, or stop you from sharing or selling it. Even if your company is based somewhere else, you still have to follow CCPA if you’re processing data from people in California.

The obvious and logical question here is how to protect data. First, don’t put anything sensitive (passwords, customer information, or private company data) into public AI tools unless you’re sure they’re safe.

For projects that involve confidential information, it’s better to use AI assistants that run on local computers and don’t send anything to the internet.

Also, take a good look at the contracts with any outsourcing partners to make sure they’re following the right practices for keeping data safe.
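
One practical way to keep sensitive values out of public AI tools is to scrub text before it leaves the developer’s machine. The sketch below is an illustration under stated assumptions, not a complete data-loss-prevention solution: the two regular expressions and the `redact` function are hypothetical, and a real project would tune the patterns to its own secrets and identifiers.

```python
import re

# Hypothetical pattern for email addresses (customer or user details).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Hypothetical pattern for assignments such as `password = "..."` or `API_KEY=...`.
# Group 1 is the key name, group 2 the separator, group 3 the secret value.
ASSIGNMENT_RE = re.compile(
    r"(password|passwd|api[_-]?key|secret|token)(\s*[:=]\s*)(\"[^\"]*\"|'[^']*'|\S+)",
    re.IGNORECASE,
)


def redact(text: str) -> str:
    """Replace likely secrets and email addresses with placeholders."""
    # Keep the key name and separator, drop only the value.
    text = ASSIGNMENT_RE.sub(r"\1\2[REDACTED]", text)
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return text


# Usage: scrub a prompt before pasting it into a cloud-based assistant.
prompt = 'DB_PASSWORD = "hunter2"  # ask alice@example.com for access'
print(redact(prompt))
```

Pattern-based redaction will miss secrets that do not match the patterns, so it complements, rather than replaces, the rule of not pasting confidential material into public tools.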

Accountability and Responsibility

AI tools can perform many tasks, but they don’t take responsibility when something goes wrong. The blame still falls on people: the developers, the outsourcing team, and the business that owns the project.

If the code has a flaw, creates a security hole, or causes damage, it’s not the AI’s fault; it’s the people using it who are responsible. If no one takes ownership, small mistakes can turn into big (and expensive) issues.

To avoid this situation, businesses need clear guidelines and human oversight:

- Always review AI-generated code. It’s just a starting point, not a finished product. Developers still need to test, debug, and verify every single part.

- Assign responsibility. Whether it’s an in-house team or an outsourced partner, make sure that someone is clearly responsible for quality control.

- Include AI in your contracts. Your agreement with an outsourcing provider should say:

  - Whether they can use AI tools.

  - Who is responsible for reviewing the AI’s work.

  - Who pays for fixes if something goes wrong because of AI-generated code.

- Keep a record of AI usage. Document when and how AI tools are used, especially for major code contributions. That way, if problems emerge, you can trace back what happened.
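
A record of AI usage does not need special tooling. As a minimal sketch, assuming a team is happy with a plain JSON Lines file, each AI-assisted change could be logged as one line naming the tool, the file, and the human reviewer. The `AIUsageRecord` fields, the function names, and the default file name `ai_usage_log.jsonl` are hypothetical choices for illustration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    """One entry describing where and how an AI tool contributed code."""
    tool: str            # e.g. "GitHub Copilot"
    file_path: str       # file that received the AI-assisted change
    description: str     # what the AI produced
    reviewer: str        # person who reviewed the AI output
    timestamp: str = ""  # ISO 8601; filled in automatically when empty


def to_log_line(record: AIUsageRecord) -> str:
    """Serialize a record to a single JSON line, adding a UTC timestamp if missing."""
    data = asdict(record)
    if not data["timestamp"]:
        data["timestamp"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(data)


def append_to_log(record: AIUsageRecord, log_path: str = "ai_usage_log.jsonl") -> None:
    """Append the record to a JSON Lines file (one JSON object per line)."""
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(to_log_line(record) + "\n")


# Usage: build a record and show the line that would be written to the log.
record = AIUsageRecord(
    tool="GitHub Copilot",
    file_path="src/validation.py",
    description="Generated input validation helper",
    reviewer="j.doe",
)
print(to_log_line(record))
```

Keeping the reviewer in every entry ties each AI contribution to a named person, which matches the point above about assigning responsibility.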

Case Studies and Examples

AI in software development is already a common practice among many tech giants, although statistically, smaller companies with fewer employees are more likely to use artificial intelligence than larger companies.

Below, we have compiled some real-world examples that show how different businesses are applying AI and the lessons they’re learning along the way.

Nabla (Healthcare AI Startup)

Nabla, a French healthtech company, integrated GPT-3 (via OpenAI) to assist doctors with writing medical notes and summaries during consultations.

How they use it:

- AI listens to patient-doctor conversations and creates structured notes.

- The time doctors spend on admin work visibly shrinks.

Legal & privacy actions:

- Because they operate in a healthcare setting, Nabla intentionally chose not to use OpenAI’s API directly due to concerns about data privacy and GDPR compliance.

- Instead, they built their own secure infrastructure using open-source models like GPT-J, hosted locally, to ensure no patient data leaves their servers.

Lesson learned: In privacy-sensitive industries, using self-hosted or private AI models is often a safer path than relying on commercial cloud-based APIs.

Replit and Ghostwriter

Replit, a collaborative online coding platform, developed Ghostwriter, its own AI assistant similar to Copilot.

How it’s used:

- Ghostwriter helps users (including beginners) write and complete code right in the browser.

- It’s integrated across Replit’s development platform, often used in education and startups.

Challenge:

- Replit has to balance ease of use with license compliance and transparency.

- The company provides disclaimers encouraging users to review and edit the generated code, underlining that it is only a suggestion.

Lesson learned: AI-generated code is powerful but not always safe to use “as is.” Even platforms that build AI tools themselves push for human review and caution.

Amazon’s Internal AI Coding Tools

Amazon has developed its own internal AI-powered tools, similar to Copilot, to assist its developers.

How they use it:

- AI helps developers write and review code across multiple teams and services.

- It’s used internally to improve developer productivity and speed up delivery.

Why they don’t use external tools like Copilot:

- Amazon has strict internal policies around intellectual property and data privacy.

- They prefer to build and host tools internally to sidestep legal risks and protect proprietary code.

Lesson learned: Large enterprises often avoid third-party AI tools due to concerns about IP leakage and loss of control over sensitive data.

How to Safely Use AI Tools in Outsourcing Projects: General Recommendations

Using AI tools in outsourced development can bring faster delivery, lower costs, and higher coding productivity. But to do it safely, companies need to set up the right processes and protections from the start.

First, it’s important to make AI usage expectations clear in contracts with outsourcing partners. Agreements should specify whether AI tools can be used, under what conditions, and who is responsible for reviewing and validating AI-generated code.

These contracts should also include strong intellectual property clauses, spelling out who owns the final code and what happens if AI accidentally introduces open-source or third-party licensed content.

Data protection is another critical concern. If developers use AI tools that send data to the cloud, they should never enter sensitive or proprietary information unless the tool complies with the regulations that apply to that data, such as GDPR, HIPAA, or CCPA.

In highly regulated industries, it’s safer to use self-hosted AI models or versions that run in a controlled environment to minimize the risk of data exposure.

To avoid legal and quality issues, companies should also implement human oversight at every stage. AI tools are great for suggestions, but they don’t understand business context or legal requirements.

Developers must still test, audit, and review all code before it goes live. Setting up a code review workflow where senior engineers double-check AI output helps ensure safety and accountability.

It’s also wise to document when and how AI tools are used in the development process. Keeping a record helps trace back the source of any future defects or legal problems and shows good faith in regulatory audits.

Finally, make sure that your team (or your outsourcing partner’s team) receives basic training in AI best practices. Developers should understand the limitations of AI suggestions, how to detect licensing risks, and why it’s important to validate code before shipping it.

FAQ

Q: Who owns the code generated by AI tools?

Ownership usually goes to the company commissioning the software, but only if that’s clearly stated in your agreement. The complication comes when AI tools generate code that resembles open-source material. If that content is under a license, and it’s not attributed properly, it could raise intellectual property issues. So, clear contracts and manual checks are key.

Q: Is AI-generated code safe to use as-is?

Not always. AI tools can accidentally reproduce licensed or copyrighted code, especially if they were trained on public codebases. While the suggestions are useful, they should be treated as starting points; developers still need to review, edit, and verify the code before it’s used.

Q: Is it safe to enter sensitive data into AI tools like ChatGPT?

Usually, no. Unless you’re using a private or enterprise version of the AI that guarantees data privacy, you shouldn’t enter any confidential or proprietary information. Public tools process data in the cloud, which can expose it to privacy risks and regulatory violations.

Q: What data protection laws should we consider?

This depends on where you operate and what kind of data you handle. In Europe, the GDPR requires transparency and a lawful basis, such as consent, when using personal data. In the U.S., HIPAA protects medical data, while CCPA in California gives users control over how their personal information is collected and deleted. If your AI tools touch sensitive data, they must comply with these regulations.

Q: Who is responsible if AI-generated code causes a problem?

Ultimately, the responsibility falls on the development team, not the AI tool. That means whether your team is in-house or outsourced, someone needs to validate the code before it goes live. AI can speed things up, but it can’t take responsibility for errors.

Q: How can we safely use AI tools in outsourced projects?

Start by putting everything in writing: your contracts should cover AI usage, IP ownership, and review processes. Only use trusted tools, avoid feeding in sensitive data, and make sure developers are trained to use AI responsibly. Most importantly, keep a human in the loop for quality assurance.

Q: Does SCAND use AI for software development?

Yes, but only if the client agrees. If public AI tools are permitted, we use Microsoft Copilot in VSCode and Cursor IDE, with models like ChatGPT 4o, Claude Sonnet, DeepSeek, and Qwen. If a client requests a private setup, we use local AI assistants in VSCode, Ollama, LM Studio, and llama.cpp, with everything stored on secure machines.

Q: Does SCAND use AI to test software?

Yes, but with permission from the client. We use AI tools like ChatGPT 4o and Qwen Vision for automated testing and Playwright and Selenium for browser testing. When required, we automatically generate unit tests using AI models in Copilot, Cursor, or locally available tools like Llama, DeepSeek, Qwen, and Starcoder.