Recent progress in AI largely boils down to one thing: Scale.

Around the beginning of this decade, AI labs noticed that making their algorithms, or models, ever bigger and feeding them more data consistently led to enormous improvements in what they could do and how well they did it. The latest crop of AI models have hundreds of billions to over a trillion internal network connections and learn to write or code like we do by consuming a healthy fraction of the internet.

It takes more computing power to train bigger algorithms. So, to get to this point, the computing dedicated to AI training has been quadrupling every year, according to the nonprofit AI research organization Epoch AI.

Should that growth continue through 2030, future AI models would be trained with 10,000 times more compute than today’s state-of-the-art algorithms, like OpenAI’s GPT-4.
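The 10,000x figure follows from compounding. As a rough check, and only a sketch that assumes a constant 4x annual growth factor (the function names here are illustrative, not from Epoch's report), the snippet below computes how many years of quadrupling it takes to reach a 10,000-fold increase.

```python
import math

def growth_multiplier(annual_factor: float, years: float) -> float:
    """Total scale-up after compounding `annual_factor` for `years` years."""
    return annual_factor ** years

def years_to_reach(target_multiplier: float, annual_factor: float) -> float:
    """Years of compounding needed to reach `target_multiplier`."""
    return math.log(target_multiplier) / math.log(annual_factor)

ANNUAL_FACTOR = 4.0   # training compute quadrupling every year (from the article)
TARGET = 10_000.0     # the 10,000x scale-up discussed in the article

years_needed = years_to_reach(TARGET, ANNUAL_FACTOR)
print(f"Years of 4x growth needed for a 10,000x scale-up: {years_needed:.1f}")

# Whole-year bounds on either side of the target.
for n in (6, 7):
    print(f"After {n} years: {growth_multiplier(ANNUAL_FACTOR, n):,.0f}x")
```

Under that assumption the answer comes out between six and seven years, which is roughly the gap between GPT-4 in 2023 and the end of the decade.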

“If pursued, we might see by the end of the decade advances in AI as drastic as the difference between the rudimentary text generation of GPT-2 in 2019 and the sophisticated problem-solving abilities of GPT-4 in 2023,” Epoch wrote in a recent research report assessing how feasible this scenario is.

But modern AI already sucks in a significant amount of power, tens of thousands of advanced chips, and trillions of online examples. Meanwhile, the industry has endured chip shortages, and studies suggest it may run out of quality training data. Assuming companies continue to invest in AI scaling: Is growth at this rate even technically possible?

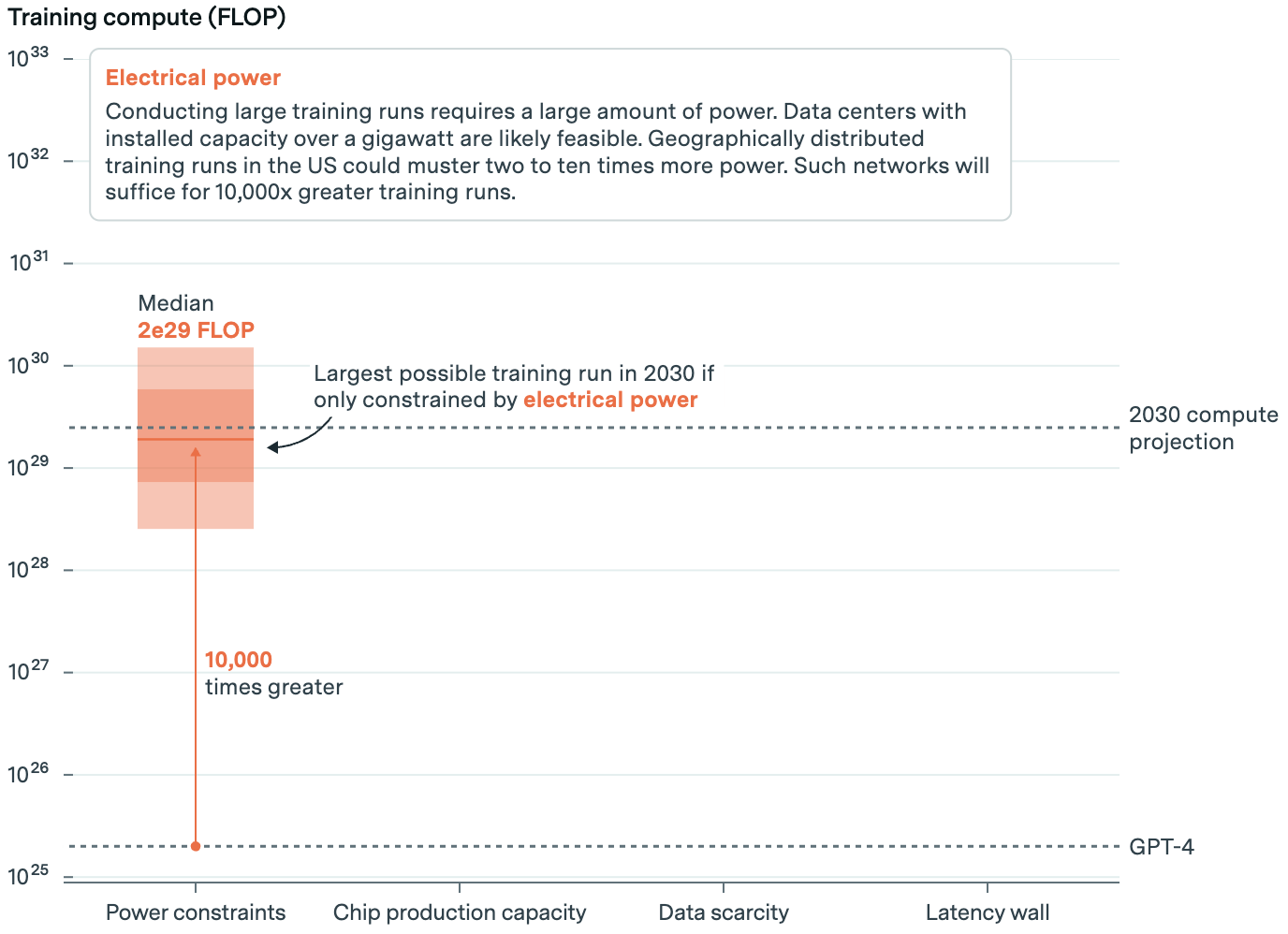

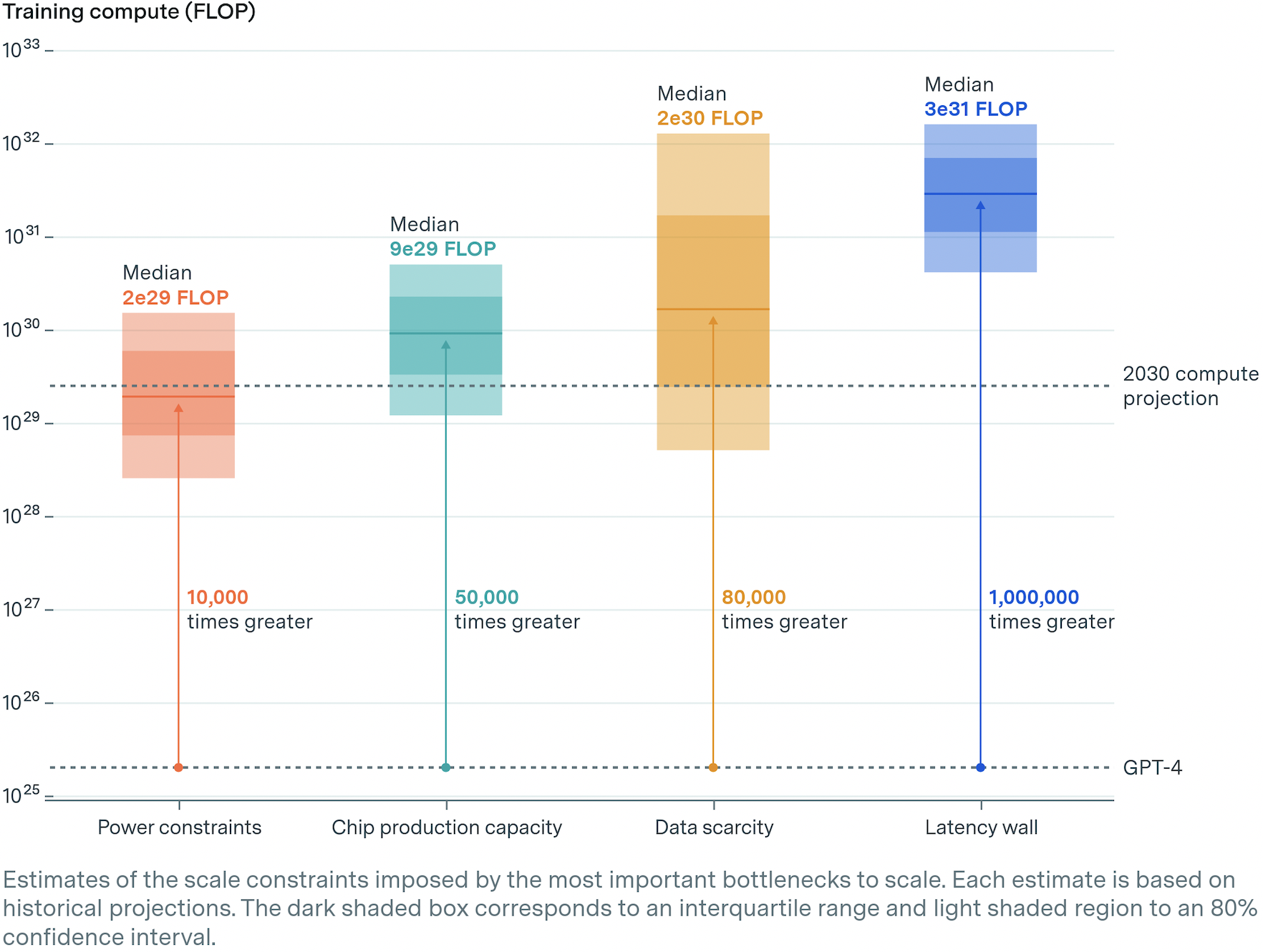

In its report, Epoch looked at four of the biggest constraints to AI scaling: Power, chips, data, and latency. TLDR: Maintaining growth is technically possible, but not certain. Here’s why.

Power: We’ll Need a Lot

Power is the biggest constraint to AI scaling. Warehouses packed with advanced chips and the gear to make them run (that is, data centers) are power hogs. Meta’s latest frontier model was trained on 16,000 of Nvidia’s most powerful chips drawing 27 megawatts of electricity.

This, according to Epoch, is equal to the annual power consumption of 23,000 US households. But even with efficiency gains, training a frontier AI model in 2030 would need 200 times more power, or roughly 6 gigawatts. That’s 30 percent of the power consumed by all data centers today.
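These figures can be sanity-checked with simple arithmetic. The sketch below uses only the numbers quoted above; the assumption that the 27 megawatts is drawn continuously for a full year, and all of the function and constant names, are illustrative rather than taken from Epoch's report.

```python
HOURS_PER_YEAR = 24 * 365

# Figures quoted in the article.
TRAINING_POWER_MW = 27.0          # power drawn by Meta's training cluster
HOUSEHOLDS = 23_000               # US households with equivalent annual use
POWER_SCALE_UP = 200              # extra power needed for a 2030 frontier run
QUOTED_2030_POWER_GW = 6.0        # the article's rounded 2030 figure
SHARE_OF_ALL_DATA_CENTERS = 0.30  # 6 GW as a share of today's data centers

def implied_household_kwh_per_year(power_mw: float, households: int) -> float:
    """Annual kWh per household if `power_mw` ran all year (an assumption)."""
    annual_kwh = power_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then kWh
    return annual_kwh / households

def scaled_power_gw(power_mw: float, factor: float) -> float:
    """Power in gigawatts after multiplying `power_mw` by `factor`."""
    return power_mw * factor / 1_000

def implied_total_data_center_gw(power_gw: float, share: float) -> float:
    """Total data center power implied by `power_gw` being `share` of it."""
    return power_gw / share

print(f"{implied_household_kwh_per_year(TRAINING_POWER_MW, HOUSEHOLDS):,.0f} kWh per household per year")
print(f"{scaled_power_gw(TRAINING_POWER_MW, POWER_SCALE_UP):.1f} GW for a 200x scale-up")
print(f"{implied_total_data_center_gw(QUOTED_2030_POWER_GW, SHARE_OF_ALL_DATA_CENTERS):.0f} GW implied for all data centers")
```

Multiplying 27 megawatts by 200 gives 5.4 gigawatts, so the "roughly 6 gigawatts" in the text reads as a rounded figure. Taken at face value, the 30 percent share implies about 20 gigawatts for all data centers today.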

There are few power plants that can muster that much, and most are likely under long-term contract. But that’s assuming one power station would electrify a data center. Epoch suggests companies will seek out areas where they can draw from multiple power plants via the local grid. Accounting for planned utilities growth, going this route is tight but possible.

To better break the bottleneck, companies may instead distribute training between several data centers. Here, they would split batches of training data between a number of geographically separate data centers, lessening the power requirements of any one. The strategy would require lightning-quick, high-bandwidth fiber connections. But it’s technically doable, and Google Gemini Ultra’s training run is an early example.

All told, Epoch suggests a range of possibilities from 1 gigawatt (local power sources) all the way up to 45 gigawatts (distributed power sources). The more power companies tap, the larger the models they can train. Given power constraints, a model could be trained using about 10,000 times more computing power than GPT-4.

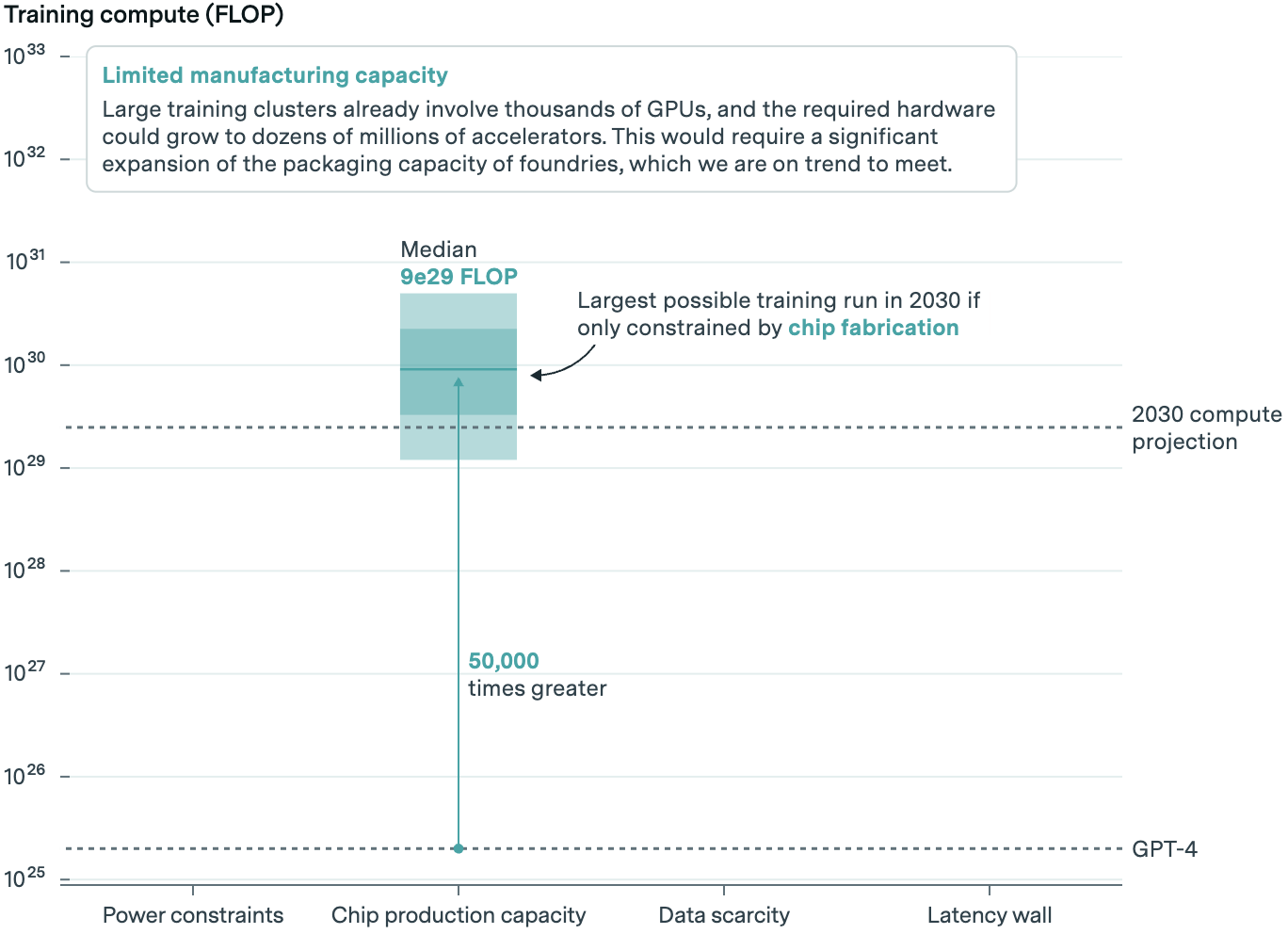

Chips: Does It Compute?

All that power is used to run AI chips. Some of these serve up completed AI models to customers; some train the next crop of models. Epoch took a close look at the latter.

AI labs train new models using graphics processing units, or GPUs, and Nvidia is top dog in GPUs. TSMC manufactures these chips and sandwiches them together with high-bandwidth memory. Forecasting has to take all three steps into account. According to Epoch, there’s likely spare capacity in GPU production, but memory and packaging may hold things back.

Given projected industry growth in production capacity, they think between 20 and 400 million AI chips may be available for AI training in 2030. Some of these will be serving up existing models, and AI labs will only be able to buy a fraction of the whole.

The wide range is indicative of a good amount of uncertainty in the model. But given expected chip capacity, they believe a model could be trained on some 50,000 times more computing power than GPT-4.

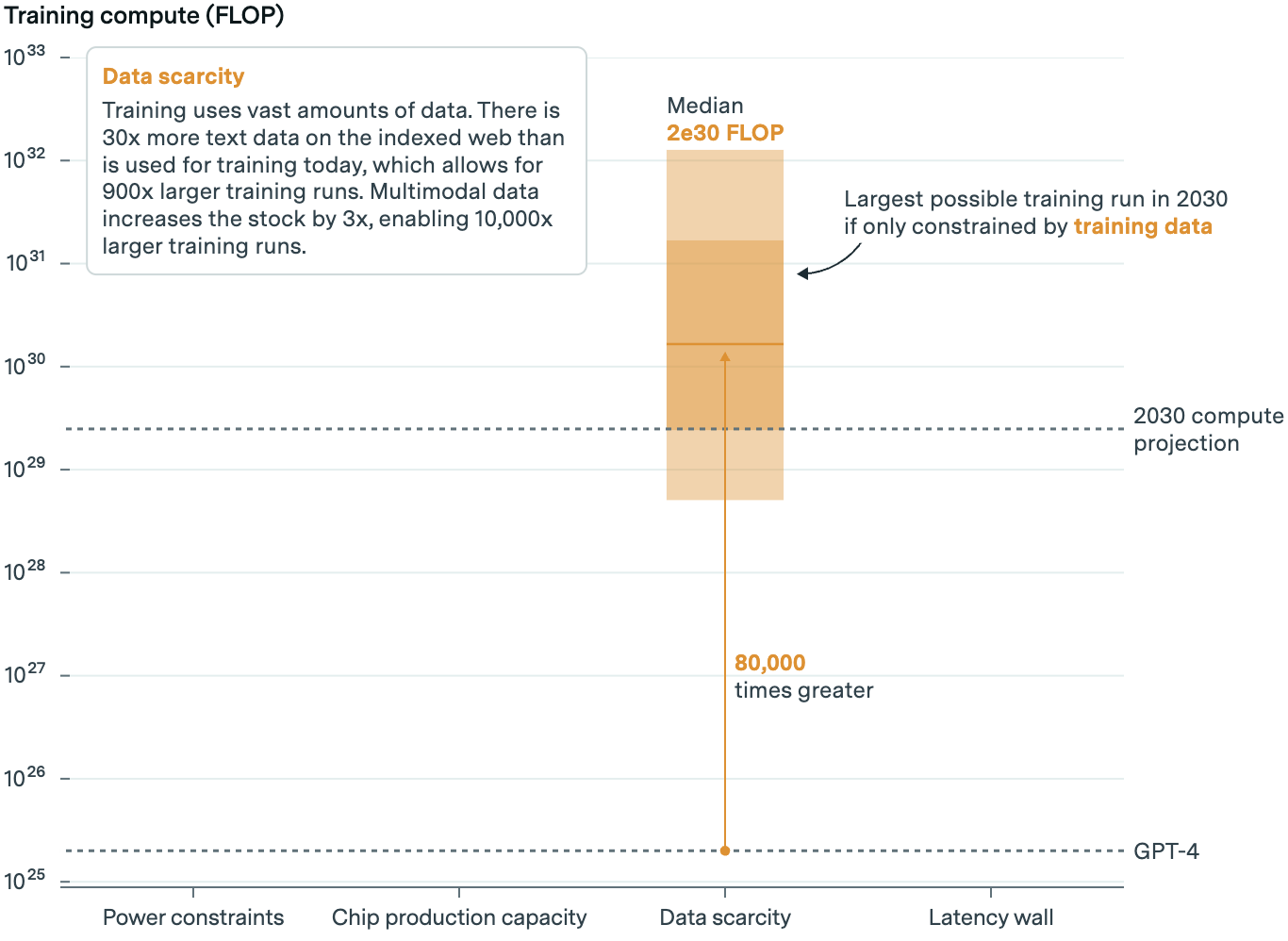

Data: AI’s Online Education

AI’s hunger for data, and the impending scarcity of that data, is a well-known constraint. Some forecast the stream of high-quality, publicly available data will run out by 2026. But Epoch doesn’t think data scarcity will curtail the growth of models through at least 2030.

At today’s growth rate, they write, AI labs will run out of quality text data in five years. Copyright lawsuits may also impact supply. Epoch believes this adds uncertainty to their model. But even if courts decide in favor of copyright holders, complexity in enforcement and licensing deals like those pursued by Vox Media, Time, The Atlantic and others mean the impact on supply will be limited (though the quality of sources may suffer).

But crucially, models now consume more than just text in training. Google’s Gemini was trained on image, audio, and video data, for example.

Non-text data can add to the supply of text data by way of captions and transcripts. It can also expand a model’s abilities, like recognizing the foods in an image of your fridge and suggesting dinner. It may even, more speculatively, result in transfer learning, where models trained on multiple data types outperform those trained on just one.

There’s also evidence, Epoch says, that synthetic data could further grow the data haul, though by how much is unclear. DeepMind has long used synthetic data in its reinforcement learning algorithms, and Meta employed some synthetic data to train its latest AI models. But there may be hard limits to how much can be used without degrading model quality. And generating it would take even more costly computing power.

All told, though, including text, non-text, and synthetic data, Epoch estimates there’ll be enough to train AI models with 80,000 times more computing power than GPT-4.

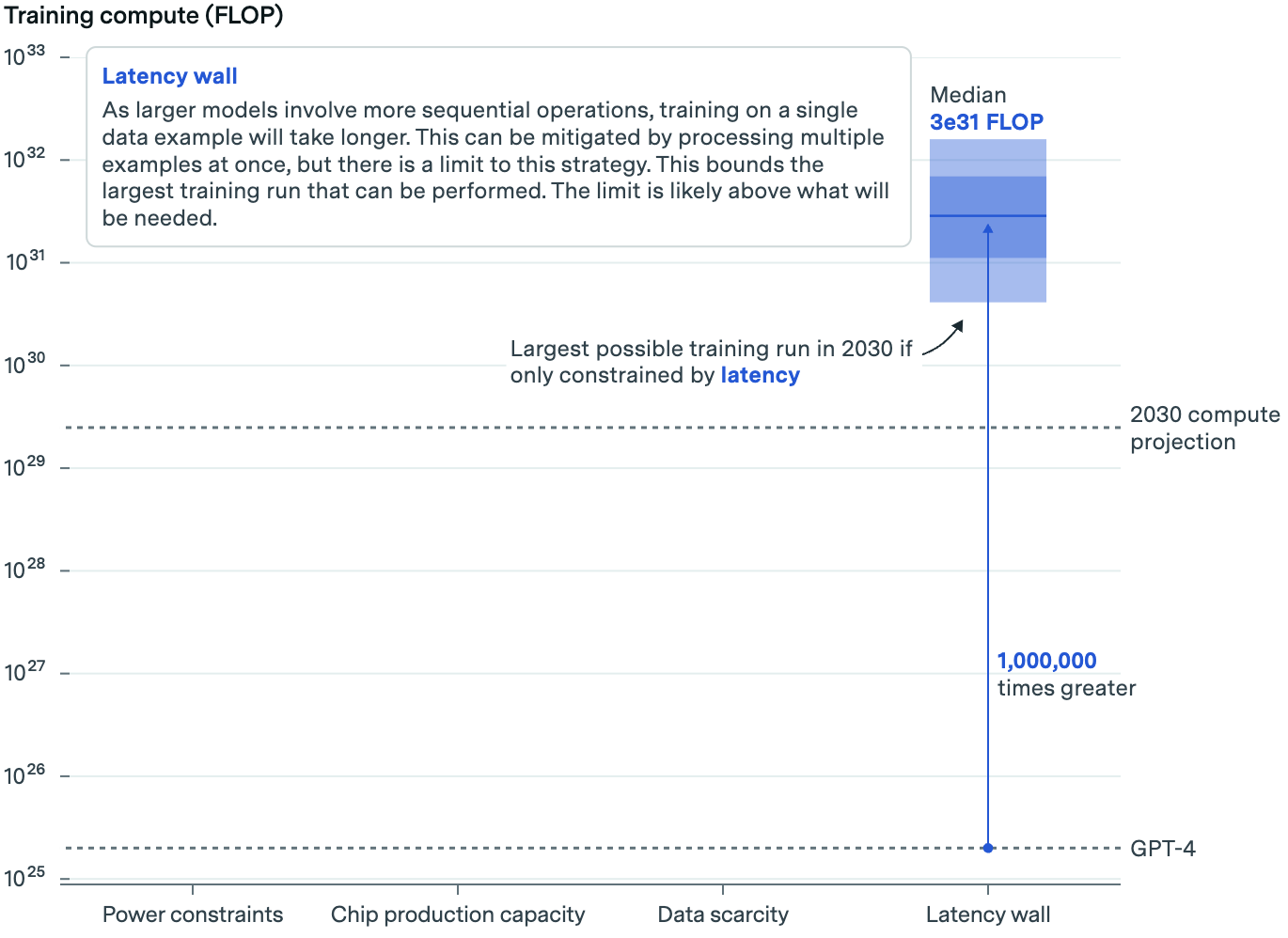

Latency: Bigger Is Slower

The last constraint is related to the sheer size of upcoming algorithms. The bigger the algorithm, the longer it takes for data to traverse its network of artificial neurons. This could mean the time it takes to train new algorithms becomes impractical.

This bit gets technical. In short, Epoch takes a look at the potential size of future models, the size of the batches of training data processed in parallel, and the time it takes for that data to be processed within and between servers in an AI data center. This yields an estimate of how long it would take to train a model of a certain size.

The main takeaway: Training AI models with today’s setup will hit a ceiling eventually, but not for a while. Epoch estimates that, under current practices, we could train AI models with upwards of 1,000,000 times more computing power than GPT-4.

Scaling Up 10,000x

You’ll have noticed the scale of possible AI models gets larger under each constraint; that is, the ceiling is higher for chips than power, for data than chips, and so on. But if we consider all of them together, models will only be possible up to the first bottleneck encountered, and in this case, that’s power. Even so, significant scaling is technically possible.

“When considered together, [these AI bottlenecks] imply that training runs of up to 2e29 FLOP would be feasible by the end of the decade,” Epoch writes.

“This would represent a roughly 10,000-fold scale-up relative to current models, and it would mean that the historical trend of scaling could continue uninterrupted until 2030.”
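The bottleneck logic amounts to taking the minimum across the four ceilings. The sketch below uses the scale-up figures quoted in each section of this article; the dictionary and function names are illustrative, and the baseline it derives is only what the two quoted numbers imply together, not a figure stated in the text.

```python
# Scale-up ceilings relative to GPT-4, as quoted in the sections above.
CEILINGS = {
    "power": 10_000,
    "chips": 50_000,
    "data": 80_000,
    "latency": 1_000_000,
}

FEASIBLE_FLOP = 2e29  # training run size Epoch calls feasible by 2030

def binding_constraint(ceilings: dict[str, float]) -> tuple[str, float]:
    """Return the constraint with the lowest ceiling and that ceiling."""
    name = min(ceilings, key=ceilings.get)
    return name, ceilings[name]

name, multiplier = binding_constraint(CEILINGS)
print(f"Binding constraint: {name} at {multiplier:,}x")

# Baseline implied by dividing the feasible run by the binding scale-up.
implied_baseline_flop = FEASIBLE_FLOP / multiplier
print(f"Implied current-model baseline: {implied_baseline_flop:.0e} FLOP")
```

The minimum lands on power at 10,000x, matching the quote, and dividing 2e29 FLOP by that factor implies a current-model baseline on the order of 2e25 FLOP.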

What Have You Done for Me Lately?

While all this suggests continued scaling is technically possible, it also makes a basic assumption: That AI investment will grow as needed to fund scaling and that scaling will continue to yield impressive and, more importantly, useful advances.

For now, there’s every indication tech companies will keep investing historic amounts of cash. Driven by AI, spending on the likes of new equipment and real estate has already jumped to levels not seen in years.

“When you go through a curve like this, the risk of underinvesting is dramatically greater than the risk of overinvesting,” Alphabet CEO Sundar Pichai said on last quarter’s earnings call as justification.

But spending will need to grow even more. Anthropic CEO Dario Amodei estimates models trained today can cost up to $1 billion, next year’s models may near $10 billion, and costs per model could hit $100 billion in the years thereafter. That’s a dizzying number, but it’s a price tag companies may be willing to pay. Microsoft is already reportedly committing that much to its Stargate AI supercomputer, a joint project with OpenAI due out in 2028.

It goes without saying that the appetite to invest tens or hundreds of billions of dollars (more than the GDP of many countries and a significant fraction of current annual revenues of tech’s biggest players) isn’t guaranteed. As the shine wears off, whether AI growth is sustained may come down to a question of, “What have you done for me lately?”

Already, investors are checking the bottom line. Today, the amount invested dwarfs the amount returned. To justify greater spending, businesses will have to show proof that scaling continues to produce more and more capable AI models. That means there’s increasing pressure on upcoming models to go beyond incremental improvements. If gains tail off or enough people aren’t willing to pay for AI products, the story may change.

Also, some critics believe large language and multimodal models will prove to be a pricey dead end. And there’s always the chance a breakthrough, like the one that kicked off this round, shows we can accomplish more with less. Our brains learn continuously on a light bulb’s worth of energy and nowhere near an internet’s worth of data.

That said, if the current approach “can automate a substantial portion of economic tasks,” the financial return could number in the trillions of dollars, more than justifying the spend, according to Epoch. Many in the industry are willing to take that bet. No one knows how it’ll shake out yet.

Image Credit: Werclive 👹 / Unsplash