Motivation

3D reconstruction is a long-standing problem in computer vision, with applications in robotics, VR and multimedia. It is notoriously hard because it requires lifting 2D images to 3D space in a dense, accurate manner.

Gaussian splatting (GS) [1] represents 3D scenes using volumetric splats, which can capture detailed geometry and appearance information. It has become quite popular due to its relatively fast training and inference speeds and its high-quality reconstruction.

GS-based reconstructions generally consist of millions of Gaussians, which makes them hard to use on computationally constrained devices such as smartphones. Our goal is to reduce the storage and computational inefficiency of GS methods. Therefore, we propose Trick-GS, a set of optimizations that enhance the efficiency of Gaussian Splatting without significantly compromising rendering quality.

Background

GS is a technique for representing 3D scenes using volumetric splats, which can capture detailed geometry and appearance information.

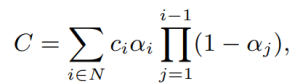

GS reconstructs a scene by fitting a collection of 3D Gaussian primitives, which can be efficiently rendered in a differentiable volume splatting rasterizer by extending EWA volume splatting [2]. A scene represented with 3DGS typically consists of hundreds of thousands to millions of Gaussians, where each 3D Gaussian primitive consists of 59 parameters. The technique rasterizes Gaussians from 3D to 2D and optimizes the Gaussian parameters, where the 3D covariance matrix of each Gaussian is parameterized using a scaling matrix and a rotation matrix. Each Gaussian primitive also has an opacity (α ∈ [0, 1]), a diffuse color and a set of spherical harmonics (SH) coefficients, typically consisting of 3 bands, to represent view-dependent colors. The color C of each pixel in the image plane is then determined by the Gaussians contributing to that pixel, blending their colors in sorted order.

GS initializes the scene and its Gaussians with point clouds from SfM-based methods such as Colmap. The Gaussians are then optimized, and the scene structure is changed by removing, keeping or adding Gaussians based on their gradients during the optimization stage. GS adds Gaussians to the scene greedily, which makes the approach inefficient in terms of storage and computation time during training.
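The parameterization described above can be illustrated with a minimal NumPy sketch. It assumes the usual 3DGS layout (covariance built as R S Sᵀ Rᵀ from a scale vector and a unit quaternion, front-to-back alpha blending of depth-sorted contributions); the function names are hypothetical and the per-pixel alphas are taken as given rather than computed by a rasterizer.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def covariance(scale, quat):
    """Sigma = R S S^T R^T, symmetric positive semi-definite by construction."""
    R = quat_to_rotmat(np.asarray(quat, dtype=float))
    S = np.diag(np.asarray(scale, dtype=float))
    return R @ S @ S.T @ R.T

def blend(colors, alphas):
    """Front-to-back alpha blending of depth-sorted contributions to one pixel."""
    pixel = np.zeros(3)
    transmittance = 1.0
    for color, alpha in zip(colors, alphas):
        pixel += transmittance * alpha * np.asarray(color, dtype=float)
        transmittance *= 1.0 - alpha
    return pixel

# Assumed breakdown of the 59 parameters per Gaussian:
# 3 position + 3 scale + 4 rotation + 1 opacity + 3 diffuse color + 45 SH.
PARAMS_PER_GAUSSIAN = 3 + 3 + 4 + 1 + 3 + 45
```

With an identity rotation, `covariance([1, 2, 3], [1, 0, 0, 0])` is simply the diagonal matrix of squared scales, which is a quick way to sanity-check the construction.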

Tricks for Learning Efficient 3DGS Representations

We adopt several strategies to overcome the inefficiency of representing scenes with millions of Gaussians. These strategies work in harmony with each other and improve efficiency in different respects. We group them into four categories:

a) Pruning Gaussians; reducing the number of Gaussians by pruning.

b) SH masking; learning to mask less useful Gaussian parameters to lower the storage requirements.

c) Progressive training strategies; changing the input representation gradually during training.

d) Accelerated implementation; optimizing the implementation itself.

a) Pruning Gaussians:

a.1) Volume Masking

Gaussians with low scale tend to have minimal impact on the overall quality, so we adopt a strategy that learns to mask and remove such Gaussians. $N$ binary masks, $M \in \{0, 1\}^N$, are learned for $N$ Gaussians and applied to their opacities, $\alpha \in [0, 1]^N$, and non-negative scale attributes, $s$, by introducing a mask parameter, $m \in \mathbb{R}^N$. The learned masks are then thresholded to generate hard masks $M$;

![]()

The masked Gaussians are pruned during the densification stage, and also at every $k_m$ iterations after the densification stage.

a.2) Significance of Gaussians
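The masking step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the sigmoid squashing, the threshold `eps` and the function names are hypothetical, and the gradient handling a real training loop would need (for example a straight-through estimator) is not shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def hard_mask(m, eps=0.01):
    """Threshold soft masks sigmoid(m) into binary masks M in {0, 1}.

    eps is an assumed threshold; the source does not state its value.
    """
    return (sigmoid(m) > eps).astype(np.float64)

def apply_mask(m, opacity, scale, eps=0.01):
    """Apply the hard mask to opacity (N,) and scale (N, 3)."""
    M = hard_mask(m, eps)
    return M * opacity, M[:, None] * scale, M

def prune(M, *attributes):
    """Drop every Gaussian whose hard mask is zero."""
    keep = M.astype(bool)
    return tuple(a[keep] for a in attributes)
```

A masked Gaussian has zero opacity and zero scale, so it contributes nothing to the rendering before it is physically removed by `prune`.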

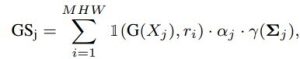

The significance score calculation aims to find Gaussians that have little or no overall impact. We adopt a strategy where the impact of a Gaussian is determined by how often it is hit by a ray. More concretely, the so-called significance score $GS_j$ is calculated with $\mathbf{1}(G(X_j), r_i)$, where $\mathbf{1}(\cdot)$ is the indicator function, $r_i$ is ray $i$ for every ray in the training set and $X_j$ is the number of times Gaussian $j$ hits $r_i$.

Here $j$ is the Gaussian index, $i$ is the pixel, $\gamma(\Sigma_j)$ is the Gaussian's volume, and $M$, $H$ and $W$ represent the number of training views, image height and image width, respectively. Since this is a costly operation, we apply this pruning $k_{sg}$ times during training, with a decay factor that takes into account the percentile removed in the previous round.
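A rough sketch of significance-based pruning is given below. It assumes the score is the per-Gaussian ray hit count weighted by the Gaussian's volume; the exact formula is not reproduced in this text, so that weighting, the geometric decay schedule and all function names are assumptions for illustration only.

```python
import numpy as np

def significance_scores(hit_counts, volumes):
    """Assumed score: number of ray hits weighted by the Gaussian's volume."""
    return np.asarray(hit_counts, dtype=float) * np.asarray(volumes, dtype=float)

def keep_mask(scores, percentile):
    """Keep Gaussians whose score lies above the given percentile."""
    threshold = np.percentile(scores, percentile)
    return scores > threshold

def pruning_percentiles(initial, decay, rounds):
    """Hypothetical schedule: the percentile removed shrinks each round."""
    return [initial * decay ** k for k in range(rounds)]
```

For example, `pruning_percentiles(60, 0.5, 3)` removes progressively smaller fractions in later rounds, which reflects the idea that most of the redundant Gaussians are already gone after the first pass.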

b) Spherical Harmonic (SH) Masking

SHs are used to represent the view-dependent color of a Gaussian. However, not all Gaussians exhibit the same degree of color variation across views, which provides a further pruning opportunity. We adopt a strategy where SH bands are pruned based on a mask learned during training, and unnecessary bands are removed after training is complete. Specifically, each Gaussian learns a mask per SH band. SH masks are calculated as in the following equation, and the SH values of the $i$th Gaussian for the corresponding SH band, $l$, are set to zero if its hard mask value, $M_i^{sh}(l)$, is zero and left unchanged otherwise.

![]()

where $m_i^l \in (0, 1)$ and $M_i^{sh}(l) \in \{0, 1\}$. Finally, each masked view-dependent color is defined as $\hat{c}_i(l) = M_i^{sh}(l)\, c_i(l)$, where $c_i(l) \in \mathbb{R}^{(2l+1) \times 3}$. The masking loss for each SH degree is weighted by its number of coefficients, since the number of SH coefficients differs per band.

c) Progressive training strategies

Progressive training of Gaussians refers to starting from a coarser, less detailed image representation and gradually changing the representation back to the original image. These strategies work as a regularization scheme.
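The per-band masking can be sketched in NumPy as below. The layout of the higher-order coefficients as an `(N, 15, 3)` array (3 + 5 + 7 coefficients for bands 1 to 3, times 3 color channels), the threshold `eps`, the mean reduction in the loss and the function names are all assumptions made for this illustration.

```python
import numpy as np

# 2l + 1 coefficients per band l (per color channel); 3 + 5 + 7 = 15.
BAND_SIZES = {1: 3, 2: 5, 3: 7}

def split_bands(sh_rest):
    """Split (N, 15, 3) higher-order SH coefficients into bands l = 1, 2, 3."""
    bands, start = {}, 0
    for l, size in BAND_SIZES.items():
        bands[l] = sh_rest[:, start:start + size, :]
        start += size
    return bands

def mask_sh(sh_rest, soft_masks, eps=0.01):
    """Zero out whole SH bands per Gaussian.

    soft_masks has shape (N, 3) with values in (0, 1), one per band.
    """
    hard = (soft_masks > eps).astype(sh_rest.dtype)
    masked = [
        hard[:, idx, None, None] * band
        for idx, band in enumerate(split_bands(sh_rest).values())
    ]
    return np.concatenate(masked, axis=1), hard

def masking_loss(soft_masks):
    """Penalize active masks, weighting each band by its coefficient count."""
    weights = np.array([BAND_SIZES[l] for l in (1, 2, 3)], dtype=float)
    return float(np.mean(soft_masks * weights))
```

Weighting by `2l + 1` means that switching off band 3 is rewarded more than switching off band 1, in line with the larger storage saving it brings.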

c.1) By blurring

Gaussian blurring is used to change the level of detail in an image. The kernel size is progressively lowered at every $k_b$ iterations based on a decay factor. This strategy helps to remove floating artifacts caused by the sub-optimal initialization of Gaussians and serves as a regularizer that helps convergence to a better local minimum. It also significantly affects training time, since a coarser scene representation requires fewer Gaussians.
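One way such a schedule could look is sketched below. The initial kernel size, the interval `k_b`, the decay factor and the rule that keeps kernel sizes odd are illustrative assumptions, not values taken from the source.

```python
def blur_kernel_size(iteration, initial_size=9, k_b=1000, decay=0.5):
    """Kernel size for the blur applied to training images at this iteration.

    The size shrinks by `decay` every `k_b` iterations; a size of 1 means
    the image is used unblurred. All default values are hypothetical.
    """
    steps = iteration // k_b
    size = int(initial_size * decay ** steps)
    if size <= 1:
        return 1
    # Blur kernels are conventionally odd so that they have a center pixel.
    return size if size % 2 == 1 else size + 1
```

With these defaults the kernel shrinks from 9 to 5 to 3 and then disappears after 3000 iterations, after which training sees the original images.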

c.2) By resolution

Another strategy to mitigate over-fitting on the training data is to start with smaller images and progressively increase the image resolution during training, which helps the model learn broader global information first. This approach particularly helps to learn finer-grained details for pixels behind foreground objects.
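A resolution schedule of this kind could be sketched as follows. The linear ramp, the starting scale and the ramp length are assumptions chosen for illustration; the source does not specify the schedule.

```python
def resolution_scale(iteration, start_scale=0.25, ramp_iters=10_000):
    """Fraction of the full resolution used at this iteration (linear ramp)."""
    if iteration >= ramp_iters:
        return 1.0
    return start_scale + (1.0 - start_scale) * iteration / ramp_iters

def target_size(height, width, scale):
    """Image size to render and supervise at, never smaller than 1x1."""
    return max(1, round(height * scale)), max(1, round(width * scale))
```

For an 800×1200 training image, this starts at `target_size(800, 1200, resolution_scale(0))`, that is 200×300, and reaches the full size once the ramp ends.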

c.3) By scales of Gaussians

Another strategy is to focus on low-frequency details during the early stages of training by controlling the low-pass filter in the rasterization stage. Some Gaussians might become smaller than a single pixel when projected from 3D to 2D, which results in artifacts. Therefore, a small value is added to the diagonal elements of each Gaussian's covariance matrix to constrain its scale. We progressively change this value during optimization, which controls the minimum area that each Gaussian covers in screen space. Using a larger scale at the beginning of the optimization lets Gaussians receive gradients from a wider area, so the coarse structure of the scene is learned efficiently.
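The effect of the diagonal term can be illustrated with a short sketch. It assumes the value is added to the projected 2D covariance and decays geometrically from a large starting value to a small final one; the start value, schedule length and function names are hypothetical (0.3 is used as the final value because that is the fixed constant in the reference 3DGS rasterizer).

```python
import numpy as np

def dilation(iteration, start=2.0, end=0.3, total_iters=5000):
    """Value added to the covariance diagonal, decayed geometrically."""
    t = min(iteration / total_iters, 1.0)
    return start * (end / start) ** t

def dilate_cov2d(cov2d, value):
    """Low-pass filter: add `value` to the diagonal of a 2x2 covariance."""
    return cov2d + value * np.eye(2)

def screen_area(cov2d):
    """Area of the 1-sigma ellipse of a 2D Gaussian, pi * sqrt(det(cov))."""
    return np.pi * np.sqrt(np.linalg.det(cov2d))
```

A Gaussian with a near-zero projected covariance still covers a non-trivial screen area after dilation, and a larger early value makes that footprint wider so that more pixels contribute gradients.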

d) Accelerated Implementation

We adopt a strategy that focuses on training-time efficiency. We follow prior work in separating the higher SH bands from the 0th band within the rasterizer, which lowers the number of updates for the higher SH bands relative to the diffuse colors. The SH bands (45 dims) account for a large proportion of these updates, even though they are only used to represent view-dependent color variations. By modifying the rasterizer to separate SH bands from the diffuse color, we update the higher SH bands every 16 iterations, while diffuse colors are updated at every iteration.

GS uses photometric and structural losses for optimization, and the structural loss calculation is costly. We therefore compute the SSIM loss with optimized CUDA kernels. SSIM is by default configured to use 11×11 Gaussian kernel convolutions, whereas an optimized version is obtained by replacing the larger 2D kernel with two smaller 1D Gaussian kernels. Applying fewer updates to the higher SH bands and optimizing the SSIM loss calculation have a negligible impact on accuracy, while significantly speeding up training, as shown by our experiments.
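The update schedule can be sketched as a simple rule for which parameter groups are stepped at a given iteration. The group names and the choice to update on iterations divisible by the interval are assumptions for illustration; the actual change lives inside the rasterizer and optimizer.

```python
def groups_to_update(iteration, sh_interval=16):
    """Parameter groups stepped at this iteration (group names are hypothetical)."""
    groups = ["position", "scale", "rotation", "opacity", "diffuse_color"]
    if iteration % sh_interval == 0:
        groups.append("sh_rest")  # the 45 higher-order SH dims
    return groups

def sh_update_count(total_iters, sh_interval=16):
    """Number of higher-order SH updates over a full training run."""
    return sum(
        "sh_rest" in groups_to_update(it, sh_interval)
        for it in range(1, total_iters + 1)
    )
```

Over a 30K-iteration run this gives 1875 updates of the higher SH bands instead of 30000, while every other group is still updated at each step.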
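The kernel replacement relies on the Gaussian window being separable: an 11×11 kernel that is the outer product of a 1D kernel with itself gives the same result as filtering rows and then columns with the 1D kernel. The NumPy sketch below checks this on the CPU; it is not the CUDA implementation, and the sigma of 1.5 is the value commonly used for SSIM windows rather than one stated in the source.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gaussian_1d(size=11, sigma=1.5):
    """Normalized 1D Gaussian window."""
    x = np.arange(size) - (size - 1) / 2
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    return g / g.sum()

def filter_2d(img, kernel):
    """Valid-mode 2D filtering: k*k multiply-adds per output pixel."""
    windows = sliding_window_view(img, kernel.shape)  # (H-k+1, W-k+1, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def filter_separable(img, g):
    """Same result with two 1D passes: about 2*k multiply-adds per pixel."""
    rows = sliding_window_view(img, g.size, axis=1) @ g     # filter along width
    return sliding_window_view(rows, g.size, axis=0) @ g    # then along height
```

For an 11×11 window the per-pixel cost drops from 121 to 22 multiply-adds, which is where the speed-up comes from.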

Experimental Results

We follow the established evaluation setup on real-world scenes. Thirteen scenes from bounded and unbounded indoor/outdoor scenarios are used: nine from Mip-NeRF 360, two (truck and train) from Tanks&Temples and two (DrJohnson and Playroom) from the Deep Blending dataset. SfM points and camera poses are used as provided by the authors [1], and every 8th image in each dataset is used for testing. Models are trained for 30K iterations, and PSNR, SSIM and LPIPS are used for evaluation. We save the Gaussian parameters in 16-bit precision to save extra disk space, as we do not observe any accuracy drop compared to 32-bit precision.

Performance Evaluation
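The split and the storage saving from 16-bit precision can be sketched as follows. Which image within each group of eight is held out is an assumption here (index divisible by 8), and the storage figure is a raw uncompressed estimate that ignores file headers, pruning and SH masking.

```python
import numpy as np

def split_views(num_images, hold=8):
    """Hold out every `hold`-th image for testing (assumed: index % hold == 0)."""
    test = [i for i in range(num_images) if i % hold == 0]
    train = [i for i in range(num_images) if i % hold != 0]
    return train, test

def raw_storage_mb(num_gaussians, params=59, dtype=np.float32):
    """Uncompressed parameter storage in megabytes (10^6 bytes)."""
    return num_gaussians * params * np.dtype(dtype).itemsize / 1e6
```

For one million Gaussians with 59 parameters each, `raw_storage_mb` gives 236 MB in 32-bit floats and 118 MB in 16-bit floats, so halving the precision halves the raw footprint before any other trick is applied.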

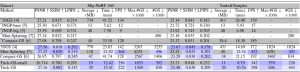

Our most efficient model, Trick-GS-small, improves over vanilla 3DGS by compressing the model size drastically (23×) and improving the training time and FPS by 1.7× and 2×, respectively, on three datasets. However, this results in a slight loss of accuracy, so we use late densification and progressive scale-based training in our most accurate model, Trick-GS, which is still more efficient than the other methods without sacrificing accuracy.

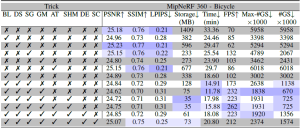

Table 1. Quantitative evaluation on MipNeRF 360 and Tanks&Temples datasets. Results for method names marked with '∗' are taken from the corresponding papers. Results between the double horizontal lines are from retraining the models on our system. We color the results ranked 1st, 2nd and 3rd with cells going from solid to transparent for each column. Trick-GS can reconstruct scenes with much lower training time and disk space requirements without sacrificing accuracy.

Trick-GS improves PSNR by 0.2dB on average while losing 50% on storage space and 15% on training time compared to Trick-GS-small. The reduction in efficiency with Trick-GS is due to the use of progressive scale-based training and late densification, which compensate for the loss from pruning false-positive Gaussians. We tested an existing post-processing step, which helps to further reduce the model size to as low as 6MB and 12MB for Trick-GS-small and Trick-GS, respectively, on the MipNeRF 360 dataset. The post-processing does not heavily affect the training time, but the accuracy drop in PSNR is 0.33dB, which is undesirable for our method. Thanks to our choice of tricks, Trick-GS learns models as small as 10MB for some outdoor scenes while keeping the training time around 10 minutes for most scenes.

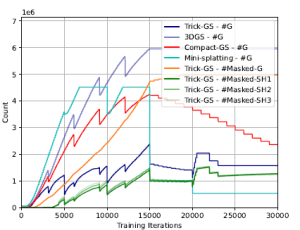

Figure 1. Number of Gaussians (#G) during training (on the MipNeRF 360 bicycle scene) for all methods, number of masked Gaussians (#Masked-G) and number of Gaussians with a masked SH band for our method. Our method performs a balanced reconstruction in terms of training efficiency by not letting the number of Gaussians increase drastically during training as other methods do, which is a desirable property for end devices with low memory.

Learning to mask SH bands helps our approach to lower the storage space requirements. Trick-GS lowers the storage requirements for each scene across the three datasets, even though it might result in more Gaussians than Mini-Splatting for some scenes. Our method improves some accuracy metrics over 3DGS, while the changes in accuracy are negligible. The advantage of our method is that it requires 23× less storage and 1.7× less training time than 3DGS. Our approach achieves this performance without increasing the maximum number of Gaussians as high as the compared methods. Fig. 1 shows the change in the number of Gaussians during training and an analysis of the number of pruned Gaussians based on the learned masks. Trick-GS achieves a comparable accuracy level while using 4.5× fewer Gaussians than Mini-Splatting and 2× fewer Gaussians than Compact-GS, which is important for the maximum GPU consumption on end devices. Fig. 2 shows the qualitative impact of our progressive training strategies. Trick-GS obtains structurally more consistent reconstructions of tree branches thanks to the progressive training.

Figure 2. Impact of progressive training strategies on challenging background reconstructions. We empirically found that progressive training strategies such as downsampling, adding Gaussian noise and changing the scale of learned Gaussians have a significant impact on background objects with holes, such as tree branches.

Ablation Study

We evaluate the contribution of each trick in Tab. 2 on the MipNeRF 360 bicycle scene. Our tricks mutually benefit from each other to enable on-device learning. While Gaussian blurring helps to prune almost half of the Gaussians compared to 3DGS with a negligible accuracy loss, downsampling the image resolution helps to focus on the details through progressive training, and hence their combination lowers the training time and the Gaussian count by half. The significance-score-based pruning strategy improves the storage space the most among the tricks, while the Gaussian masking strategy results in a lower number of Gaussians both at the peak and at the end of learning. Enabling progressive Gaussian-scale-based training also helps to improve the accuracy, thanks to the higher number of Gaussians produced by the introduced split strategy.

Table 2. Ablation study on the tricks adopted by our approach using the 'bicycle' scene. Our tricks are abbreviated as BL: progressive Gaussian blurring, DS: progressive downsampling, SG: significance pruning, GM: Gaussian masking, SHM: SH masking, AT: accelerated training, DE: late densification, SC: progressive scaling. Our full model Trick-GS uses all the tricks, while Trick-GS-small uses all but DE and SC.

Summary and Future Directions

We have proposed a mixture of strategies adopted from the literature to obtain compact 3DGS representations. We have carefully designed and chosen these strategies and shown competitive experimental results. Our approach reduces the training time for 3DGS by 1.7× and the storage requirement by 23×, and increases the FPS by 2×, while keeping the quality competitive with the baselines. The advantage of our method is that it is easily tunable with respect to application and device needs, and it can be further improved with a post-processing stage from the literature, e.g. codebook learning or Huffman encoding. We believe a dynamic and compact learning system is needed based on device requirements, and we therefore leave automating such systems for future work.