In AI-driven applications, complex tasks often need to be broken down into multiple subtasks. However, in many real-world scenarios the exact subtasks cannot be determined in advance. For instance, in automated code generation, the number of files to be modified and the specific changes needed depend entirely on the given request. Traditional parallelized workflows struggle with this unpredictability because they require tasks to be predefined upfront. This rigidity limits the adaptability of AI systems.

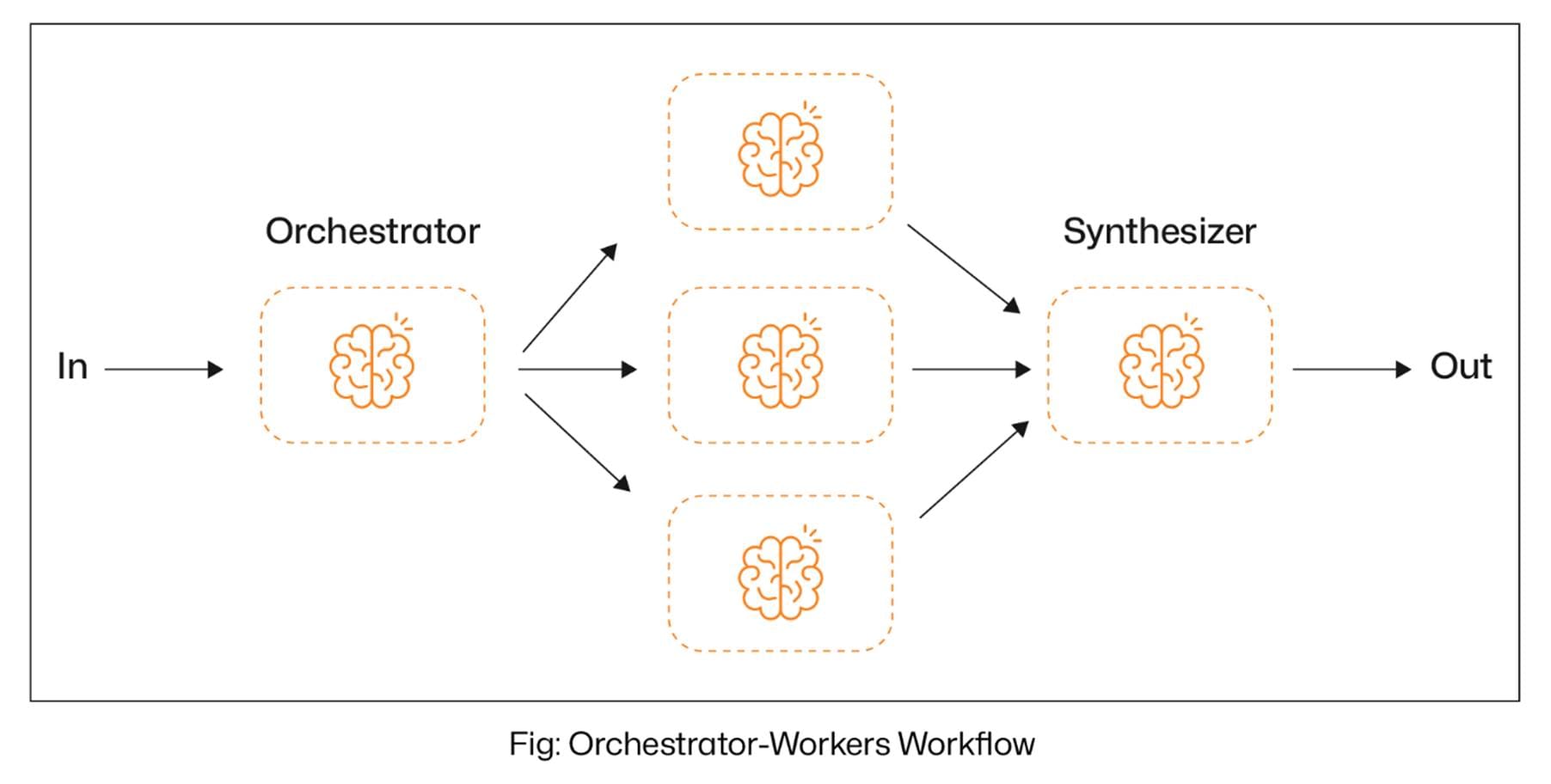

However, the Orchestrator-Workers Workflow Agents in LangGraph introduce a more flexible and intelligent approach to address this challenge. Instead of relying on static task definitions, a central orchestrator LLM dynamically analyzes the input, determines the required subtasks, and delegates them to specialized worker LLMs. The orchestrator then collects and synthesizes the outputs, ensuring a cohesive final result. These Gen AI services enable real-time decision-making, adaptive task management, and higher accuracy, so that complex workflows are handled with greater agility and precision.

With that in mind, let’s dive into what the Orchestrator-Workers Workflow Agent in LangGraph is all about.

Inside LangGraph’s Orchestrator-Workers Agent: Smarter Task Distribution

The Orchestrator-Workers Workflow Agent in LangGraph is designed for dynamic task delegation. In this setup, a central orchestrator LLM analyzes the input, breaks it down into smaller subtasks, and assigns them to specialized worker LLMs. Once the worker agents complete their tasks, the orchestrator synthesizes their outputs into a cohesive final result.
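Stripped of any framework, the plan, delegate, and synthesize flow can be sketched in plain Python. This is only an illustration of the pattern, not LangGraph code: `run_orchestrator_workers` and the three `fake_*` functions are hypothetical names, and the fake functions stand in for the LLM calls so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_orchestrator_workers(
    request: str,
    plan: Callable[[str], List[str]],
    work: Callable[[str], str],
    synthesize: Callable[[List[str]], str],
) -> str:
    """Plan subtasks at run time, fan them out to workers, then merge the results."""
    subtasks = plan(request)  # the orchestrator decides the subtasks dynamically
    if not subtasks:
        return synthesize([])
    # One worker per subtask; pool.map returns results in subtask order.
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:
        outputs = list(pool.map(work, subtasks))
    return synthesize(outputs)


# Hypothetical stand-ins for the LLM calls, so the sketch runs offline.
def fake_plan(request: str) -> List[str]:
    return [f"{request}: introduction", f"{request}: challenges"]


def fake_work(subtask: str) -> str:
    return f"[draft for {subtask}]"


def fake_synthesize(outputs: List[str]) -> str:
    return "\n".join(outputs)


result = run_orchestrator_workers("agentic RAG", fake_plan, fake_work, fake_synthesize)
print(result)
```

The number of workers is never hard-coded: it follows from whatever list the planning step returns, which is the property that distinguishes this pattern from a fixed parallel workflow.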

The main advantages of using the Orchestrator-Workers workflow agent are:

- Adaptive Task Handling: Subtasks aren’t predefined but determined dynamically, making the workflow highly flexible.

- Scalability: The orchestrator can efficiently manage and scale multiple worker agents as needed.

- Improved Accuracy: The system produces more precise and context-aware results by dynamically delegating tasks to specialized workers.

- Optimized Efficiency: Tasks are distributed efficiently, preventing bottlenecks and enabling parallel execution where possible.

Let’s now look at an example. Let’s build an orchestrator-workers workflow agent that takes the user’s input as a blog topic, such as “write a blog on agentic RAG.” The orchestrator analyzes the topic and plans the various sections of the blog, including introduction, concepts and definitions, current applications, technological advancements, challenges and limitations, and more. Based on this plan, specialized worker nodes are dynamically assigned to each section to generate content in parallel. Finally, the synthesizer aggregates the outputs from all workers to deliver a cohesive final result.

Importing the required libraries.

Now we need to load the LLM. For this blog, we’ll use the qwen2.5-32b model from Groq.

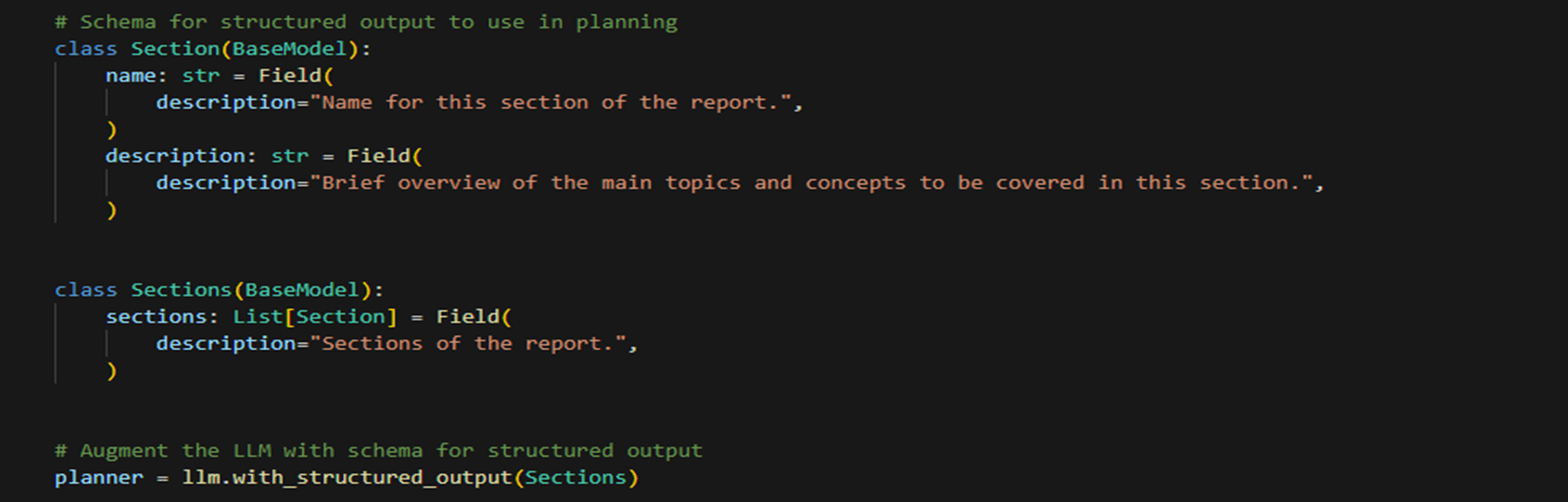

Now, let’s build a Pydantic class to ensure that the LLM produces structured output. In the Pydantic class, we’ll make sure that the LLM generates a list of sections, each containing the section name and description. These sections will later be given to workers so they can work on each section in parallel.

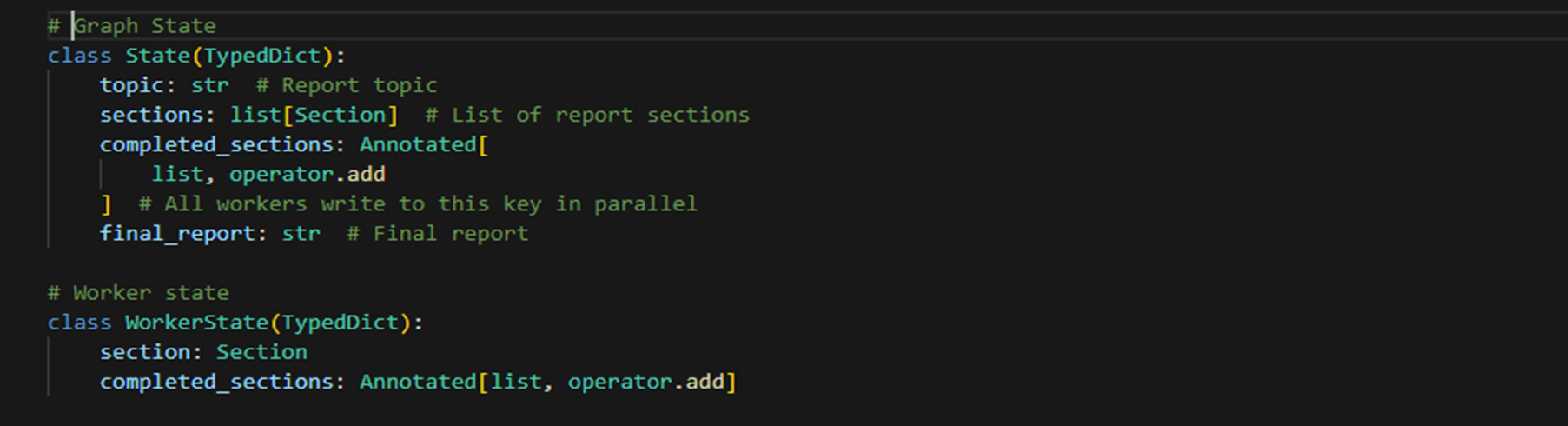
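A minimal sketch of such a schema might look like the following. The class names `Section` and `Sections` and the field descriptions are assumptions, not taken from the original code. LangChain chat models generally accept a class like this through `with_structured_output`, but that call is left out here so the example runs without an API key.

```python
from typing import List

from pydantic import BaseModel, Field


class Section(BaseModel):
    """One planned section of the blog (hypothetical schema)."""

    name: str = Field(description="Name of this section of the blog.")
    description: str = Field(description="Brief overview of what this section should cover.")


class Sections(BaseModel):
    """The orchestrator's full plan: an ordered list of sections."""

    sections: List[Section] = Field(description="Sections of the blog, in order.")


# Validate a plain dict shaped like a structured model response.
plan = Sections(sections=[{"name": "Introduction", "description": "What agentic RAG is."}])
print(plan.sections[0].name)
```

Because the plan is validated against the schema, a response that is missing a name or description fails early instead of reaching the workers.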

Now, we must create the state classes representing a Graph State containing shared variables. We will define two state classes: one for the entire graph state and one for the worker state.

Now, we can define the nodes: the orchestrator node, the worker node, the synthesizer node, and the conditional node.
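The two state classes could be sketched as below. The field names are assumptions, and sections are modeled as plain dictionaries to keep the sketch dependency-free. The important detail is the `Annotated[..., operator.add]` hint: LangGraph treats the second argument as a reducer, so lists returned by parallel workers are concatenated rather than overwritten.

```python
import operator
from typing import Annotated, Dict, List, TypedDict


class State(TypedDict):
    """Shared state for the whole graph (field names are assumptions)."""

    topic: str                        # blog topic supplied by the user
    sections: List[Dict[str, str]]    # plan produced by the orchestrator
    completed_sections: Annotated[List[str], operator.add]  # appended to by workers
    final_report: str                 # written by the synthesizer


class WorkerState(TypedDict):
    """State seen by a single worker: just its own section."""

    section: Dict[str, str]
    completed_sections: Annotated[List[str], operator.add]


# What the reducer does when two workers each return a one-item list:
merged = operator.add(["Intro text"], ["Challenges text"])
print(merged)
```

Both classes declare `completed_sections` with the same reducer, so whatever a worker writes to that key is merged into the graph-level list.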

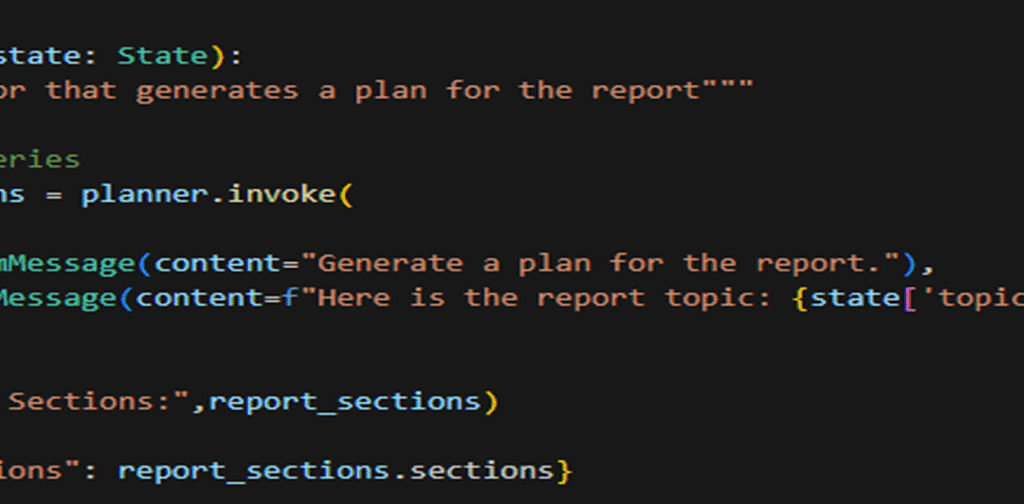

Orchestrator node: This node will be responsible for generating the sections of the blog.

Worker node: This node will be used by workers to generate content for the different sections.

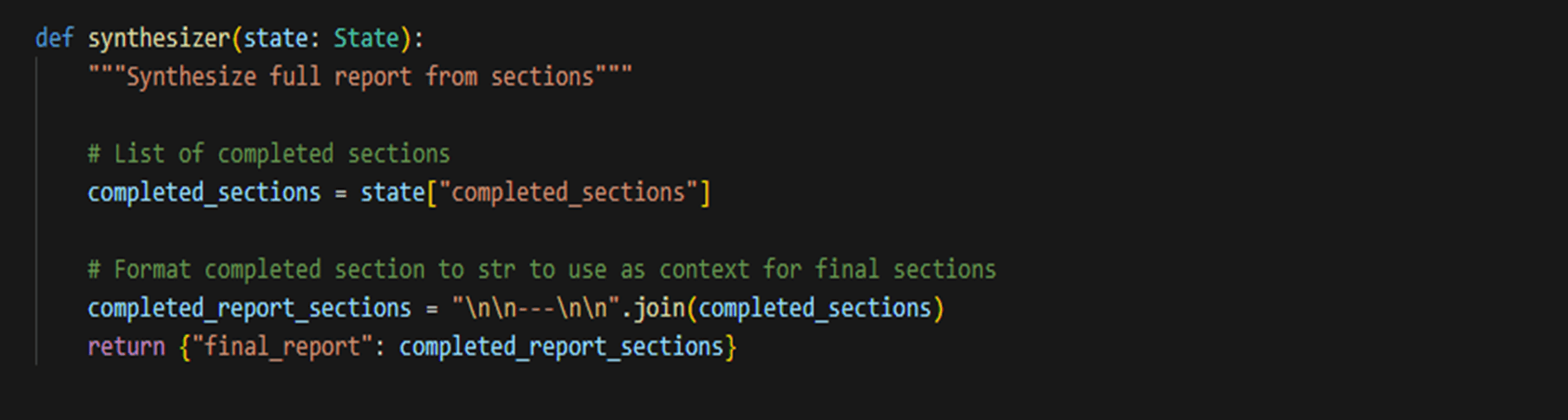

Synthesizer node: This node will take each worker’s output and combine it to generate the final output.

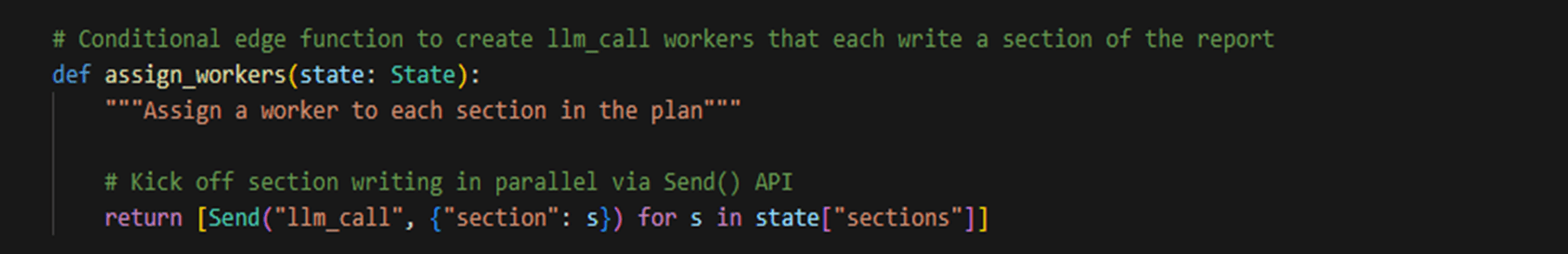

Conditional node to assign workers: This conditional node will be responsible for assigning the different sections of the blog to different workers.

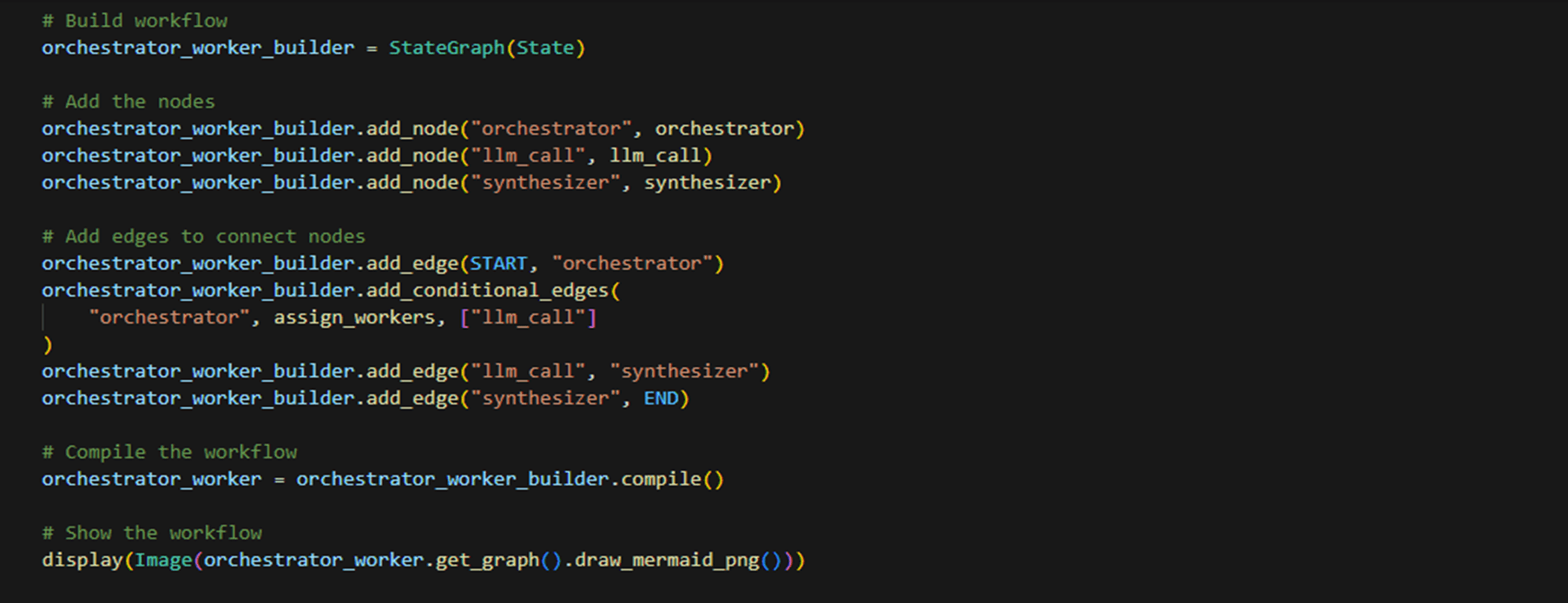

Now, finally, let’s build the graph.

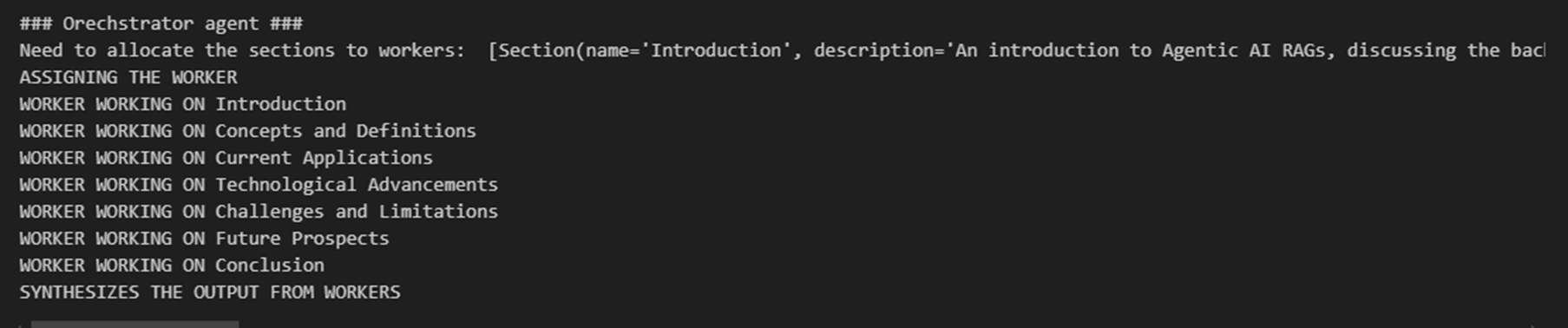

Now, when you invoke the graph with a topic, the orchestrator node breaks it down into sections, and the conditional node evaluates the number of sections and dynamically assigns workers. For example, if there are two sections, then two workers are created. Each worker node then generates content for its assigned section in parallel. Finally, the synthesizer node combines the outputs into a cohesive blog, ensuring an efficient and organized content creation process.

There are other use cases as well that we can solve using the Orchestrator-Workers workflow agent. Some of them are listed below:

- Automated Test Case Generation – Streamlining unit testing by automatically generating code-based test cases.

- Code Quality Assurance – Ensuring consistent code standards by integrating automated test generation into CI/CD pipelines.

- Software Documentation – Generating UML and sequence diagrams for better project documentation and understanding.

- Legacy Code Refactoring – Assisting in modernizing and testing legacy applications by auto-generating test coverage.

- Accelerating Development Cycles – Reducing manual effort in writing tests, allowing developers to focus on feature development.

The Orchestrator-Workers workflow agent not only boosts efficiency and accuracy but also enhances code maintainability and collaboration across teams.

Closing Lines

To conclude, the Orchestrator-Workers Workflow Agent in LangGraph represents a forward-thinking and scalable approach to managing complex, unpredictable tasks. By using a central orchestrator to analyze inputs and dynamically break them into subtasks, the system effectively assigns each task to specialized worker nodes that operate in parallel.

A synthesizer node then seamlessly integrates these outputs, ensuring a cohesive final result. Its use of state classes for managing shared variables and a conditional node for dynamically assigning workers supports scalability and adaptability.

This flexible architecture not only improves efficiency and accuracy but also adapts to varying workloads by allocating resources where they’re needed most. In short, its versatile design paves the way for improved automation across diverse applications, ultimately fostering greater collaboration and accelerating development cycles in today’s dynamic technological landscape.