At the upcoming WWDC, Apple is expected to announce an on-device large language model (LLM). The next version of the iOS SDK will likely make it easier for developers to integrate AI features into their apps. While we wait for Apple to debut its own generative AI models, companies like OpenAI and Google already provide SDKs for iOS developers to incorporate AI features into mobile apps. In this tutorial, we’ll explore Google Gemini, formerly known as Bard, and demonstrate how to use its API to build a simple SwiftUI app.

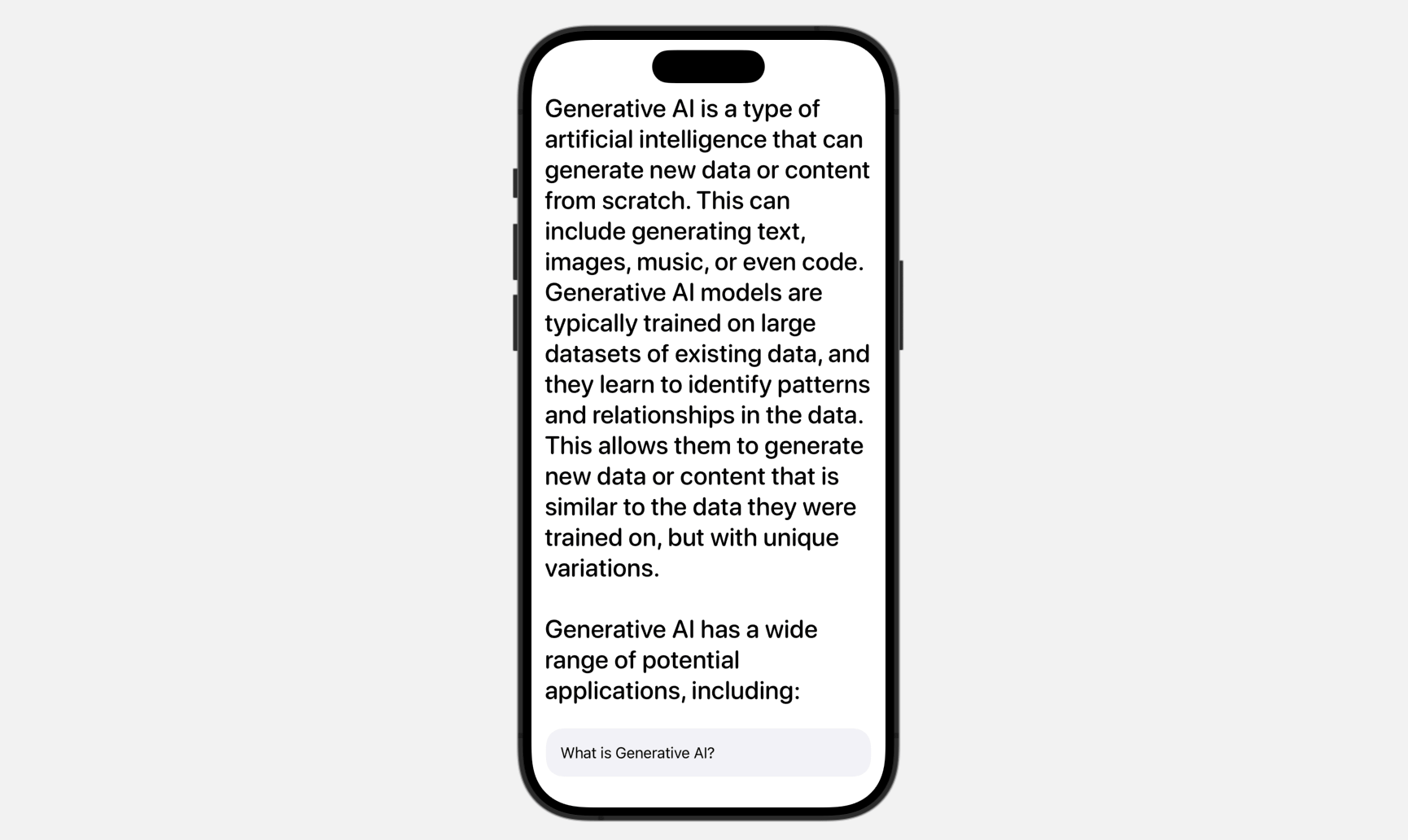

We’re going to build a Q&A app that uses the Gemini API. The app features a simple UI with a text field for users to enter their questions. Behind the scenes, we’ll send the user’s question to Google Gemini to retrieve the answer.

Please note that you need Xcode 15 (or later) to follow this tutorial.

Getting Started with Google Gemini APIs

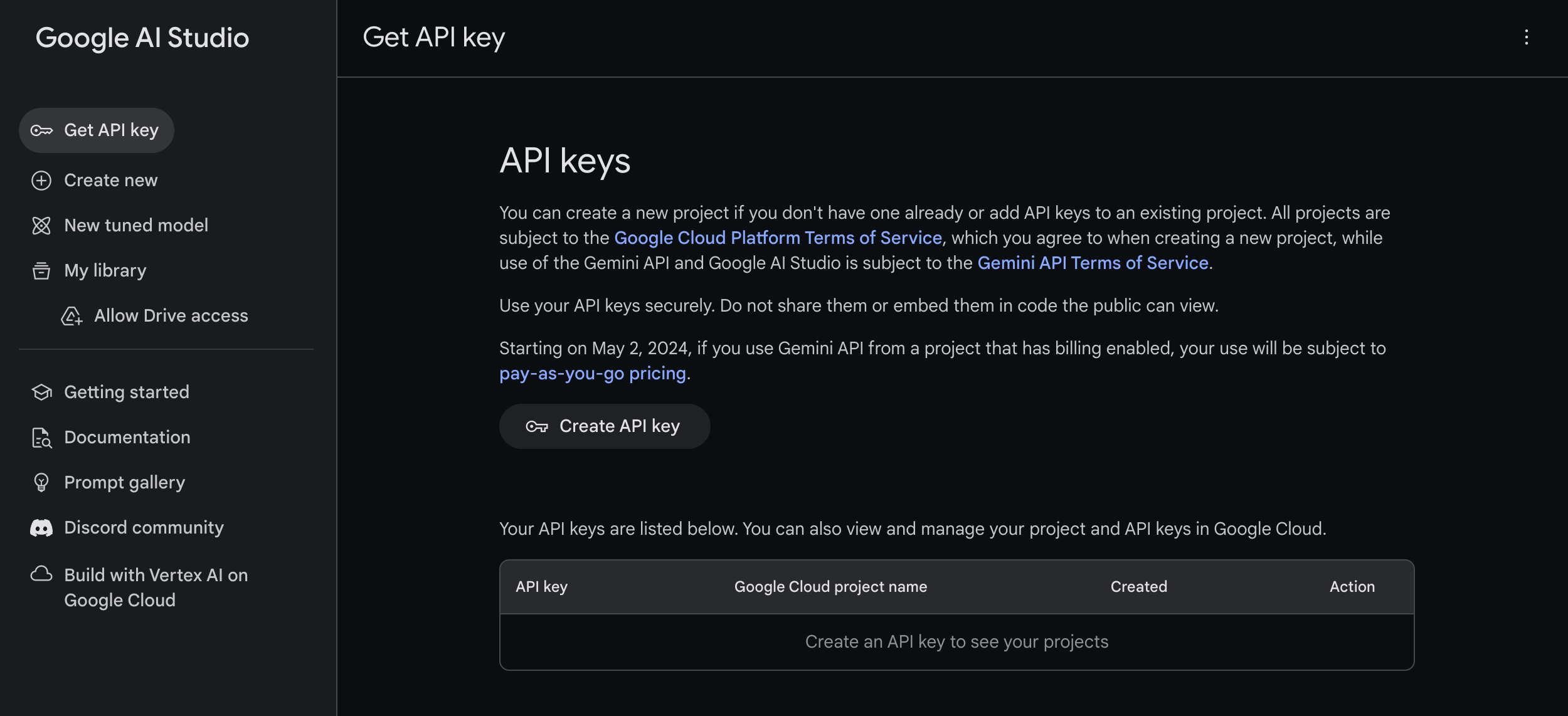

Assuming that you haven’t worked with Gemini, the very first thing to do is get an API key for using the Gemini APIs. To create one, go to Google AI Studio and click the Create API key button.

Using Gemini APIs in Swift Apps

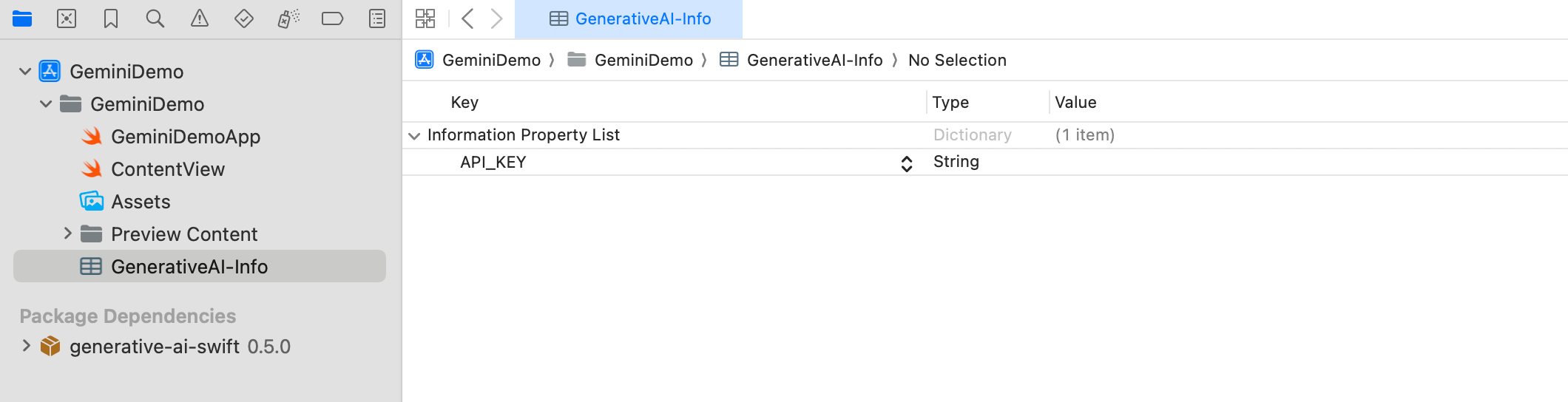

You should now have created the API key. We’ll use this in our Xcode project. Open Xcode and create a new SwiftUI project, which I’ll call GeminiDemo. To store the API key, create a property file named GenerativeAI-Data.plist. In this file, create a key named API_KEY and enter your API key as the value.

To read the API key from the property file, create another Swift file named APIKey.swift. Add the following code to this file:

    import Foundation

    enum APIKey {
        // Fetch the API key from `GenerativeAI-Data.plist`
        static var `default`: String {
            guard let filePath = Bundle.main.path(forResource: "GenerativeAI-Data", ofType: "plist")
            else {
                fatalError("Couldn't find file 'GenerativeAI-Data.plist'.")
            }
            let plist = NSDictionary(contentsOfFile: filePath)
            guard let value = plist?.object(forKey: "API_KEY") as? String else {
                fatalError("Couldn't find key 'API_KEY' in 'GenerativeAI-Data.plist'.")
            }
            // A value starting with "_" is treated as an unfilled placeholder
            if value.starts(with: "_") {
                fatalError(
                    "Follow the instructions at https://ai.google.dev/tutorials/setup to get an API key."
                )
            }
            return value
        }
    }

If you decide to use a different name for the property file instead of ‘GenerativeAI-Data.plist’, you will need to modify the code in your ‘APIKey.swift’ file. The code references the specific filename when fetching the API key, so any change to the property file name must be reflected in the code for the key to be retrieved successfully.
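The lookup in APIKey.swift stops the app with fatalError when something is missing. If you would rather handle those cases yourself, the same checks can be written to throw instead. The following is only a minimal sketch and not part of the original project: `APIKeyError` and `loadAPIKey(fromPlistData:)` are hypothetical names, and the function takes raw property-list data so it can be tried out without an app bundle.

```swift
import Foundation

// Hypothetical error type for this sketch; not part of the Gemini SDK.
enum APIKeyError: Error, Equatable {
    case invalidPlist
    case missingKey
    case placeholderValue
}

// Parses property-list data and returns the string stored under "API_KEY".
// Mirrors the checks in APIKey.swift, but throws instead of calling fatalError.
func loadAPIKey(fromPlistData data: Data) throws -> String {
    guard
        let object = try? PropertyListSerialization.propertyList(from: data, options: [], format: nil),
        let plist = object as? [String: Any]
    else {
        throw APIKeyError.invalidPlist
    }
    guard let value = plist["API_KEY"] as? String else {
        throw APIKeyError.missingKey
    }
    // A leading underscore is treated as an unfilled placeholder, as in APIKey.swift.
    if value.starts(with: "_") {
        throw APIKeyError.placeholderValue
    }
    return value
}
```

In an app, the data would typically come from reading the plist file in the bundle, and the caller could show a friendly message when an error is thrown.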

Adding the SDK Using Swift Package

The Google Gemini SDK is available as a Swift Package, making it simple to add to your Xcode project. To do this, right-click the project folder in the project navigator and select Add Package Dependencies. In the dialog, enter the following package URL:

https://github.com/google/generative-ai-swift

You can then click the Add Package button to download and incorporate the GoogleGenerativeAI package into the project.

Building the App UI

Let’s start with the UI. It’s straightforward, with only a text field for user input and a label to display responses from Google Gemini.

Open ContentView.swift and declare the following properties:

@State non-public var textInput = ""

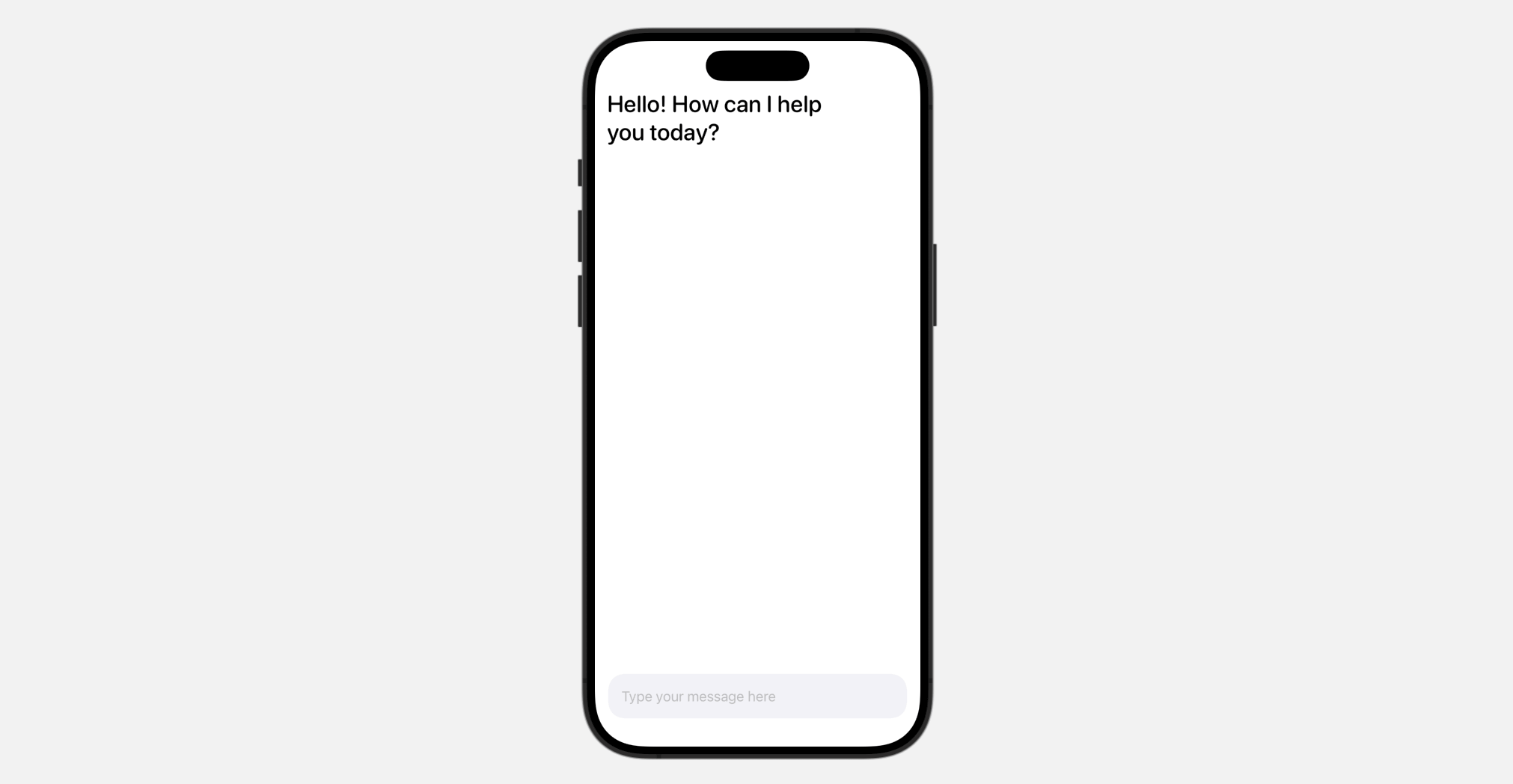

@State non-public var response: LocalizedStringKey = "Hi there! How can I aid you as we speak?"

@State non-public var isThinking = falseThe textInput variable is used to seize person enter from the textual content area. The response variable shows the API’s returned response. Given the API’s response time, we embrace an isThinking variable to watch the standing and present animated results.

For the body variable, replace it with the following code to create the user interface:

    VStack(alignment: .leading) {
        ScrollView {
            VStack {
                Text(response)
                    .font(.system(.title, design: .rounded, weight: .medium))
                    .opacity(isThinking ? 0.2 : 1.0)
            }
        }
        .contentMargins(.horizontal, 15, for: .scrollContent)

        Spacer()

        HStack {
            TextField("Type your message here", text: $textInput)
                .textFieldStyle(.plain)
                .padding()
                .background(Color(.systemGray6))
                .clipShape(RoundedRectangle(cornerRadius: 20))
        }
        .padding(.horizontal)
    }

The code is quite straightforward, especially if you have some experience with SwiftUI. After making the changes, you should see the resulting user interface in the preview.

Integrating with Google Gemini

Before you can use the Google Gemini APIs, you first need to import the GoogleGenerativeAI module:

    import GoogleGenerativeAI

Next, declare a model variable and initialize the generative model like this:

    let model = GenerativeModel(name: "gemini-pro", apiKey: APIKey.default)

Here, we use the gemini-pro model, which is specifically designed to generate text from text input.

To send the text to Google Gemini, let’s create a new function called sendMessage():

    func sendMessage() {
        response = "Thinking..."

        withAnimation(.easeInOut(duration: 0.6).repeatForever(autoreverses: true)) {
            isThinking.toggle()
        }

        Task {
            do {
                let generatedResponse = try await model.generateContent(textInput)

                guard let text = generatedResponse.text else {
                    // Show the failure in the response label and stop the thinking effect
                    response = "Sorry, Gemini got some problems.\nPlease try again later."
                    isThinking.toggle()
                    return
                }

                textInput = ""
                response = LocalizedStringKey(text)
                isThinking.toggle()
            } catch {
                response = "Something went wrong!\n\(error.localizedDescription)"
                isThinking.toggle()
            }
        }
    }

As you can see from the code above, you only need to call the generateContent method of the model to pass in text and receive the generated response. The result is in Markdown format, so we use LocalizedStringKey to wrap the returned text.
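Because sendMessage() calls the network directly, its three outcomes (text returned, no text, error thrown) are hard to exercise without a real API key. One possible way to separate that handling from the SDK call is sketched below. The `TextGenerating` protocol, `StubGenerator`, and `displayText(for:using:)` are hypothetical names introduced for illustration and are not part of the Gemini SDK; in the app, a thin wrapper around the model's generateContent call could conform to the protocol.

```swift
import Foundation

// Hypothetical abstraction over a text generator; not part of the Gemini SDK.
protocol TextGenerating {
    func generate(_ prompt: String) async throws -> String?
}

// Stand-in generator that returns a canned result, useful for previews or tests.
struct StubGenerator: TextGenerating {
    let result: Result<String?, Error>

    func generate(_ prompt: String) async throws -> String? {
        try result.get()
    }
}

// Maps the three outcomes handled in sendMessage() to the text shown to the user.
func displayText<G: TextGenerating>(for prompt: String, using generator: G) async -> String {
    do {
        guard let text = try await generator.generate(prompt) else {
            return "Sorry, Gemini got some problems.\nPlease try again later."
        }
        return text
    } catch {
        return "Something went wrong!\n\(error.localizedDescription)"
    }
}
```

With this split, the view only needs to assign the returned string to its response property, and the stub makes it possible to preview the error states without network access.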

To call the sendMessage() function, update the TextField view and attach the onSubmit modifier to it:

    TextField("Type your message here", text: $textInput)
        .textFieldStyle(.plain)
        .padding()
        .background(Color(.systemGray6))
        .clipShape(RoundedRectangle(cornerRadius: 20))
        .onSubmit {
            sendMessage()
        }

With this change, when the user finishes entering the text and presses the return key, the sendMessage() function is called to submit the text to Google Gemini.
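As written, pressing return on an empty or whitespace-only field still triggers a request. A small check before sending can avoid that. The helper below is a sketch under that assumption; `normalizedPrompt(_:)` is a hypothetical name, not something from the SDK or the original project.

```swift
import Foundation

// Hypothetical helper: trims surrounding whitespace and newlines,
// returning nil when nothing meaningful is left to send.
func normalizedPrompt(_ input: String) -> String? {
    let trimmed = input.trimmingCharacters(in: .whitespacesAndNewlines)
    return trimmed.isEmpty ? nil : trimmed
}
```

Inside sendMessage(), this could be used as `guard let prompt = normalizedPrompt(textInput) else { return }` before the response text is updated, with `prompt` then passed to the model in place of the raw input.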

That’s it! You can now run the app in a simulator or execute it directly in the preview to test the AI feature.

Summary

This tutorial shows how to integrate Google Gemini AI into a SwiftUI app. It only takes a few lines of code to add generative AI features to your app. In this demo, we use the gemini-pro model to generate text from text-only input.

However, the capabilities of Gemini AI aren’t limited to text-based input. Gemini also offers a multimodal model named gemini-pro-vision that allows developers to input both text and images. We encourage you to make the most of this tutorial by modifying the provided code and experimenting with it.

If you have any questions about the tutorial, please let me know by leaving a comment below.